Table of contents

Preamble

How to online refresh some OLAP tables with minimal interruption to your users ? In other words how to still provide access to old tables while you upload fresh figures and perform as quickly as possible the switch to latest figures ? Question has been asked to me on SQL Server database (Azure SQL Database) and tables are generated by Python Spark scripts running in Azure Databricks.

Most of below techniques are not new and some of them are here for more than multiple decades.

Don’t get me wrong it could be acceptable to refresh your OLAP tables with a downtime of x minutes or even x hours if for example your users are all from the same time zone. You could run your refresh process during their night and nobody will never ever see something down. If you start to have users spread all over the globe then finding a suitable slot to not impact anyone can start to be problematic. It would even be impossible if you have to refresh the figures after a fixed company cut off during daylight of a time zone…

The question has been asked on SQL Server with version 2019 for me:

1> select @@version 2> go ---------------------------------------------------------------------------- Microsoft SQL Server 2019 (RTM-CU15) (KB5008996) - 15.0.4198.2 (X64) Jan 12 2022 22:30:08 Copyright (C) 2019 Microsoft Corporation Developer Edition (64-bit) on Linux (Red Hat Enterprise Linux) <X64> |

Running on my Linux Redhat 7.9 (Maipo) test machine that has 12 cores and 64 GB of memory.

But even if this article has been written with SQL Server most of the techniques can be used on many database flavors ! I will try as much as possible to provide inputs for other database flavors… Feel free to add comment if you’d like to share for a particular database flavor I’ll include it !

Preparation of the test environment

Creation of the test database, login and user:

1> create login test_user with password='test_user',check_policy=off,default_database=test 2> go 1> use test 2> go Changed database context to 'test'. 1> create user test_user for login test_user 2> go 1> grant create table to test_user as dbo 2> go 1> grant alter on schema::dbo to test_user 2> go 1> grant select on schema::dbo to test_user 2> go 1> grant execute on schema::dbo to test_user 2> go 1> grant create procedure to test_user 2> go |

Small confirmation it works:

[mssql@server01 ~]$ sqlcmd -U test_user -P 'test_user' -S server01.domain.com,1443 1> select DB_NAME() 2> go -------------------------------------------------------------------------------------------------------------------------------- test (1 rows affected) |

The script to generate and fill with random data my test table:

use test go drop table test01 go create table test01 ( id int primary key, descr varchar(50) ) go grant select on test01 to test_user go sp_help test01 go declare @id int set @id = 1 while @id <= round(rand()*10000+1000,0) begin insert into test01 values (id, 'Description for for id: ' + cast(@id as varchar(10))) set @id = @id + 1 end go |

Then I needed to generate a bit of workload with few SQL / Shell script. I have started with:

[mssql@server01 ~]$ cat query.sql SET NOCOUNT ON WHILE 1 = 1 BEGIN SELECT * FROM dbo.test01 END |

That I execute with sqlcmd tool:

[mssql@server01 ~]$ cat run_query sqlcmd -S server01.domain.com,1443 -U test_user -P test_user -i query.sql |

But I was not able to stop them easily so decided to use this query.sql:

[mssql@server01 ~]$ cat query.sql SELECT * FROM dbo.test01 |

And this small shell script that stop when a stop flag file is created (touch stop_run_query command)

while ! [ -f stop_run_query ] do sqlcmd -S server01.domain.com,1443 -U test_user -P test_user -i query.sql done |

To simulate loading I execute few of them with:

[mssql@server01 ~]$ for i in {1..6}; do ./run_query > /dev/null & done [1] 4687 [2] 4688 [3] 4689 [4] 4690 [5] 4691 [6] 4692 |

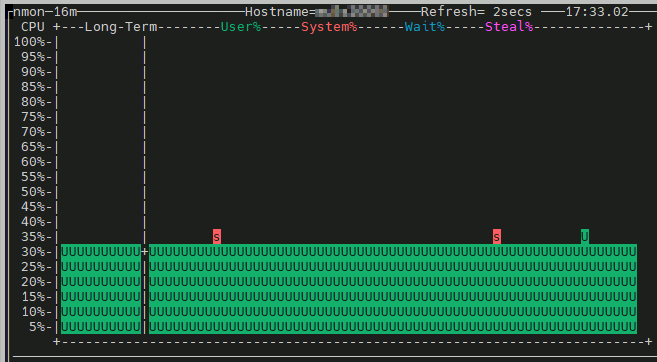

This keeps my test server pretty busy (nmon monitoring tool output):

The old legacy technique

As I have written this technique is even more advanced than simply truncating (or deleting) the table and refresh it with latest figures which is clearly creating a downtime if users try to access figures in the middle of your process…

set nocount on drop table if exists test01_new create table dbo.test01_new ( id int primary key, descr varchar(50) ) print N'Inserting in _new table' declare @id int set @id = 1 while @id <= round(rand()*10000+1000,0) begin insert into test01_new values (@id, 'Description for for id: ' + cast(@id as varchar(10))) set @id = @id + 1 end begin transaction print N'Switching current with _previous' drop table if exists dbo.test01_previous exec sp_rename N'dbo.test01', N'test01_previous' print N'Switching _new with current' exec sp_rename N'dbo.test01_new', N'test01' commit transaction |

I have put the script in a file (rename.sql) and executed it with:

[mssql@server01 ~]$ sqlcmd -U test_user -P 'test_user' -S server01.domain.com,1443 -i "rename.sql" Inserting in _new table Switching current with _previous Caution: Changing any part of an object name could break scripts and stored procedures. Switching _new with current Caution: Changing any part of an object name could break scripts and stored procedures. |

This does not kill the running session and only few users might get an error message but the switch is a matter of few milliseconds…

This technique can be used on almost all database flavors:

- RENAME departments_new TO emp_departments; with Oracle

- RENAME TABLE[S] [IF EXISTS] tbl_name [WAIT n | NOWAIT] TO new_tbl_name; with MariaDB

The alter table switch technique

This method is using the ALTER TABLE SWITCH command to switch (metadata only) the content of your reporting table. This command is a pure SQL Server one and cannot be used, as is, in any other database flavor (as far as I am aware of):

create or alter procedure refresh_switch_test01 as begin set nocount on print N'Creating _new and _keep tables'' skeleton' drop table if exists test01_new drop table if exists test01_keep create table dbo.test01_new ( id int primary key, descr varchar(50) ) create table dbo.test01_keep ( id int primary key, descr varchar(50) ) print N'Inserting in _new table' declare @id int set @id = 1 while @id <= round(rand()*10000+1000,0) begin insert into test01_new values (@id, 'Description for for id: ' + cast(@id as varchar(10))) set @id = @id + 1 end print N'Switching current with _keep and _new with current' begin transaction; alter table dbo.test01 switch to dbo.test01_keep with (wait_at_low_priority (max_duration = 1 minutes, abort_after_wait = blockers)); alter table dbo.test01_new switch to dbo.test01; commit transaction; end |

From official SQL Server documentation:

WAIT_AT_LOW_PRIORITY

Applies to: SQL Server (SQL Server 2014 (12.x) and later) and Azure SQL Database.An online index rebuild has to wait for blocking operations on this table. WAIT_AT_LOW_PRIORITY indicates that the online index rebuild operation waits for low-priority locks, allowing other operations to carry on while the online index build operation is waiting. Omitting the WAIT AT LOW PRIORITY option is the same as WAIT_AT_LOW_PRIORITY ( MAX_DURATION = 0 minutes, ABORT_AFTER_WAIT = NONE).

MAX_DURATION = time [MINUTES ]

Applies to: SQL Server (SQL Server 2014 (12.x) and later) and Azure SQL Database.The wait time, which is an integer value specified in minutes, that the SWITCH or online index rebuild locks wait with low priority when running the DDL command. If the operation is blocked for the MAX_DURATION time, one of the ABORT_AFTER_WAIT actions will run. MAX_DURATION time is always in minutes, and you can omit the word MINUTES.

ABORT_AFTER_WAIT = [NONE | SELF | BLOCKERS } ]

Applies to: SQL Server (SQL Server 2014 (12.x) and later) and Azure SQL Database.NONE

Continue waiting for the lock with normal (regular) priority.SELF

Exit the SWITCH or online index rebuild DDL operation currently being run without taking any action.BLOCKERS

Kill all user transactions that currently block the SWITCH or online index rebuild DDL operation so that the operation can continue.Requires ALTER ANY CONNECTION permission.

Required additional grants:

1> use test 2> go Changed database context to 'test'. 1> grant execute on refresh_switch_test01 to test_user 2> go 1> grant alter any connection to test_user 2> go |

Run it:

[mssql@server01 ~]$ sqlcmd -U test_user -P 'test_user' -S server01.domain.com,1443 -Q "execute refresh_switch_test01" Creating _new and _keep tables' skeleton Inserting in _new table Switching current with _keep and _new with current |

It kills all existing running session selecting from this table and you would have to renew them:

[mssql@server01 ~]$ Sqlcmd: Error: Internal error at ReadAndHandleColumnData (Reason: Error reading column data). Sqlcmd: Error: Internal error at ReadAndHandleColumnData (Reason: Error reading column data). Sqlcmd: Error: Internal error at ReadAndHandleColumnData (Reason: Error reading column data). Sqlcmd: Error: Internal error at ReadAndHandleColumnData (Reason: Error reading column data). Sqlcmd: Error: Internal error at ReadAndHandleColumnData (Reason: Error reading column data). [1] Exit 1 ./run_query > /dev/null [2] Exit 1 ./run_query > /dev/null [3] Exit 1 ./run_query > /dev/null [4] Exit 1 ./run_query > /dev/null [5]- Exit 1 ./run_query > /dev/null [6]+ Exit 1 ./run_query > /dev/null |

With Oracle and MariaDB the similar equivalent approach would be EXCHANGE PARTITION technique:

ALTER TABLE tbl_name EXCHANGE PARTITION part_name WITH TABLE daily_figures WITHOUT VALIDATION; |

ALTER [ONLINE] [IGNORE] TABLE [IF EXISTS] tbl_name [WAIT n | NOWAIT] EXCHANGE PARTITION partition_name WITH TABLE tbl_name |

But, with Oracle, you must purchase the expensive partitioning option of Oracle Enterprise Edition…

The view technique

The principle is a simple create (or alter) view when the newly processed table is ready. In my example I create the new table appending the current time to its name and when this table is ready I recreate the view based on it.

create or alter procedure refresh_view_test01 as begin set nocount on declare @tblname varchar(30) set @tblname='test01_' + format(getdate(), 'yyyyMMddHHmmss') print N'Creating ' + @tblname + ' table' declare @dynamicsql nvarchar(1000) set @dynamicsql = N'drop table if exists ' + @tblname exec(@dynamicsql) print @dynamicsql set @dynamicsql = N'create table ' + @tblname + ' (id int primary key, descr varchar(50))' --create or alter view ' + @tblname + ' as select * from test01' exec(@dynamicsql) print @dynamicsql print N'Inserting in ' + @tblname + ' table' declare @id int set @id = 1 while @id <= round(rand()*10000+1000,0) begin set @dynamicsql = N'insert into ' + @tblname + ' values (' + cast(@id as varchar(10)) + ', ''Description for for id: '' + cast(' + cast(@id as varchar(10)) + ' as varchar(10)))' exec(@dynamicsql) set @id = @id + 1 end print N'Switching view to point on ' + @tblname + ' table' set @dynamicsql = N'create or alter view test01_vw as select * from ' + @tblname exec(@dynamicsql) print @dynamicsql end |

I have given below additional grant to be able to use the new procedure with test_user:

1> use test 2> go Changed database context to 'test'. 1> grant create view to test_user 2> go 1> grant execute on refresh_view_test01 to test_user 2> go |

To refresh the underlining table:

[mssql@server01 ~]$ sqlcmd -U test_user -P 'test_user' -S server01.domain.com,1443 -Q "execute refresh_view_test01" Creating test01_20220411162401 table drop table if exists test01_20220411162401 create table test01_20220411162401 (id int primary key, descr varchar(50)) Inserting in test01_20220411162401 table Switching view to point on test01_20220411162401 table create or alter view test01_vw as select * from test01_20220411162401 |

What’s really cool with this technique is that it does not even kill the running process and they all take into account the new view definition when the current select is over !

This technique can also be used whatever database engine you have chosen and is so really generic !

The synonym technique

This technique is almost equivalent to the view one. Here instead of creating a view we create a synonym on the newly processed tabler. With SQL server the CREATE SYNONYM does not allow to ALTER it if already existing… As we are obliged to drop the synonym I was not sure if privileges would be missing afterwards but it worked without re-granting:

create or alter procedure refresh_synonym_test01 as begin set nocount on declare @tblname varchar(30) set @tblname='test01_' + format(getdate(), 'yyyyMMddHHmmss') print N'Creating ' + @tblname + ' table' declare @dynamicsql nvarchar(1000) set @dynamicsql = N'drop table if exists ' + @tblname exec(@dynamicsql) print @dynamicsql set @dynamicsql = N'create table ' + @tblname + ' (id int primary key, descr varchar(50))' --create or alter view ' + @tblname + ' as select * from test01' exec(@dynamicsql) print @dynamicsql print N'Inserting in ' + @tblname + ' table' declare @id int set @id = 1 while @id <= round(rand()*10000+1000,0) begin set @dynamicsql = N'insert into ' + @tblname + ' values (' + cast(@id as varchar(10)) + ', ''Description for for id: '' + cast(' + cast(@id as varchar(10)) + ' as varchar(10)))' exec(@dynamicsql) set @id = @id + 1 end print N'Switching synonym to point on ' + @tblname + ' table' drop synonym if exists test01_syn set @dynamicsql = N'create synonym test01_syn for ' + @tblname exec(@dynamicsql) print @dynamicsql end |

Additional grants I have given:

1> use test 2> go Changed database context to 'test'. 1> grant execute on refresh_synonym_test01 to test_user 2> go 1> grant create synonym to test_user 2> go |

Simply execute with something like:

[mssql@server01 ~]$ sqlcmd -U test_user -P 'test_user' -S server01.domain.com,1443 -Q "execute refresh_synonym_test01" Creating test01_20220411162208 table drop table if exists test01_20220411162208 create table test01_20220411162208 (id int primary key, descr varchar(50)) Inserting in test01_20220411162208 table Switching synonym to point on test01_20220411162208 table create synonym test01_syn for test01_20220411162208 |

And it did not even impact the running sqlcmd using my run_query shell script…

Same as the view technique this one is also really generic and can be used on almost all database engine…

The schema transfer technique

This technique is purely SQL Server or requires at least the schema principle that Oracle and MariaDB does not have at least. You process the figures in a different schema and when the table is ready you transfer it to the main schema.

I start with new schema creation with sa account and few grants that are maybe too much but I’m not a SQL Server expert:

1> use test 2> go Changed database context to 'test'. 1> create schema processing authorization dbo 2> go 1> create schema previous authorization dbo 2> go 1> grant control on schema::previous to test_user 2> go 1> grant control on schema::processing to test_user 2> go 1> grant control on schema::dbo to test_user 2> go |

I have built below procedure:

create or alter procedure refresh_schema_test01 as begin set nocount on print N'Creating processing.test01 table' drop table if exists processing.test01 create table processing.test01(id int primary key, descr varchar(50)) print N'Inserting in processing.test01 table' declare @id int set @id = 1 while @id <= round(rand()*10000+1000,0) begin insert into processing.test01 values (@id, 'Description for for id: ' + cast(@id as varchar(10))) set @id = @id + 1 end print N'Switching schema to point on processing.test01 table' begin transaction drop table if exists previous.test01 alter schema previous transfer dbo.test01 drop table if exists dbo.test01 alter schema dbo transfer processing.test01 commit transaction end |

The execution is:

[mssql@server01 ~]$ sqlcmd -U test_user -P 'test_user' -S server01.domain.com,1443 -Q "execute refresh_schema_test01" Creating processing.test01 table Inserting in processing.test01 table Switching schema to point on processing.test01 table |

Even if I was not expected it the transfer table has not killed the running sessions. I might have had few queries cancelled but overall you don’t need to re-connect to benefit from the refreshed transferred table…

Conclusion

Even if I have seen multiple blog posts with the newest ALTER TABLE SWITCH overall this is the most complex to implement and the “legacy” old techniques using synonyms or views are working more than well.

With SQL Server the schema technique can also be used and is also pretty simple to implement.

Overall my message here is keep it simple and with low effort you can refresh your tables in background and drastically reduce the downtime for your reporting users !

References

- Refreshing SQL Server Tables With Less Interruption Using Partition Switching

- SQL Server : Implement Large, Fast, Updatable Tables for Responsive Real-Time Reporting

- Why You Should Switch in Staging Tables Instead of Renaming Them (Dear SQL DBA Episode 26)

- How to use switching to make metadata changes online

- SQL SERVER HEKATON – WAIT_AT_LOW_PRIORITY