Table of contents

JDBC client high availability features

You might have build high level architecture to make your Oracle databases highly available. The two main options are:

- Using an Operating System cluster (Veritas, Pacemaker,…) and make your database highly available in an active/passive configuration sometimes switching from one data center to another one physically independent.

- Using Real Application Cluster (RAC) with sometimes a Data Guard (DG) configuration to try to follow Oracle Maximum Availability Architecture (MAA).

But when done are you sure that your developers have implemented all what they could in their Java code to benefit from this ? Have you never heard that they need to shutdown and restart their application when you have switched your Veritas cluster to passive node ? The Oracle features for Java high available are, some are new and some are here for quite a long time:

- Transparent Application Failover (TAF):

Transparent Application Failover (TAF) is a feature of the Java Database Connectivity (JDBC) Oracle Call Interface (OCI) driver. It enables the application to automatically reconnect to a database, if the database instance to which the connection is made fails. In this case, the active transactions roll back.

- Fast Application Notification (FAN):

The Oracle RAC Fast Application Notification (FAN) feature provides a simplified API for accessing FAN events through a callback mechanism. This mechanism enables third-party drivers, connection pools, and containers to subscribe, receive and process FAN events. These APIs are referred to as Oracle RAC FAN APIs in this appendix.

The Oracle RAC FAN APIs provide FAN event notification for developing more responsive applications that can take full advantage of Oracle Database HA features. If you do not want to use Universal

Connection Pool, but want to work with FAN events implementing your own connection pool, then you should use Oracle RAC Fast Application Notification. - Fast Connection Failover (FCF):

The Fast Connection Failover (FCF) feature is a Fast Application Notification (FAN) client implemented through the connection pool. The feature requires the use of an Oracle JDBC driver and an Oracle RAC database or an Oracle Restart on a single instance database.

- Transaction Guard (TG):

Transaction Guard for Java provides transaction idempotence, that is, every transaction has at-most-once execution that prevents applications from submitting duplicate transactions

- Application Continuity (AC):

Oracle Database 12c Release 1 (12.1) introduced the Application Continuity feature that masks database outages to the application and end users are not exposed to such outages.

For definition of Application Continuity / Transaction Guard please check Oracle Application Continuity page. In short:

- Application Continuity (AC) hide database outages from end users and applications by auto-recovering running session, only a delay from application might be seen.

- Transaction Guard (TG) ensure a transaction has been at-most-once executed, occur also in case the session has been unexpectedly ended.

Note that few restrictions apply:

- Oracle recommends not to use TAF and Fast Connection Failover in the same application.

- For Application Continuity feature you must use Transaction Guard 12.2.

- Application Continuity is a feature of the Oracle JDBC Thin driver and is not supported by JDBC OCI driver.

In a previous post I have build a small Oracle 12cR2 database cluster between two virtual machines using a Linux free tool called Pacemaker. As you might guess the idea now is to see how a Java application can benefit from this cluster. But to really benefit from all Java high availability option this entry solution might not be enough and you might need to build an expensive and way more complex RAC cluster.

Most of our production databases are using a premier class cluster call Veritas Cluster Server (VCS) which is (I suppose) a higher level of expertise than my simple Pacemaker one. But whatever the idea remains the same: switch my database to one of the remaining node of my cluster. In many case when we switch the primary node, either to test it or really because we have an outage.

Testing has been done using two virtual machine running Oracle Database 12c Enterprise Edition Release 12.2.0.1.0 – 64bit Production under Oracle Linux Server release 7.4. The two nodes cluster is made of:

- server2.domain.com

- server3.domain.com

The two node have also been used to build a RAC cluster.

The client is a Java program running under Eclipse Oxygen.2 Release (4.7.2). For JDBC OCI driver the Oracle client installed is also 12.2.0.1.0 64 bits.

Which JDBC driver to choose ?

There is a nice page in official documentation called Feature Differences Between JDBC OCI and Thin Drivers that display the key features differences between the two client side available drivers (here is an hard copy for easy reference of this post):

| JDBC OCI Driver | JDBC Thin Driver |

|---|---|

| OCI connection pooling | N/A |

| N/A | Default support for Native XA |

| Transparent Application Failover (TAF) | N/A |

| OCI Client Result Cache | N/A |

| N/A | Application Continuity |

| N/A | Transaction Guard |

| N/A | Support for row count per iteration for array DML |

| N/A | SHA-2 Support in Oracle Advanced Security |

| oraaccess.xml configuration file settings | N/A |

| N/A | Oracle Advanced Queuing |

| N/A | Continuous Query Notification |

| N/A | Support for the O7L_MR client ability |

| N/A | Support for promoting a local transaction to a global transaction |

As we can see it is not super easy to choose one as you have to make a choice between very interesting mutually exclusive features like OCI connection pooling / Transparent Application Failover (TAF) / OCI Client Result Cache and Application Continuity / Transaction Guard…

The JDBC Thin driver is a simple jar file that you add in your CLASSPATH when executing your Java program, the number in ojdbc

| Oracle Database version | JDBC version |

|---|---|

| 12.2 or 12cR2 | JDBC 4.2 in ojdbc8.jar |

| 12.1 or 12cR1 | JDBC 4.1 in ojdbc7.jar JDBC 4.0 in ojdbc6.jar |

| 11.2 or 11gR2 | JDBC 4.0 in ojdbc6.jar JDBC 3.0 in ojdbc5.jar |

The JDBC OCI driver comes with a complete client installation or, starting with 10.1.0, with the OCI Instant Client feature, an option of the instant client installation. The JDBC OCI driver is located in $ORACLE_HOME/bin directory on Windows and $ORACLE_HOME/lib directory on Unix-like. File called ocijdbc

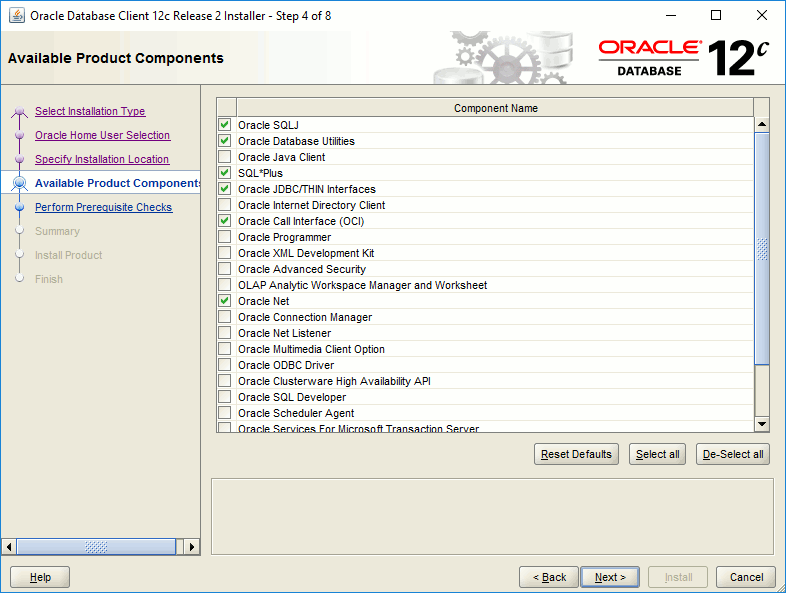

To have the JDBC OCI driver installed you can choose custom installation and select Oracle Call Interface (OCI) in available option:

Real Application Cluster (RAC) with VirtualVox

VirtualBox configuration

There are a lot of articles on Internet on how to create a RAC cluster within VirtualBox. Overall it is not that complex till you have understood the VirutalBox shared storage capability of disk and understood how to configure the different network cards by virtual machine.

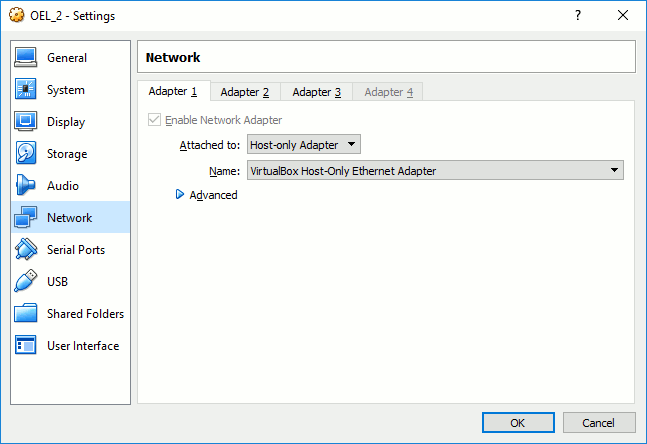

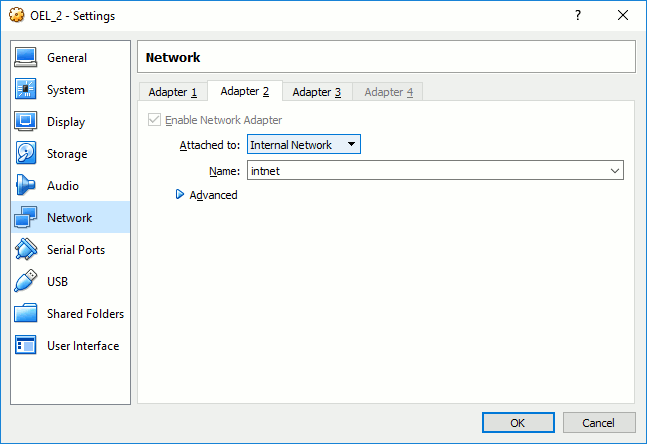

You need three network cars for each VM:

- One for public and administrative (system team) connection (Host-only Adapter)

- One for private cluster interconnect (Internal Network)

- One for Internet access s you will surely need to install few packages (Bridged Adapter)

From VM it gives:

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00 inet 127.0.0.1/8 scope host lo valid_lft forever preferred_lft forever inet6 ::1/128 scope host valid_lft forever preferred_lft forever 2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000 link/ether 08:00:27:4e:19:d5 brd ff:ff:ff:ff:ff:ff inet 192.168.56.102/24 brd 192.168.56.255 scope global eth0 valid_lft forever preferred_lft forever inet 192.168.56.112/24 brd 192.168.56.255 scope global secondary eth0:1 valid_lft forever preferred_lft forever inet 192.168.56.121/24 brd 192.168.56.255 scope global secondary eth0:2 valid_lft forever preferred_lft forever inet6 fe80::a00:27ff:fe4e:19d5/64 scope link valid_lft forever preferred_lft forever 3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000 link/ether 08:00:27:9b:fd:a4 brd ff:ff:ff:ff:ff:ff inet 192.168.1.102/24 brd 192.168.1.255 scope global eth1 valid_lft forever preferred_lft forever inet 169.254.209.44/16 brd 169.254.255.255 scope global eth1:1 valid_lft forever preferred_lft forever inet6 fe80::a00:27ff:fe9b:fda4/64 scope link valid_lft forever preferred_lft forever 4: eth2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000 link/ether 08:00:27:15:09:4b brd ff:ff:ff:ff:ff:ff inet 10.70.101.71/24 brd 10.70.101.255 scope global dynamic eth2 valid_lft 3590sec preferred_lft 3590sec inet6 fe80::a00:27ff:fe15:94b/64 scope link valid_lft forever preferred_lft forever |

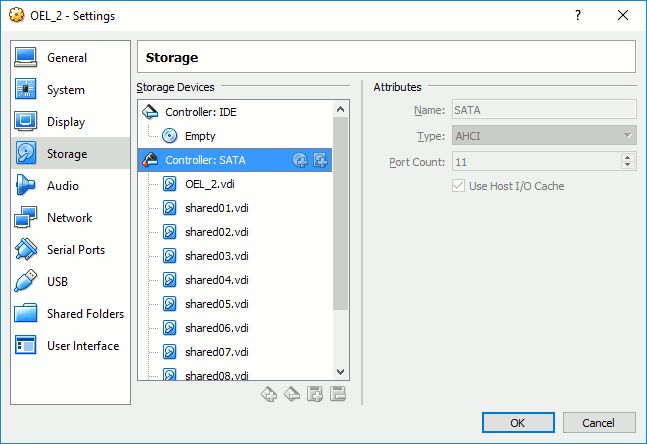

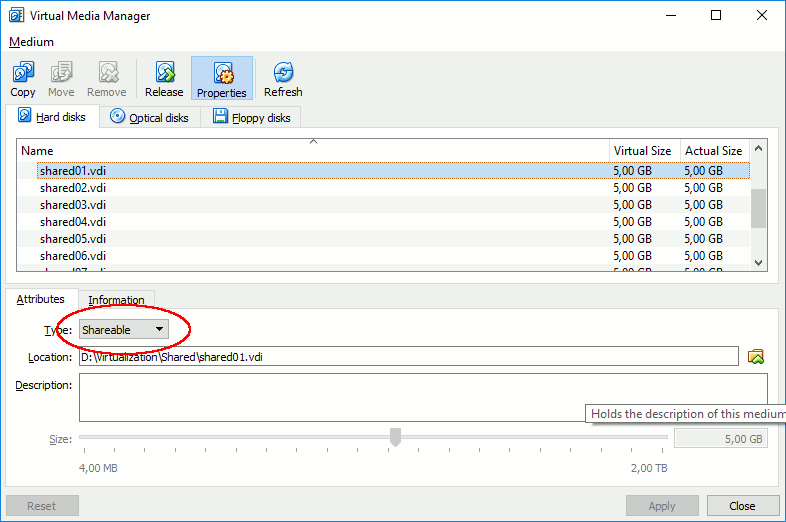

You need shared storage between your Virtual Machines (VM):

Local DNS with dnsmasq

One point that has always been an issue for me is the SCAN name that should be resolved by a DNS server and point onto three different IP. Fortunately I have seen many blog posts mentioning a lightweight DNS server call dnsmasq.

The IP addresses I would like to configure are:

[root@server2 ~]# cat /etc/hosts 127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6 192.168.56.102 server2 server2.domain.com 192.168.56.103 server3 server3.domain.com 192.168.1.102 server2-priv server2-priv.domain.com 192.168.1.103 server3-priv server3-priv.domain.com 192.168.56.112 server2-vip server2-vip.domain.com 192.168.56.113 server3-vip server3-vip.domain.com 192.168.56.121 rac-cluster-scan rac-cluster-scan.domain.com 192.168.56.122 rac-cluster-scan rac-cluster-scan.domain.com 192.168.56.123 rac-cluster-scan rac-cluster-scan.domain.com |

In dnsmasq configuration file (/etc/dnsmasq.conf) I have commented out only one parameter to match my local domain:

local=/domain.com/ |

In /etc/resolf.conf file, to make it persistent across reboot the DHCP third network car should be de-activated:

[root@server2 ~]# cat /etc/resolv.conf ; generated by /usr/sbin/dhclient-script search domain.com nameserver 192.168.56.102 nameserver 164.129.154.205 nameserver 10.129.252.253 |

If you want to keep an Internet access on your virtual machine you can use below nice trick to avoid /etc/resolv.conf being modified by DHCP client:

[root@server4 oracle]# chattr +i /etc/resolv.conf |

Enable and start dnsmasq:

[root@server3 ~]# systemctl enable dnsmasq Created symlink from /etc/systemd/system/multi-user.target.wants/dnsmasq.service to /usr/lib/systemd/system/dnsmasq.service. [root@server3 ~]# systemctl start dnsmasq [root@server3 ~]# systemctl status dnsmasq ● dnsmasq.service - DNS caching server. Loaded: loaded (/usr/lib/systemd/system/dnsmasq.service; enabled; vendor preset: disabled) Active: active (running) since Fri 2018-02-09 16:11:02 CET; 25min ago Main PID: 6338 (dnsmasq) CGroup: /system.slice/dnsmasq.service └─6338 /usr/sbin/dnsmasq -k Feb 09 16:11:02 server3.domain.com systemd[1]: Starting DNS caching server.... Feb 09 16:11:02 server3.domain.com dnsmasq[6338]: started, version 2.76 cachesize 150 Feb 09 16:11:02 server3.domain.com dnsmasq[6338]: compile time options: IPv6 GNU-getopt DBus no-i18n IDN DHCP DHCPv6 no-Lua TFTP no-conntrack ipset auth no-DNSSEC loop-detect inotify Feb 09 16:11:02 server3.domain.com dnsmasq[6338]: using local addresses only for domain domain.com Feb 09 16:11:02 server3.domain.com dnsmasq[6338]: reading /etc/resolv.conf Feb 09 16:11:02 server3.domain.com dnsmasq[6338]: using local addresses only for domain domain.com Feb 09 16:11:02 server3.domain.com dnsmasq[6338]: ignoring nameserver 192.168.56.103 - local interface Feb 09 16:11:02 server3.domain.com dnsmasq[6338]: using nameserver 164.129.154.205#53 Feb 09 16:11:02 server3.domain.com dnsmasq[6338]: using nameserver 10.129.252.253#53 Feb 09 16:11:02 server3.domain.com dnsmasq[6338]: read /etc/hosts - 9 addresses |

It says it is ignoring local nameserver but if you do not add it then it does not work…

And now magically you can nslookup your SCAN cluster name that are round robin displaying your three IP addressees:

[root@server3 ~]# nslookup rac-cluster-scan Server: 192.168.56.103 Address: 192.168.56.103#53 Name: rac-cluster-scan.domain.com Address: 192.168.56.121 Name: rac-cluster-scan.domain.com Address: 192.168.56.122 Name: rac-cluster-scan.domain.com Address: 192.168.56.123 |

The installation of Grid infrastructure should work great. The nasty thing I have discovered with Grid 12cR2 is that the zip file you download is directly the binaries and should be unzip in your final target directory, not anymore in a temporary folder. This will, apparently, be also the case for Oracle software starting with 18c…

References

- JDBC Driver & UCP Downloads

- Oracle Database 12.2.0.1 JDBC Driver & UCP Downloads

- Oracle JDBC FAQ

- JDBC Developer’s Guide

- Starting With Oracle JDBC Drivers – Installation, Certification, and More! (Doc ID 401934.1)

- Understanding Transparent Application Failover (TAF) and Fast Connection Failover (FCF) (Doc ID 334471.1)

- How to Verify Universal Connection Pool (UCP) / Fast Connection Failover (FCF) Setup (Doc ID 1064652.1)

- Master Note for Oracle JDBC High Availability Features (Doc ID 1100024.1)

- Java & JavaScript

- JDBC Learn More

- Oracle Client Failover – Under The Hood

- Client Failover Best Practices for Highly Available Oracle Databases