Preamble

This blog post is about activating Symantec SmartIO for Solid State Drives and using it to cache the most important files of an Oracle database. The SmartIO feature (part of Storage Foundation) caches, in read or writeback mode, the I/O performed on files managed by the SmartIO cache. This post is a follow-up to the Fusion I/O installation and benchmarking post.

Writeback mode returns control to the application as soon as the I/O has been written to the flash cache area. Read mode simply puts blocks in the cache the first time they are read from disk, so that the second and later accesses are faster; at each write, SmartIO synchronizes the cache area with the data on the master disk.

Even if it is attractive, I have not planned to use writeback mode, as it requires more infrastructure (a cache replica) to ensure files are not left in an inconsistent state in case of a crash. Second, our target database will be running on an operating system cluster (Veritas Cluster Server), so switching from one node to the other would trigger an additional step to ensure all I/Os have been written to disk…

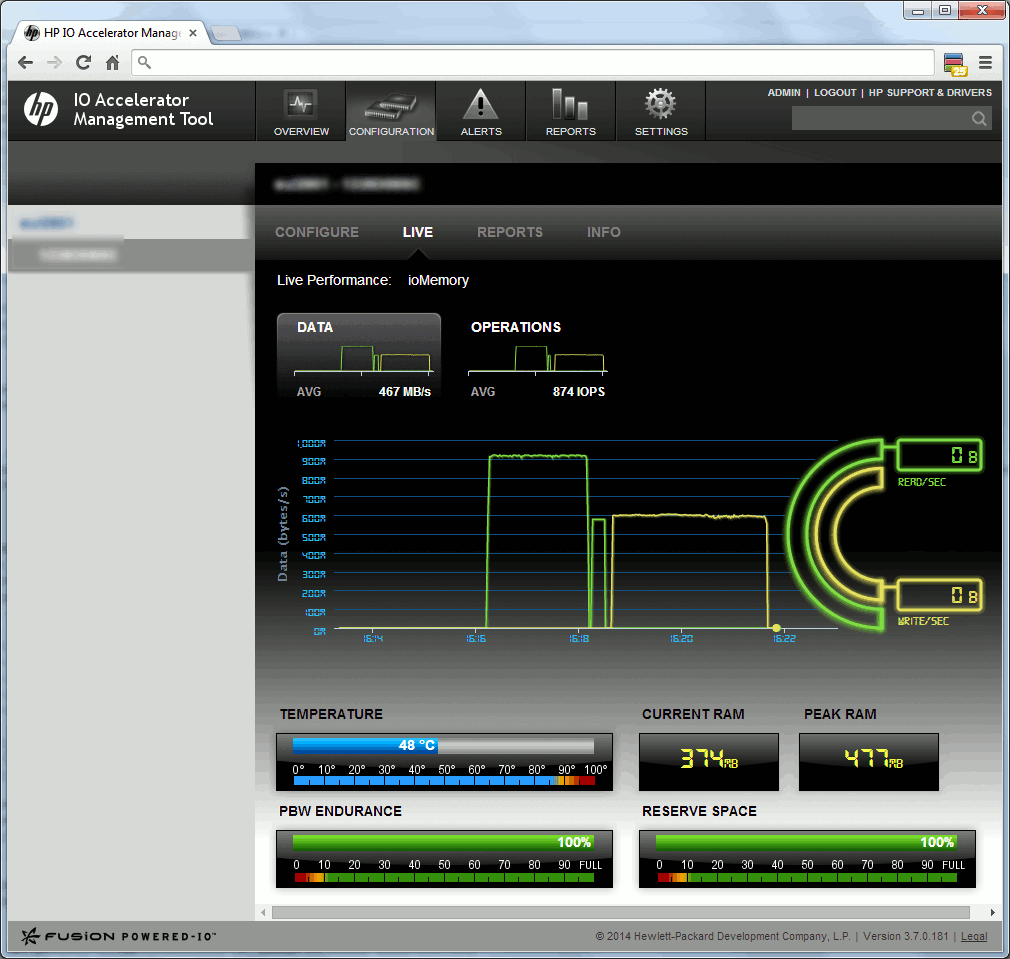

The test server I have used has the Fusion I/O card that we tested in a previous blog post, and I have an 11.2.0.4 Oracle Enterprise Edition database. The recommended feature to implement is called SmartIO caching for Oracle databases on VxFS file systems (according to Symantec support, it is preferred over SmartIO caching for databases on VxVM volumes).

My tests have been done using Symantec Storage Foundation 6.1 running on Red Hat Enterprise Linux Server release 6.4 (Santiago).

SmartIO configuration

Start by adding the following line to /etc/sudoers (with visudo) to give your Oracle Linux account (oradwh for me) access to the fscache binary:

oradwh ALL=(ALL) NOPASSWD: /opt/VRTS/bin/fscache

Remark

fscache is not a typo 🙂 The sfcache app command calls the fscache binary, as the error messages later in this post show.

I’m starting from a Veritas VxVM disk group created on the Fusion I/O card. Just to confirm that nothing is configured yet:

[root@server1 ~]# sfcache list
NAME TYPE SIZE ASSOC-TYPE STATE DEVICE

Now we need to create the cache area. I started by providing the disk group directly:

[root@server1 ~]# sfcache create --noauto fusionio
SFCache ERROR V-5-2-6281 No valid disk(s) provided to create cache area
[root@server1 ~]# vxdg list
NAME         STATE           ID
vgp3801      enabled,cds     1404731405.184.server1
fusionio     enabled,cds     1405514597.10441.server1

But I realized I had to provide both the VxVM disk group and a volume created on it:

[root@server1 ~]# vxassist -g fusionio maxsize
Maximum volume size: 712802304 (348048Mb)
[root@server1 ~]# vxassist -g fusionio make lvol01 712802304
[root@server1 ~]# vxassist -g fusionio maxsize
VxVM vxassist ERROR V-5-1-15809 No free space remaining in diskgroup fusionio with given constraints

Second attempt:

[root@server1 ~]# sfcache create --noauto fusionio/lvol01
SFCache ERROR V-5-2-0 Caching is not applicable for specified volume as DG version should be greater than 180

So I have to upgrade the disk group version:

[root@server1 ~]# vxdg -q list fusionio |grep version
version: 180
[root@server1 ~]# vxdg upgrade fusionio
[root@server1 ~]# vxdg -q list fusionio |grep version
version: 190
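To avoid discovering the disk group version problem only when creating the cache area, the check could be scripted. This is just a sketch: dg_version_ok is a name I made up for illustration, and the threshold of 180 is taken from the sfcache error message above, not from the documentation.

```shell
# Succeed (exit status 0) when the disk group version read on stdin is
# greater than 180, the limit quoted by the sfcache error message.
# Expected input: the output of "vxdg -q list <diskgroup> | grep version".
# dg_version_ok is a hypothetical helper, not a Veritas command.
dg_version_ok() {
    awk '$1 == "version:" { found = 1; ok = ($2 + 0 > 180) }
         END { exit !(found && ok) }'
}

# Possible usage on a real server (not executed here):
#   vxdg -q list fusionio | dg_version_ok || vxdg upgrade fusionio
```

With the outputs shown above, the function fails for version 180 and succeeds for version 190.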

Third attempt:

[root@server1 ~]# sfcache create -t vxfs --noauto fusionio/lvol01
[root@server1 ~]# sfcache list
NAME              TYPE   SIZE      ASSOC-TYPE   STATE    DEVICE
fusionio/lvol01   VxFS   339.89g   NOAUTO       ONLINE   fusionio0_0
[root@server1 ~]# sfcache list -l
Cachearea: fusionio/lvol01
Assoc Type: NOAUTO
Type: VxFS
Size: 339.89g
State: ONLINE
FSUUID                                             SIZE   MODE      MOUNTPOINT
000000000000000000000000000000000000000000000000   0 KB   nocache   /ora_dwh
4edee453af0c0f0064af0000cda6e12ee70300000b82ba53   0 KB   nocache   /ora_dwh/arch
92dee453d3c40b00feaf0000e3077658e70300001283ba53   0 KB   nocache   /ora_dwh/ctrl
99dee4531a54070006b0000052fc9e5ae70300002c83ba53   0 KB   nocache   /ora_dwh/data02
9edee4536a2306000ab000008b76b35be70300002e83ba53   0 KB   nocache   /ora_dwh/data03
a0dee4539a8c08000eb00000c1f0c75ce70300003083ba53   0 KB   nocache   /ora_dwh/data04
a4dee453da5e000012b00000f96adc5de70300001482ba53   0 KB   nocache   /ora_dwh/dump
a8dee4539ab0020016b0000031e5f05ee70300003c83ba53   0 KB   nocache   /ora_dwh/index01
aadee45331eb02001ab00000675f0560e70300004283ba53   0 KB   nocache   /ora_dwh/index02
acdee453b90a01001eb000009dd91961e70300004583ba53   0 KB   nocache   /ora_dwh/index03
aedee4538983030022b00000d3532e62e70300004983ba53   0 KB   nocache   /ora_dwh/index04
b2dee45389fa040026b000000bce4263e70300001c82ba53   0 KB   nocache   /ora_dwh/rbs
b8dee453a1550a002bb00000d2669c64e70300001d82ba53   0 KB   nocache   /ora_dwh/software
bcdee45318b30c002fb000000ae1b065e70300005783ba53   0 KB   nocache   /ora_dwh/sys
c0dee4536066020033b00000425bc566e70300005d83ba53   0 KB   nocache   /ora_dwh/temp01
c3dee453185a060037b0000079d5d967e70300005f83ba53   0 KB   nocache   /ora_dwh/temp02
c7dee45300d507003db00000cb8c7869e703000000fac853   0 KB   nocache   /ora_dwh/data_tmp
fbdbe45314380d00d2ad000010ab5742e7030000f882ba53   0 KB   nocache   /ora_dwh/log
eba6e4535d5d0b004f740000d96e2b3be70300002983ba53   0 KB   nocache   /ora_dwh/data01

No interesting statistics so far:

[root@server1 ~]# sfcache stat fusionio/lvol01
TYPE: VxFS
NAME: fusionio/lvol01
Cache Size: 339.9 GB
Cache Utilization: 94.5 MB ( 0.03 %)
File Systems Using Cache: 0
Writeback Cache Use Limit: Unlimited
Writeback Flush Timelag: 10 s
         Read Cache                             Writeback
         Hit Ratio   Data Read   Data Written   Hit Ratio   Data Written
Total:   0.00 %      0 KB        0 KB           0.00 %      0 KB

Oracle configuration

My Oracle Linux account (oradwh) has its environment well set up:

[oradwh@server1 ~]$ env |grep ORA
ORACLE_SID=dwh
ORACLE_BASE=/ora_dwh
ORACLE_HOME=/ora_dwh/software

So I issued the documented command:

[oradwh@server1 ~]$ sfcache app cachearea=fusionio/lvol01 oracle -S $ORACLE_SID -H $ORACLE_HOME -o setdefaults --type=olap
INFO: Oracle Instance dwh is runnig
INFO: Store DB details at /ora_dwh/sys/dwh/.CACHE_INFO
INFO: Setting olap policies
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo01.log
UX:vxfs fscache: ERROR: V-3-28050: Failed to set caching mode to /ora_dwh/log/dwh/redo01.log: Inappropriate ioctl for device
SFCache ERROR V-5-2-0 Unknown Error. Please check logs at /etc/vx/log/sfcache.log .

The sfcache.log file does not help at all…

While investigating why the command was failing (with the help of Symantec support), I tried to set the VxFS mount option on an Oracle mount point and got the error below:

[root@server1 ~]# mount -t vxfs -o remount,smartiomode=read /dev/vx/dsk/vgp3801/lvol13 /ora_dwh/log
UX:vxfs mount.vxfs: ERROR: V-3-28217: smartiomode=read mount option supported only on layout versions >= 10.

So I tried to upgrade the VxFS layout:

[root@server1 ~]# /opt/VRTS/bin/fstyp -v /dev/vx/dsk/vgp3801/lvol13 | grep version
magic a501fcf5 version 9 ctime Mon 07 Jul 2014 01:22:32 PM CEST
[root@server1 ~]# /opt/VRTS/bin/vxupgrade
UX:vxfs vxupgrade: INFO: V-3-22568: usage: vxupgrade [-n new_version] [-r rawdev] mount_point
[root@server1 ~]# /opt/VRTS/bin/vxupgrade -n 10 /ora_dwh/log
[root@server1 ~]# /opt/VRTS/bin/fstyp -v /dev/vx/dsk/vgp3801/lvol13 | grep version
magic a501fcf5 version 10 ctime Mon 07 Jul 2014 01:22:32 PM CEST

Now the remount works fine:

[root@server1 ~]# mount -t vxfs -o remount,smartiomode=read /dev/vx/dsk/vgp3801/lvol13 /ora_dwh/log
[root@server1 ~]# mount | grep /ora_dwh/log
/dev/vx/dsk/vgp3801/lvol13 on /ora_dwh/log type vxfs (rw,delaylog,largefiles,ioerror=mwdisable,smartiomode=read)

The sfcache app command now goes a bit further but still fails:

[oradwh@server1 ~]$ sfcache app cachearea=fusionio/lvol01 oracle -S $ORACLE_SID -H $ORACLE_HOME -o setdefaults --type=olap
INFO: Oracle Instance dwh is runnig
INFO: Store DB details at /ora_dwh/sys/dwh/.CACHE_INFO
INFO: Setting olap policies
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo01.log
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo02.log
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo03.log
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo04.log
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo05.log
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo06.log
INFO: Setting nocache mode to /ora_dwh/software/dbs/arch
UX:vxfs fscache: ERROR: V-3-28032: Failed to stat /ora_dwh/software/dbs/arch: No such file or directory
SFCache ERROR V-5-2-0 Unknown Error. Please check logs at /etc/vx/log/sfcache.log .

I corrected this at Oracle level (even though my database is in NOARCHIVELOG mode, I had to set the archive destination) with:

SQL> ALTER SYSTEM SET log_archive_dest_1='location="/ora_dwh/arch/dwh"' scope=both;
SYSTEM altered.

I then got the same Inappropriate ioctl for device error message again, for another file system:

[oradwh@server1 ~]$ sfcache app cachearea=fusionio/lvol01 oracle -S $ORACLE_SID -H $ORACLE_HOME -o setdefaults --type=olap
INFO: Oracle Instance dwh is runnig
INFO: Store DB details at /ora_dwh/sys/dwh/.CACHE_INFO
INFO: Setting olap policies
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo01.log
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo02.log
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo03.log
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo04.log
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo05.log
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo06.log
INFO: Setting nocache mode to /ora_dwh/arch/dwh
INFO: Setting read mode to /ora_dwh/temp01/dwh/temp01.dbf
UX:vxfs fscache: ERROR: V-3-28050: Failed to set caching mode to /ora_dwh/temp01/dwh/temp01.dbf: Inappropriate ioctl for device
SFCache ERROR V-5-2-0 Unknown Error. Please check logs at /etc/vx/log/sfcache.log .

So I decided to upgrade all file systems to layout 10, and this time the command finally worked:

[oradwh@server1 dbs]$ sfcache app cachearea=fusionio/lvol01 oracle -S $ORACLE_SID -H $ORACLE_HOME -o setdefaults --type=olap
INFO: Oracle Instance dwh is runnig
INFO: Store DB details at /ora_dwh/sys/dwh/.CACHE_INFO
INFO: Setting olap policies
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo01.log
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo02.log
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo03.log
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo04.log
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo05.log
INFO: Setting nocache mode to /ora_dwh/log/dwh/redo06.log
INFO: Setting nocache mode to /ora_dwh/arch/dwh
INFO: Setting read mode to /ora_dwh/temp01/dwh/temp01.dbf
INFO: Setting read mode to /ora_dwh/temp02/dwh/temp_mrs02.dbf
INFO: Setting read mode to /ora_dwh/temp01/dwh/temp_mrs01.dbf
INFO: Setting read mode to /ora_dwh/data01/dwh/hratedata03.dbf
INFO: Setting read mode to /ora_dwh/data04/dwh/cvto_data02.dbf
INFO: Setting read mode to /ora_dwh/index04/dwh/bckidx05.dbf
INFO: Setting read mode to /ora_dwh/data02/dwh/cvto_data01.dbf
INFO: Setting read mode to /ora_dwh/index04/dwh/dwhidx04.dbf
INFO: Setting read mode to /ora_dwh/data03/dwh/svidata_tt01.dbf
INFO: Setting read mode to /ora_dwh/data03/dwh/rbwdata01.dbf
INFO: Setting read mode to /ora_dwh/data01/dwh/dwhdata07.dbf
INFO: Setting read mode to /ora_dwh/data02/dwh/e2dwhdata05.dbf
.
.
.
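Upgrading every file system to layout 10 by hand is tedious, so the check could be scripted along these lines. This is only a sketch: layout_version and upgrade_command are helper names I made up, the parsing assumes the fstyp -v output format shown above, and the commands are printed rather than executed. I only went from layout 9 to 10; I have not checked whether older layouts need intermediate steps.

```shell
# Print the VxFS disk layout version found in "fstyp -v" output read on stdin.
# The output looks like "magic a501fcf5 version 9 ctime ...", so the value is
# the word that follows "version". (Hypothetical helper for this sketch.)
layout_version() {
    awk '{ for (i = 1; i < NF; i++)
               if ($i == "version") { print $(i + 1); exit } }'
}

# Print (dry run) the vxupgrade command for a mount point whose layout is
# older than 10. Prints nothing when no upgrade is needed.
# $1 = current layout version, $2 = mount point
upgrade_command() {
    if [ "$1" -lt 10 ]; then
        echo "/opt/VRTS/bin/vxupgrade -n 10 $2"
    fi
}

# Possible usage on a real server (not executed here):
#   v=$(/opt/VRTS/bin/fstyp -v /dev/vx/dsk/vgp3801/lvol13 | layout_version)
#   upgrade_command "$v" /ora_dwh/log
```

Printing the commands first lets you review the list of mount points before touching any file system.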

But if you display the attributes of a datafile (or tablespace) whose caching mode is supposed to be read, you will see that it is still in nocache mode:

[oradwh@server1 ~]$ sfcache app cachearea=fusionio/lvol01 oracle -S $ORACLE_SID -H $ORACLE_HOME -o list --datafile=/ora_dwh/data01/dwh/users01.dbf
INFO: Oracle Instance dwh is runnig
INFO: Store DB details at /ora_dwh/sys/dwh/.CACHE_INFO
FILENAME                          MODE      PINNED   CACHE_USED
--------                          ----      ------   ----------
/ora_dwh/data01/dwh/users01.dbf   nocache   no       0 KB

This is simply because we created the cache area in noauto mode, so you need to enable SmartIO for the file system first:

[root@server1 ~]# sfcache enable /ora_dwh/data01

Now the display is correct and SmartIO read caching is activated:

[oradwh@server1 ~]$ sfcache app cachearea=fusionio/lvol01 oracle -S $ORACLE_SID -H $ORACLE_HOME -o list --datafile=/ora_dwh/data01/dwh/users01.dbf
INFO: Oracle Instance dwh is runnig
INFO: Store DB details at /ora_dwh/sys/dwh/.CACHE_INFO
FILENAME                          MODE   PINNED   CACHE_USED
--------                          ----   ------   ----------
/ora_dwh/data01/dwh/users01.dbf   read   no       0 KB
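With a noauto cache area each file system has to be enabled one by one, so the list of sfcache enable commands could be generated from the sfcache list -l output shown earlier. This is a rough sketch: nocache_mountpoints and enable_commands are names I invented, the column layout (FSUUID, two-word SIZE, MODE, MOUNTPOINT) is assumed from that output, and the commands are only printed.

```shell
# Print the mount points still in "nocache" mode, reading "sfcache list -l"
# output on stdin. Assumed columns: FSUUID, SIZE (two words such as "0 KB"),
# MODE, MOUNTPOINT. (Hypothetical helper for this sketch.)
nocache_mountpoints() {
    awk '$4 == "nocache" && $5 ~ /^\// { print $5 }'
}

# Print (dry run) one "sfcache enable" command per nocache mount point that
# matches the extended regular expression given as $1, e.g. 'data|index'.
enable_commands() {
    nocache_mountpoints | grep -E "$1" | sed 's/^/sfcache enable /'
}

# Possible usage on a real server (not executed here):
#   sfcache list -l | enable_commands 'data|index'
```

The filter argument is there because you probably do not want to enable caching on every file system, for example the software or dump ones.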

Please note that even if I activate it for my redo log directory, the redo log files will not be in read cache mode. This is expected, as their mode was set to nocache by the sfcache app command issued with the Oracle Linux account:

[root@server1 ~]# sfcache enable /ora_dwh/log
[root@server1 ~]# sfcache list -r /ora_dwh/log
/ora_dwh/log:
READ CACHE   WRITEBACK   MODE      PINNED   NAME
0 KB         0 KB        nocache   no       /ora_dwh/log/dwh/redo01.log
0 KB         0 KB        nocache   no       /ora_dwh/log/dwh/redo02.log
0 KB         0 KB        nocache   no       /ora_dwh/log/dwh/redo03.log
0 KB         0 KB        nocache   no       /ora_dwh/log/dwh/redo04.log
0 KB         0 KB        nocache   no       /ora_dwh/log/dwh/redo05.log
0 KB         0 KB        nocache   no       /ora_dwh/log/dwh/redo06.log
0 KB         0 KB        read      no       /ora_dwh/log/dwh
0 KB         0 KB        read      no       /ora_dwh/log

Symantec's recommendation is to pin the most-read datafiles in the cache area. By doing this, free space in tablespaces will not end up in the SmartIO cache area. The datafiles ordered by IOPS can easily be found in an AWR report. Please note that pinning and loading (sfcache pin with the -o load option, or sfcache load directly) is not suggested, as it would also put empty blocks in the SmartIO cache area. For reference, here is what the official documentation says:

- The load operation preloads files into the cache before the I/O accesses the files. The files are already in the cache so that the I/Os return more quickly. By default, the files are loaded in the background. Use the -o sync operation to load the files synchronously, which specifies that the command does not return until all the files are loaded. The files that are loaded in this way are subject to the usual eviction criteria.

- The pin operation prevents the files from being evicted from the cache. You can pin commonly used files so that SmartIO does not evict the files and later need to cache the files again. A pinned file is kept in the cache indefinitely, until it is deleted or explicitly unpinned. If you pin a file with the -o load option, the operation also caches the file contents synchronously. If you do not specify the -o load option, the file contents are cached based on I/O access.

- The unpin operation removes files from the pinned state. The unpin operation does not cause the file to be immediately evicted. SmartIO considers the file for eviction in the same way as any other file, when space is required in the cache.

So I’m pinning in cache the datafile of the tablespace on which I’m planning to do testing:

[oradwh@server1 ~]$ sfcache app cachearea=fusionio/lvol01 oracle -S $ORACLE_SID -H $ORACLE_HOME -o pin --datafile=/ora_dwh/data01/dwh/users01.dbf
INFO: Oracle Instance dwh is runnig
INFO: Store DB details at /ora_dwh/sys/dwh/.CACHE_INFO
INFO: Setting pin policy to /ora_dwh/data01/dwh/users01.dbf
[oradwh@server1 ~]$ sfcache app cachearea=fusionio/lvol01 oracle -S $ORACLE_SID -H $ORACLE_HOME -o list --tablespace=USERS
INFO: Oracle Instance dwh is runnig
INFO: Store DB details at /ora_dwh/sys/dwh/.CACHE_INFO
FILENAME                          MODE   PINNED   CACHE_USED
--------                          ----   ------   ----------
/ora_dwh/data01/dwh/users01.dbf   read   yes      0 KB
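If you follow the advice of pinning the top read datafiles from an AWR report, you will have more than one file to pin. A small sketch of how the commands could be generated: pin_commands and the CACHEAREA variable are names I made up for illustration, and the function only prints the same sfcache app command used above, once per datafile.

```shell
# Print (dry run) the pin command for each datafile passed as an argument.
# CACHEAREA is a hypothetical variable for this sketch; it defaults to the
# cache area used in this post. ORACLE_SID and ORACLE_HOME must be set.
pin_commands() {
    for datafile in "$@"; do
        echo "sfcache app cachearea=${CACHEAREA:-fusionio/lvol01} oracle -S $ORACLE_SID -H $ORACLE_HOME -o pin --datafile=$datafile"
    done
}

# Possible usage with the Oracle Linux account (not executed here):
#   pin_commands /ora_dwh/data01/dwh/users01.dbf /ora_dwh/data01/dwh/dwhdata07.dbf
```

No -o load option is added on purpose, so that only the blocks actually read end up in the cache area.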

Oracle testing

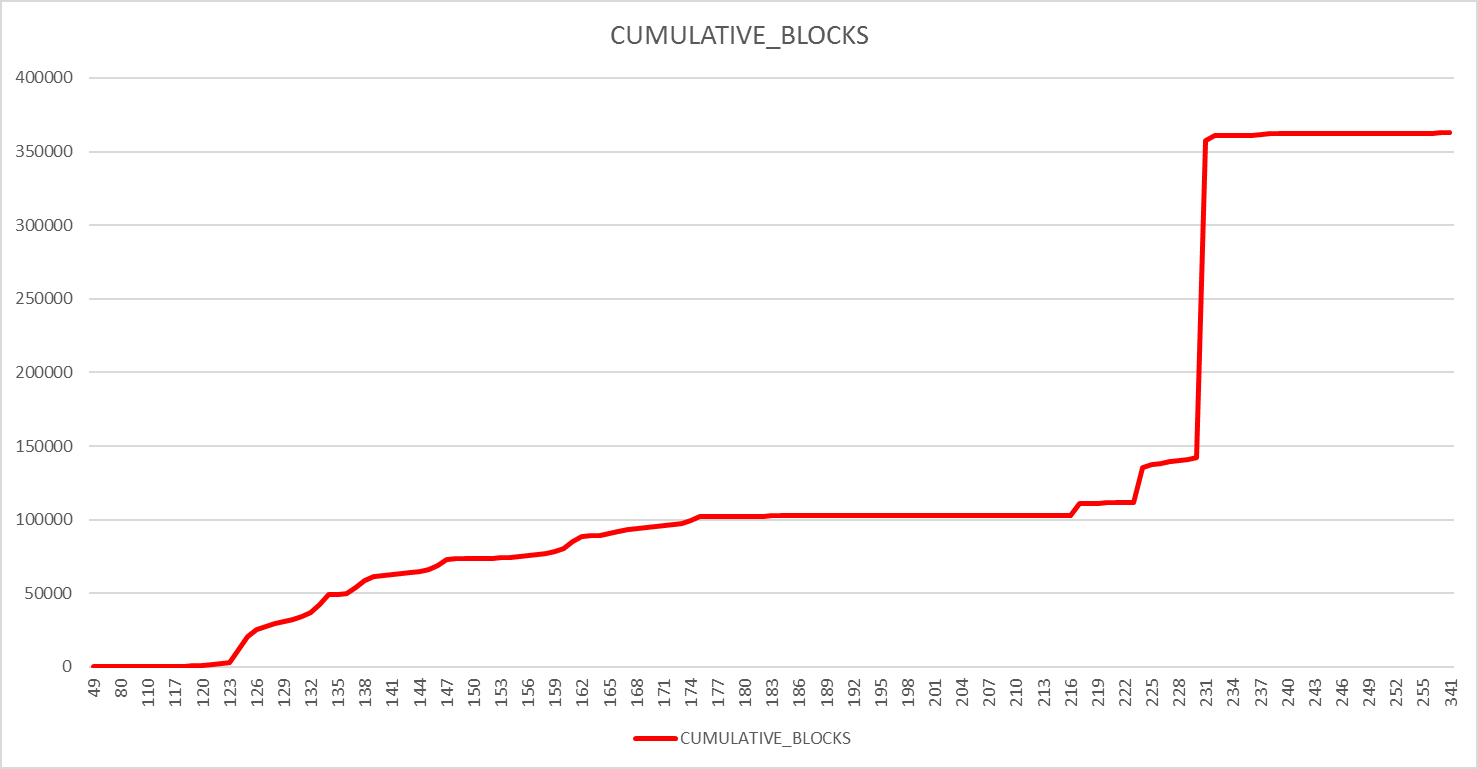

For testing I created in the USERS tablespace the same test table I used for the Bind variables peeking and Adaptive Cursor Sharing (ACS) post, except that I created it with 50,000,000 rows and no index (total size is 3.5 GB). The plan is to do a count(*) on it to perform a Full Table Scan (FTS), flushing the buffer cache before each run to force Oracle to read the blocks from storage (so from disk or from the flash cache).

Once the table is created I purge the cache area to start with a clean situation:

[root@server1 ~]# sfcache purge /ora_dwh/data01
[root@server1 ~]# sfcache stat -r /ora_dwh/data01
[root@server1 ~]# sfcache stat /ora_dwh/data01
Cache Size: 339.9 GB
Cache Utilization: 8 KB ( 0.00 %)
                   Read Cache
                   Hit Ratio   Data Read   Data Written
/ora_dwh/data01:   0.00 %      0 KB        0 KB
[root@server1 ~]# sfcache list /ora_dwh/data01
/ora_dwh/data01:
READ CACHE   WRITEBACK   MODE   PINNED   NAME
0 KB         0 KB        read   no       /ora_dwh/data01/dwh
0 KB         0 KB        read   no       /ora_dwh/data01
[root@server1 ~]# sfcache list /ora_dwh/data01/dwh/users01.dbf
/ora_dwh/data01/dwh/users01.dbf:
READ CACHE   WRITEBACK   MODE   PINNED
0 KB         0 KB        read   yes

Remark:

To bypass Linux file system caching I have mounted /ora_dwh/data01 using the nodatainlog,mincache=direct,convosync=direct options.

Or with the Oracle Linux account:

[oradwh@server1 ~]$ sfcache app cachearea=fusionio/lvol01 oracle -S $ORACLE_SID -H $ORACLE_HOME -o list --tablespace=USERS
INFO: Oracle Instance dwh is runnig
INFO: Store DB details at /ora_dwh/sys/dwh/.CACHE_INFO
FILENAME                          MODE   PINNED   CACHE_USED
--------                          ----   ------   ----------
/ora_dwh/data01/dwh/users01.dbf   read   yes      0 KB

Then I created the small SQL script below. It flushes the buffer cache (which should not be needed for a big table), generates a trace file and performs an FTS on my test table by doing a count(*):

SET autotrace traceonly EXPLAIN STATISTICS
ALTER SYSTEM flush buffer_cache;
ALTER SESSION SET events '10046 trace name context forever, level 12';
SELECT COUNT(*) FROM test1;

After the first run, which is needed to load the SmartIO cache area, I got:

[root@server1 ~]# sfcache stat -l /ora_dwh/data01
Cache Size: 339.9 GB
Cache Utilization: 8 KB ( 0.00 %)
                   Read Cache
                   Hit Ratio   Data Read   Data Written   Files Cached   Files Pinned   Data Pinned
/ora_dwh/data01:   0.00 %      0 KB        0 KB           0              0              0 KB
[root@server1 ~]# sfcache list /ora_dwh/data01/dwh/users01.dbf
/ora_dwh/data01/dwh/users01.dbf:
READ CACHE   WRITEBACK   MODE   PINNED
3.029 GB     0 KB        read   yes

Or with the Oracle Linux account:

[oradwh@server1 trace]$ sfcache app cachearea=fusionio/lvol01 oracle -S $ORACLE_SID -H $ORACLE_HOME -o list --tablespace=USERS
INFO: Oracle Instance dwh is runnig
INFO: Store DB details at /ora_dwh/sys/dwh/.CACHE_INFO
FILENAME                          MODE   PINNED   CACHE_USED
--------                          ----   ------   ----------
/ora_dwh/data01/dwh/users01.dbf   read   yes      3.029 GB

After the second run I got the following (a third run would then bring the hit ratio to 66%, and so on):

[root@server1 ~]# sfcache stat -l /ora_dwh/data01
Cache Size: 339.9 GB
Cache Utilization: 3.029 GB ( 0.89 %)
                   Read Cache
                   Hit Ratio   Data Read   Data Written   Files Cached   Files Pinned   Data Pinned
/ora_dwh/data01:   50.01 %     3.03 GB     3.029 GB       34             1              3.029 GB
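The 50% then 66% progression is simple arithmetic: if every run scans the same data, the first run is all misses and each later run is all hits, so after n runs the hit ratio is (n - 1) / n. A tiny sketch to compute it; expected_hit_ratio is a name I made up, and the model assumes the whole table fits in the cache area and nothing else is read.

```shell
# Print the expected read cache hit ratio (in percent) after $1 identical
# full scans, assuming the first scan only misses and the following ones
# only hit. (Hypothetical helper, simplified model.)
expected_hit_ratio() {
    awk -v n="$1" 'BEGIN { printf "%.2f\n", (n - 1) * 100 / n }'
}

# Possible usage:
#   expected_hit_ratio 2
#   expected_hit_ratio 3
```

The value I measured after the second run (50.01 %) is very close to what this simple model gives for two runs.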

I ran the Oracle tkprof tool on the trace files of my two runs and the difference is quite obvious:

First run in 67 seconds (disk access):

OVERALL TOTALS FOR ALL NON-RECURSIVE STATEMENTS
call     count       cpu    elapsed       disk      query    current        rows
------- ------  -------- ---------- ---------- ---------- ----------  ----------
Parse        5      0.00       0.00          0          0          0           0
Execute      5      0.00       0.00          0          3          3           3
Fetch        4      4.47      27.83     198484     298711          0          10
------- ------  -------- ---------- ---------- ---------- ----------  ----------
total       14      4.47      27.83     198484     298714          3          13
Misses in library cache during parse: 1
Elapsed times include waiting on following events:
  Event waited on                             Times   Max. Wait  Total Waited
  ----------------------------------------   Waited  ----------  ------------
  SQL*Net message to client                       8        0.00          0.00
  SQL*Net message from client                     8       40.05         40.05
  Disk file operations I/O                        2        0.00          0.00
  db file sequential read                         7        0.01          0.02
  direct path read                             3123        0.07         25.24
  db file scattered read                          1        0.01          0.01
  log file sync                                   1        0.00          0.00

Second run in 51 seconds (reads served from the cache area with much lower I/O latency: the fetch elapsed time drops from 27.83 to 7.01 seconds, so around 4 times faster; the rest of both totals is idle SQL*Net message from client wait):

OVERALL TOTALS FOR ALL NON-RECURSIVE STATEMENTS
call     count       cpu    elapsed       disk      query    current        rows
------- ------  -------- ---------- ---------- ---------- ----------  ----------
Parse        5      0.00       0.00          0          0          0           0
Execute      5      0.00       0.00          0          3          3           3
Fetch        4      3.22       7.01     198484     298711          0          10
------- ------  -------- ---------- ---------- ---------- ----------  ----------
total       14      3.22       7.01     198484     298714          3          13
Misses in library cache during parse: 1
Elapsed times include waiting on following events:
  Event waited on                             Times   Max. Wait  Total Waited
  ----------------------------------------   Waited  ----------  ------------
  SQL*Net message to client                       8        0.00          0.00
  SQL*Net message from client                     8       44.31         44.32
  Disk file operations I/O                        3        0.00          0.00
  db file sequential read                         7        0.00          0.00
  direct path read                             3123        0.00          4.05
  db file scattered read                          1        0.00          0.00
  log file sync                                   1        0.00          0.00
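To put numbers on the comparison, the ratios can be computed directly from the two tkprof reports above. This is just a small helper for the arithmetic; speedup is a name I made up, and the values passed to it are copied from the reports.

```shell
# Print how many times faster the second measurement is than the first.
# $1 = time before (seconds), $2 = time after (seconds)
# (Hypothetical helper for this sketch.)
speedup() {
    awk -v before="$1" -v after="$2" 'BEGIN { printf "%.2f\n", before / after }'
}

# Fetch elapsed time, first run versus second run:
#   speedup 27.83 7.01
# Total time waited on "direct path read", first run versus second run:
#   speedup 25.24 4.05
```

The fetch elapsed time gives a ratio of 3.97, and the direct path read wait alone gives 6.23, so the gain on pure I/O wait is even larger than the overall figure suggests.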

How to remove

Removing the cache area is simple, but it must be offline before it can be deleted:

[root@server1 /]# sfcache delete fusionio/lvol01
SFCache ERROR V-5-2-6240 Cache area is not offline
[root@server1 /]# sfcache offline fusionio/lvol01
[root@server1 /]# sfcache delete fusionio/lvol01
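The two steps can be wrapped so that the delete is only attempted once the offline has succeeded. A minimal sketch: remove_cache_area and the RUN variable are names I made up; setting RUN=echo prints the commands instead of executing them.

```shell
# Offline a cache area, then delete it only if the offline step succeeded.
# $1 = cache area name, e.g. fusionio/lvol01
# RUN is a hypothetical variable for this sketch: set RUN=echo for a dry run,
# leave it unset to really execute the sfcache commands (as root).
remove_cache_area() {
    ${RUN:-} sfcache offline "$1" && ${RUN:-} sfcache delete "$1"
}

# Possible usage (dry run):
#   RUN=echo remove_cache_area fusionio/lvol01
```

Running it in dry run mode first is a cheap way to check the cache area name before removing anything.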

Issues encountered

Sfcache stat command not returning anything

[oradwh@server1 ~]$ sfcache app cachearea=fusionio/lvol01 oracle -S $ORACLE_SID -H $ORACLE_HOME -o stat
INFO: Oracle Instance dwh is runnig
INFO: Store DB details at /ora_dwh/sys/dwh/.CACHE_INFO

As you can see, the stat operation returns no statistics at all.

Symantec support informed me that it is a known bug that will be corrected in Storage Foundation 6.1.1. In 6.1.1 the command will still have issues if multiple databases are running on the same server. The complete correction of all bugs is expected to come with a later release…

I have downloaded and applied the 6.1.1 patch using https://sort.veritas.com/patch/finder but it did not solve the issue (which was expected, to be honest)… I need to wait for 6.2 or a future release…

SmartIO meta data cleaning

If, when running multiple tests, you get a strange error like:

UX:vxfs fscache: ERROR: V-3-28056: Failed to pin the file /ora_dwh/data01/dwh/users01.dbf to the cache: No such device
SFCache ERROR V-5-2-0 Unknown Error. Please check logs at /etc/vx/log/sfcache.log .

It means SmartIO is taking the old configuration history and trying to apply it to a newly created cache area. To fix it, delete the .CACHE_INFO directory. Its location is displayed each time you issue an sfcache app command.

Sfcache purge command bug

While performing my tests I used the sfcache purge command to remove the cached content of my /ora_dwh/data01 file system. After that, the command prevented anything from going into the cache for the purged file system (!!). To recover the situation I tried many options and found that the only one that works is to offline/online the cache area:

[root@server1 ~]# sfcache offline fusionio/lvol01
[root@server1 ~]# sfcache online fusionio/lvol01

SmartIO licensing issue

For further testing I wanted to activate writeback mode, but apparently I do not own a valid license. The strange thing is that we seem to own a full license:

[root@server1 ~]# mount -t vxfs -o smartiomode=writeback /dev/vx/dsk/fusionio/lvol01 /mnt/fioa
UX:vxfs mount.vxfs: ERROR: V-3-25255: vxfs mount:SmartIO: You don't have a license to run this program
[root@server1 ~]# vxdctl license
All features are available:
 Mirroring
 Root Mirroring
 Concatenation
 Disk-spanning
 Striping
 RAID-5
 RAID-5 Snapshot
 VxSmartSync
 DMP (multipath enabled)
 CDS
 Dynamic LUN Expansion
 Hardware assisted copy
 DMP Native Support
 SMARTIO_VMREAD

You can also use vxlicrep. I have not investigated this point further, as the feature is of no immediate interest…

References

- Error Codes List

- sfcache(1M)

- SFHA Solutions 6.1 (Linux): SmartIO: support for caching on SSD

- man fscache

- Customizing the caching behavior About SmartIO read caching for applications running on VxFS file systems

- Storage Foundation DocCentral