
Preamble

Even if the standard notebook for data scientists on Hortonworks Data Platform (HDP) is Zeppelin, this population will most probably want to use Jupyter Lab/Notebook, which has gained a lot of momentum in this domain.

As you might guess, the new Hive Warehouse Connector (HWC), used to access Hive tables from Spark, brings its own set of problems when configuring Jupyter Lab/Notebook…

In short, the idea is to add extra Jupyter kernels on top of the default Python 3 one. To do this, you either create them yourself by writing a kernel.json file, or install one of the packages that help you integrate the language you want.

In this article I assume that you already have a working Anaconda installation on your server. The installation is pretty straightforward: just execute the Anaconda3-2019.10-Linux-x86_64.sh shell script (in my case) and accept the licence terms displayed.

JupyterHub installation

If, like me, you are behind a corporate proxy, the first thing to do is to configure it so that conda can download packages from the Internet:

```
(base) [root@server ~]# cat .condarc
auto_activate_base: false
proxy_servers:
  http: http://account:password@proxy_server.domain.com:proxy_port/
  https: http://account:password@proxy_server.domain.com:proxy_port/
ssl_verify: False
```
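If the proxy password contains characters such as `@`, `:` or `/`, the proxy URL above becomes ambiguous, so those characters should be percent-encoded. A minimal standard-library sketch to build the URL, assuming conda decodes encoded credentials the way its underlying requests library does; the account, password, host and port are placeholders:

```python
from urllib.parse import quote


def proxy_url(account: str, password: str, host: str, port: int) -> str:
    """Build an http proxy URL with percent-encoded credentials for .condarc."""
    # safe="" forces reserved characters such as '@', ':' and '/' to be encoded
    user = quote(account, safe="")
    secret = quote(password, safe="")
    return f"http://{user}:{secret}@{host}:{port}/"


if __name__ == "__main__":
    # Placeholder values (assumption): replace with your own account and proxy
    print(proxy_url("account", "p@ss:word", "proxy_server.domain.com", 8080))
```

The printed value can be pasted as both the `http` and `https` entries of `proxy_servers`.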

Create the JupyterHub conda environment (the name is totally up to you):

```
(base) [root@server ~]# conda create --name jupyterhub
Collecting package metadata (current_repodata.json): done
Solving environment: done

==> WARNING: A newer version of conda exists. <==
  current version: 4.7.12
  latest version: 4.8.2

Please update conda by running

    $ conda update -n base -c defaults conda

## Package Plan ##

  environment location: /opt/anaconda3/envs/jupyterhub

Proceed ([y]/n)? y

Preparing transaction: done
Verifying transaction: done
Executing transaction: done
#
# To activate this environment, use
#
#     $ conda activate jupyterhub
#
# To deactivate an active environment, use
#
#     $ conda deactivate
```

If, like me, you received a warning about an obsolete release of conda, upgrade it with:

```
(base) [root@server ~]# conda update -n base -c defaults conda
Collecting package metadata (current_repodata.json): done
Solving environment: done

## Package Plan ##

  environment location: /opt/anaconda3

  added / updated specs:
    - conda

The following packages will be downloaded:

    package                    |            build
    ---------------------------|-----------------
    backports.functools_lru_cache-1.6.1|             py_0          11 KB
    conda-4.8.2                |           py37_0         2.8 MB
    future-0.18.2              |           py37_0         639 KB
    ------------------------------------------------------------
                                           Total:         3.5 MB

The following packages will be UPDATED:

  backports.functoo~                               1.5-py_2 --> 1.6.1-py_0
  conda                                       4.7.12-py37_0 --> 4.8.2-py37_0
  future                                      0.17.1-py37_0 --> 0.18.2-py37_0

Proceed ([y]/n)? y

Downloading and Extracting Packages
future-0.18.2        | 639 KB    | ######################################## | 100%
backports.functools_ | 11 KB     | ######################################## | 100%
conda-4.8.2          | 2.8 MB    | ######################################## | 100%
Preparing transaction: done
Verifying transaction: done
Executing transaction: done
(base) [root@server ~]# conda -V
conda 4.8.2
```

Activate your newly created Conda environment and install jupyterhub and notebook inside it:

```
(base) [root@server ~]# conda activate jupyterhub
(jupyterhub) [root@server ~]# conda install -c conda-forge jupyterhub
(jupyterhub) [root@server ~]# conda install -c conda-forge notebook
```

Remark

It is also possible to install the newer JupyterLab in JupyterHub instead of Jupyter Notebook. If you do so, set c.Spawner.default_url = '/lab' to instruct JupyterHub to load JupyterLab instead of Jupyter Notebook. In the rest of this article I mix screenshots of both, but clearly the future is JupyterLab, not Jupyter Notebook. JupyterHub itself only provides the multi-user environment.

Install JupyterLab with:

```
conda install -c conda-forge jupyterlab
```

Run JupyterHub by simply typing the jupyterhub command, then open its URL at http://server.domain.com:8000. All options can, of course, be configured…

As an example, here is how to activate HTTPS for your JupyterHub using a (free) self-signed certificate. It is not optimal, but better than plain HTTP…

Generate the config file using:

```
(jupyterhub) [root@server ~]# jupyterhub --generate-config -f jupyterhub_config.py
Writing default config to: jupyterhub_config.py
```

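Generate the key and certificate with the command below (taken from the OpenSSL Cookbook). Note that, despite the .csr extension, the -x509 option makes openssl write a self-signed certificate, not a certificate signing request; without a -days option the certificate is only valid for 30 days: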

```
(jupyterhub) [root@server ~]# openssl req -new -newkey rsa:2048 -x509 -nodes -keyout root-ocsp.key -out root-ocsp.csr
Generating a RSA private key
...................................+++++
........................................+++++
writing new private key to 'root-ocsp.key'
-----
You are about to be asked to enter information that will be incorporated
into your certificate request.
What you are about to enter is what is called a Distinguished Name or a DN.
There are quite a few fields but you can leave some blank
For some fields there will be a default value,
If you enter '.', the field will be left blank.
-----
Country Name (2 letter code) [AU]:CH
State or Province Name (full name) [Some-State]:Geneva
Locality Name (eg, city) []:Geneva
Organization Name (eg, company) [Internet Widgits Pty Ltd]:Company Name
Organizational Unit Name (eg, section) []:
Common Name (e.g. server FQDN or YOUR name) []:servername.domain.com
Email Address []:
```

Finally, I configured only the following parameters in the jupyterhub_config.py configuration file (the last one only if you use JupyterLab):

```python
c.JupyterHub.bind_url = 'https://servername.domain.com'
c.JupyterHub.ssl_cert = 'root-ocsp.csr'
c.JupyterHub.ssl_key = 'root-ocsp.key'
c.Spawner.default_url = '/lab'  # Unset to keep Jupyter Notebook
```
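The certificate and key are given as relative paths, so they are resolved from the directory in which JupyterHub is started (absolute paths avoid the surprise). Before starting JupyterHub, a quick sanity check with Python's standard ssl module can confirm that the two files load and belong together; this is a sketch, not a JupyterHub feature:

```python
import ssl


def cert_and_key_match(cert_path: str, key_path: str) -> bool:
    """Return True if the certificate and private key load together."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    try:
        # Raises ssl.SSLError if the key does not match the certificate,
        # and OSError (e.g. FileNotFoundError) if a file is missing
        context.load_cert_chain(certfile=cert_path, keyfile=key_path)
    except OSError:  # ssl.SSLError is a subclass of OSError
        return False
    return True


if __name__ == "__main__":
    # File names from the openssl command above, relative to the current directory
    print(cert_and_key_match("root-ocsp.csr", "root-ocsp.key"))
```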

Simply execute the jupyterhub command to run JupyterHub; creating a service that starts at server boot is, of course, highly recommended.
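A systemd unit is one way to do that. The sketch below renders a minimal unit from Python; the conda prefix, the config directory (/root, where the commands above were run) and the unit layout are assumptions to adapt. The environment's bin directory goes on PATH because JupyterHub starts helper commands such as configurable-http-proxy and jupyterhub-singleuser by name, and WorkingDirectory matters because the certificate paths in jupyterhub_config.py are relative:

```python
from pathlib import Path


def render_unit(prefix: str, config_dir: str) -> str:
    """Render a minimal systemd unit that starts JupyterHub from a conda env."""
    bin_dir = Path(prefix) / "bin"
    config_file = Path(config_dir) / "jupyterhub_config.py"
    return "\n".join([
        "[Unit]",
        "Description=JupyterHub",
        "After=network.target",
        "",
        "[Service]",
        "User=root",
        # Lets JupyterHub find configurable-http-proxy and jupyterhub-singleuser
        f"Environment=PATH={bin_dir}:/usr/local/bin:/usr/bin:/bin",
        # Relative ssl_cert/ssl_key paths are resolved from this directory
        f"WorkingDirectory={config_dir}",
        f"ExecStart={bin_dir / 'jupyterhub'} -f {config_file}",
        "Restart=on-failure",
        "",
        "[Install]",
        "WantedBy=multi-user.target",
        "",
    ])


if __name__ == "__main__":
    # Assumed paths: adapt to your own installation
    print(render_unit("/opt/anaconda3/envs/jupyterhub", "/root"))
```

Saved for instance as /etc/systemd/system/jupyterhub.service, the unit is then activated with `systemctl daemon-reload` followed by `systemctl enable --now jupyterhub`.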

Then, when accessing the HTTPS URL, you get the login window without the HTTP warning (you will have to add the self-signed certificate as trusted in your browser).

Jupyter kernels manual configuration

Log in with an existing OS account created on the server where JupyterHub is running:

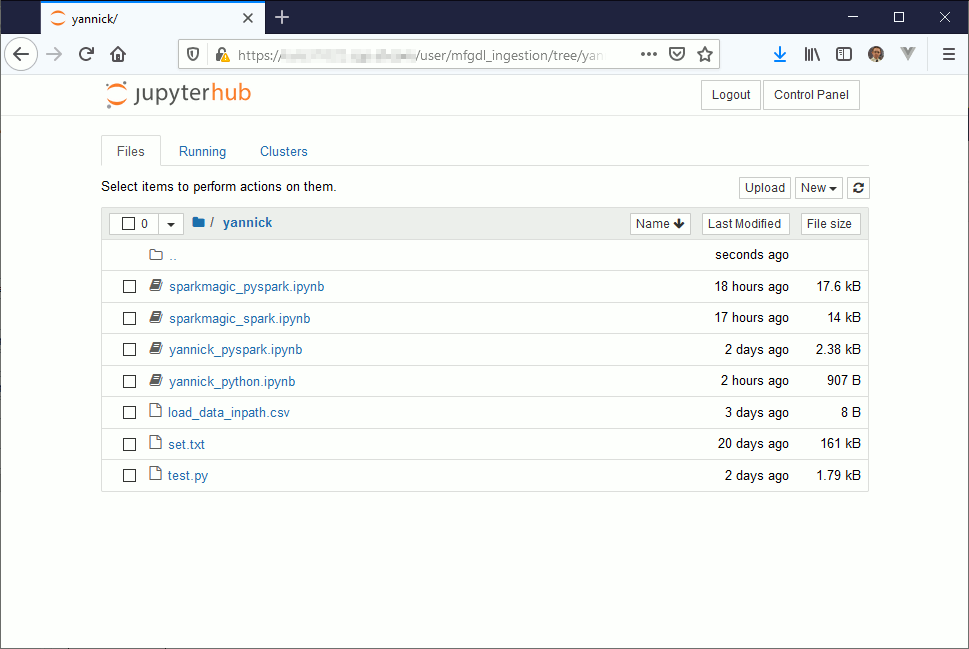

Create a new Python 3 notebook (yannick_python.ipynb in my example, but as you can see I have many others):

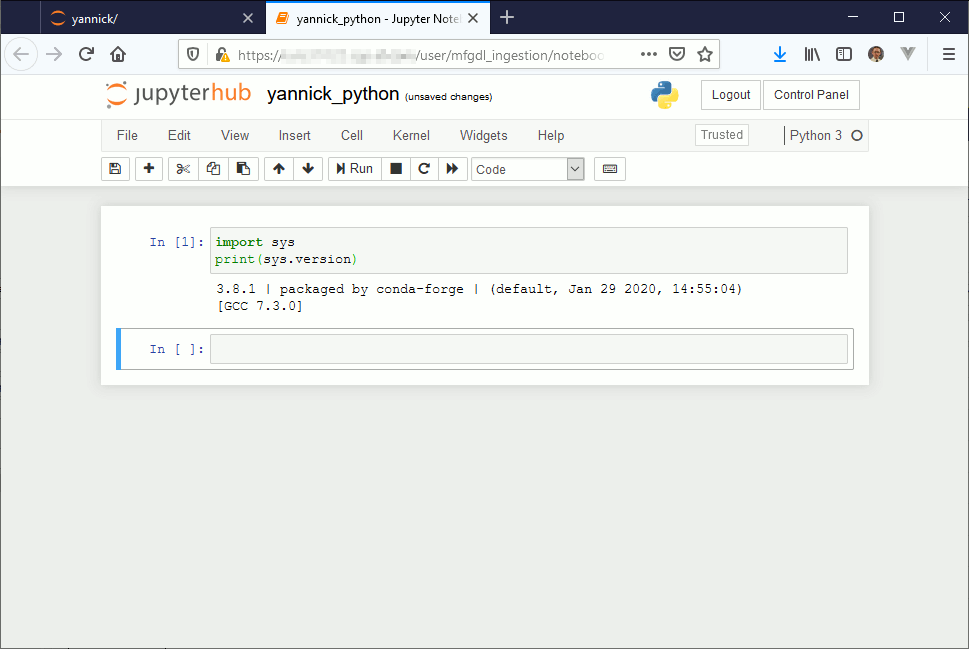

And the dummy example below should work:
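Whatever dummy code you run, a useful first cell is one that shows which interpreter backs the kernel, since that is exactly what the kernel configurations below control. A minimal sketch:

```python
import platform
import sys


def kernel_info() -> str:
    """Describe the Python interpreter backing the current kernel."""
    return f"Python {platform.python_version()} at {sys.executable}"


print(kernel_info())
```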

Obviously, our goal here is not to do Python but Spark. To manually create a PySpark kernel, create the kernel directory in the installation home of your JupyterHub environment:

```
[root@server ~]# cd /opt/anaconda3/envs/jupyterhub/share/jupyter/kernels
(jupyterhub) [root@server kernels]# ll
total 0
drwxr-xr-x 2 root root 69 Feb 18 14:20 python3
(jupyterhub) [root@server kernels]# mkdir pyspark_kernel
```

Then create the kernel.json file below in this new directory:

```
(jupyterhub) [root@server pyspark_kernel]# cat kernel.json
{
  "display_name": "PySpark_kernel",
  "language": "python",
  "argv": [
    "/opt/anaconda3/bin/python",
    "-m",
    "ipykernel",
    "-f",
    "{connection_file}"
  ],
  "env": {
    "SPARK_HOME": "/usr/hdp/current/spark2-client/",
    "PYSPARK_PYTHON": "/opt/anaconda3/bin/python",
    "PYTHONPATH": "/usr/hdp/current/spark2-client/python/:/usr/hdp/current/spark2-client/python/lib/py4j-0.10.7-src.zip",
    "PYTHONSTARTUP": "/usr/hdp/current/spark2-client/python/pyspark/shell.py",
    "PYSPARK_SUBMIT_ARGS": "--master yarn --queue llap --jars /usr/hdp/current/hive_warehouse_connector/hive-warehouse-connector-assembly-1.0.0.3.1.4.0-315.jar --py-files /usr/hdp/current/hive_warehouse_connector/pyspark_hwc-1.0.0.3.1.4.0-315.zip pyspark-shell"
  }
}
```
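Writing this file by hand is error-prone, in particular the py4j archive name, which changes with the Spark release. As a sketch (not an HDP or Jupyter tool), a small Python script can produce the same kernel.json and look up the py4j archive under SPARK_HOME; the paths, YARN queue and HWC file names are the ones used in this article and must be adapted:

```python
import glob
import json
import os


def build_pyspark_kernel(spark_home: str, python: str, hwc_jar: str, hwc_zip: str) -> dict:
    """Build a kernel.json dict equivalent to the one above."""
    spark_python = os.path.join(spark_home, "python")
    # Look up py4j-<version>-src.zip instead of hardcoding its version
    py4j = sorted(glob.glob(os.path.join(spark_python, "lib", "py4j-*-src.zip")))
    # Same submit arguments as above (queue name "llap" is this cluster's choice)
    submit_args = f"--master yarn --queue llap --jars {hwc_jar} --py-files {hwc_zip} pyspark-shell"
    return {
        "display_name": "PySpark_kernel",
        "language": "python",
        "argv": [python, "-m", "ipykernel", "-f", "{connection_file}"],
        "env": {
            "SPARK_HOME": spark_home,
            "PYSPARK_PYTHON": python,
            "PYTHONPATH": ":".join([spark_python] + py4j),
            "PYTHONSTARTUP": os.path.join(spark_python, "pyspark", "shell.py"),
            "PYSPARK_SUBMIT_ARGS": submit_args,
        },
    }


if __name__ == "__main__":
    hwc = "/usr/hdp/current/hive_warehouse_connector"  # assumed HDP 3.1.4 layout
    spec = build_pyspark_kernel(
        "/usr/hdp/current/spark2-client",
        "/opt/anaconda3/bin/python",
        f"{hwc}/hive-warehouse-connector-assembly-1.0.0.3.1.4.0-315.jar",
        f"{hwc}/pyspark_hwc-1.0.0.3.1.4.0-315.zip",
    )
    print(json.dumps(spec, indent=2))
```

Redirecting the output of this script to pyspark_kernel/kernel.json gives a file that stays correct after a Spark upgrade that changes the py4j version.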

You can confirm it is taken into account with:

```
(jupyterhub) [root@server ~]# jupyter-kernelspec list
Available kernels:
  pyspark_kernel    /opt/anaconda3/envs/jupyterhub/share/jupyter/kernels/pyspark_kernel
  python3           /opt/anaconda3/envs/jupyterhub/share/jupyter/kernels/python3
```
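The same command accepts a --json option, which makes it easy to script checks. A sketch that parses that output and flags kernels whose SPARK_HOME does not exist on the host; it assumes the JSON layout produced by jupyter_client, where each kernel name maps to its resource_dir and spec:

```python
import json
import os


def kernel_dirs(listing_json: str) -> dict:
    """Map kernel names to resource directories from `jupyter kernelspec list --json`."""
    specs = json.loads(listing_json)["kernelspecs"]
    return {name: info["resource_dir"] for name, info in specs.items()}


def missing_spark_home(listing_json: str) -> list:
    """Return kernel names whose SPARK_HOME is set but is not a directory here."""
    specs = json.loads(listing_json)["kernelspecs"]
    bad = []
    for name, info in specs.items():
        spark_home = info["spec"].get("env", {}).get("SPARK_HOME")
        if spark_home and not os.path.isdir(spark_home):
            bad.append(name)
    return bad
```

For example, pass the captured output of `jupyter kernelspec list --json` to both functions.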

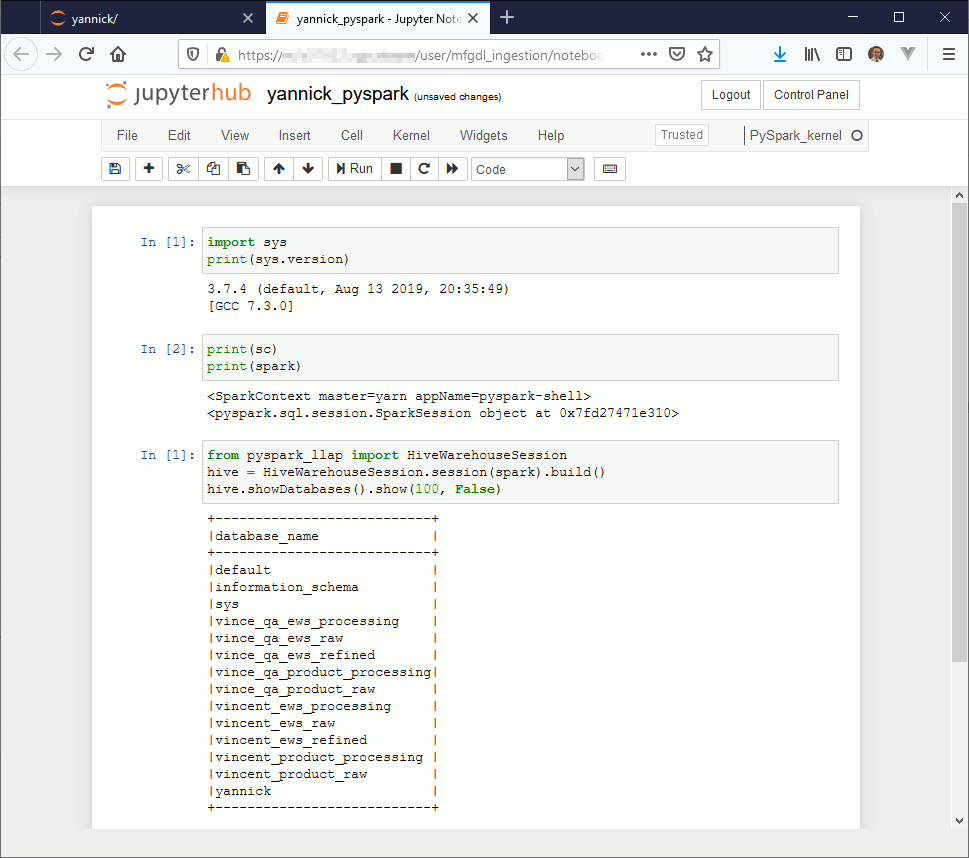

If you create a new Jupyter notebook and choose PySpark_kernel, you should be able to execute the sample code below:
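If Spark code fails in this kernel, a first check from a notebook cell is whether the variables set in kernel.json actually reached the kernel and whether the HWC files passed to --jars and --py-files exist. A minimal standard-library sketch; check_kernel_env is a hypothetical diagnostic helper, not part of PySpark:

```python
import os
import shlex

# Variables defined in the kernel.json above
REQUIRED = ("SPARK_HOME", "PYSPARK_PYTHON", "PYTHONPATH", "PYSPARK_SUBMIT_ARGS")


def check_kernel_env(env=None) -> list:
    """Return a list of problems found in the Spark-related environment."""
    env = os.environ if env is None else env
    problems = [f"{name} is not set" for name in REQUIRED if not env.get(name)]
    args = shlex.split(env.get("PYSPARK_SUBMIT_ARGS", ""))
    # --jars and --py-files take comma-separated lists of files
    for flag in ("--jars", "--py-files"):
        if flag in args and args.index(flag) + 1 < len(args):
            for path in args[args.index(flag) + 1].split(","):
                if not os.path.exists(path):
                    problems.append(f"{flag} file not found: {path}")
    return problems


print(check_kernel_env() or "Spark kernel environment looks fine")
```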

So far I have not found how to create a manual Spark Scala kernel; any insight is welcome…

Sparkmagic Jupyter kernels configuration

Among the available Jupyter kernels, there is one that (IMHO) comes up more often than the competition: Sparkmagic.

Install it with:

```
conda install -c conda-forge sparkmagic
```

Once done, install the Sparkmagic kernels with:

```
(jupyterhub) [root@server kernels]# cd /opt/anaconda3/envs/jupyterhub/lib/python3.8/site-packages/sparkmagic/kernels
(jupyterhub) [root@server kernels]# ll
total 28
-rw-rw-r-- 2 root root    46 Jan 23 14:36 __init__.py
-rw-rw-r-- 2 root root 20719 Jan 23 14:36 kernelmagics.py
drwxr-xr-x 2 root root    72 Feb 18 16:48 __pycache__
drwxr-xr-x 3 root root   104 Feb 18 16:48 pysparkkernel
drwxr-xr-x 3 root root   102 Feb 18 16:48 sparkkernel
drwxr-xr-x 3 root root   103 Feb 18 16:48 sparkrkernel
drwxr-xr-x 3 root root    95 Feb 18 16:48 wrapperkernel
(jupyterhub) [root@server kernels]# jupyter-kernelspec install sparkrkernel
[InstallKernelSpec] Installed kernelspec sparkrkernel in /usr/local/share/jupyter/kernels/sparkrkernel
(jupyterhub) [root@server kernels]# jupyter-kernelspec install sparkkernel
[InstallKernelSpec] Installed kernelspec sparkkernel in /usr/local/share/jupyter/kernels/sparkkernel
(jupyterhub) [root@server kernels]# jupyter-kernelspec install pysparkkernel
[InstallKernelSpec] Installed kernelspec pysparkkernel in /usr/local/share/jupyter/kernels/pysparkkernel
(jupyterhub) [root@server kernels]# jupyter-kernelspec list
Available kernels:
  pysparkkernel    /opt/anaconda3/envs/jupyterhub/share/jupyter/kernels/pysparkkernel
  python3          /opt/anaconda3/envs/jupyterhub/share/jupyter/kernels/python3
  sparkkernel      /opt/anaconda3/envs/jupyterhub/share/jupyter/kernels/sparkkernel
  sparkrkernel     /opt/anaconda3/envs/jupyterhub/share/jupyter/kernels/sparkrkernel
```
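The site-packages path above depends on the Python version of the environment (python3.8 here). As a sketch, the directory can be located with the standard importlib module, run from the jupyterhub environment, instead of being hardcoded:

```python
import importlib.util
import os


def package_subdir(package: str, subdir: str) -> str:
    """Return <package install dir>/<subdir> for a package importable here."""
    spec = importlib.util.find_spec(package)
    if spec is None or spec.origin is None:
        raise ModuleNotFoundError(f"{package} is not installed in this environment")
    # For a regular package, spec.origin points to its __init__.py
    return os.path.join(os.path.dirname(spec.origin), subdir)
```

With sparkmagic installed, `package_subdir("sparkmagic", "kernels")` returns the directory in which to run the jupyter-kernelspec install commands.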

Enable the server extension with:

```
(jupyterhub) [root@server ~]# jupyter serverextension enable --py sparkmagic
Enabling: sparkmagic
- Writing config: /root/.jupyter
    - Validating...
      sparkmagic 0.15.0 OK
```

In the home directory of the account you will use to connect to JupyterHub, create a .sparkmagic directory containing a config.json file copied from the example configuration provided with Sparkmagic.

In this file, modify at least the url of each kernel_xxx_credentials section to point to your Livy server name and port:

```
"kernel_python_credentials" : {
  "username": "",
  "password": "",
  "url": "http://livyserver.domain.com:8999",
  "auth": "None"
},
```
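Editing every credentials section by hand is repetitive. A sketch that points all kernel_*_credentials sections of a loaded Sparkmagic configuration at one Livy URL and saves the result as ~/.sparkmagic/config.json; the helper names are hypothetical and the section naming pattern is taken from the configuration above:

```python
import json
import os


def set_livy_url(config: dict, url: str) -> dict:
    """Set the url of every kernel_*_credentials section to the given Livy URL."""
    for key, section in config.items():
        if key.startswith("kernel_") and key.endswith("_credentials") and isinstance(section, dict):
            section["url"] = url
    return config


def save_config(config: dict, home: str) -> str:
    """Write config to <home>/.sparkmagic/config.json and return the file path."""
    target_dir = os.path.join(home, ".sparkmagic")
    os.makedirs(target_dir, exist_ok=True)
    path = os.path.join(target_dir, "config.json")
    with open(path, "w") as f:
        json.dump(config, f, indent=2)
    return path
```

Typical use: load the example configuration with json.load, call `set_livy_url(config, "http://livyserver.domain.com:8999")`, then `save_config(config, os.path.expanduser("~"))` as the JupyterHub user.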

And the part on which I spent quite a lot of time: the session_configs section, which adds the HWC connector information:

```
"session_configs": {
  "driverMemory": "1000M",
  "executorCores": 2,
  "conf": {
    "spark.jars": "file:///usr/hdp/current/hive_warehouse_connector/hive-warehouse-connector-assembly-1.0.0.3.1.4.0-315.jar",
    "spark.submit.pyFiles": "file:///usr/hdp/current/hive_warehouse_connector/pyspark_hwc-1.0.0.3.1.4.0-315.zip"
  }
},
```
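The version suffix of the HWC file names changes with each HDP release. A sketch that builds the same session_configs section by searching the HWC directory, assuming only one HWC version is installed there (the last match in sorted order is used otherwise); the function name is hypothetical:

```python
import glob
import os


def hwc_session_configs(hwc_dir: str, driver_memory: str = "1000M", executor_cores: int = 2) -> dict:
    """Build a Sparkmagic session_configs section that ships the HWC jar and Python zip."""
    jars = sorted(glob.glob(os.path.join(hwc_dir, "hive-warehouse-connector-assembly-*.jar")))
    zips = sorted(glob.glob(os.path.join(hwc_dir, "pyspark_hwc-*.zip")))
    if not jars or not zips:
        raise FileNotFoundError(f"HWC jar or zip not found in {hwc_dir}")
    return {
        "driverMemory": driver_memory,
        "executorCores": executor_cores,
        "conf": {
            # "file://" + absolute path gives the file:///... form used above
            "spark.jars": "file://" + jars[-1],
            "spark.submit.pyFiles": "file://" + zips[-1],
        },
    }
```

Called with /usr/hdp/current/hive_warehouse_connector on an edge node, it should return the section shown above, ready to be stored under the "session_configs" key.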

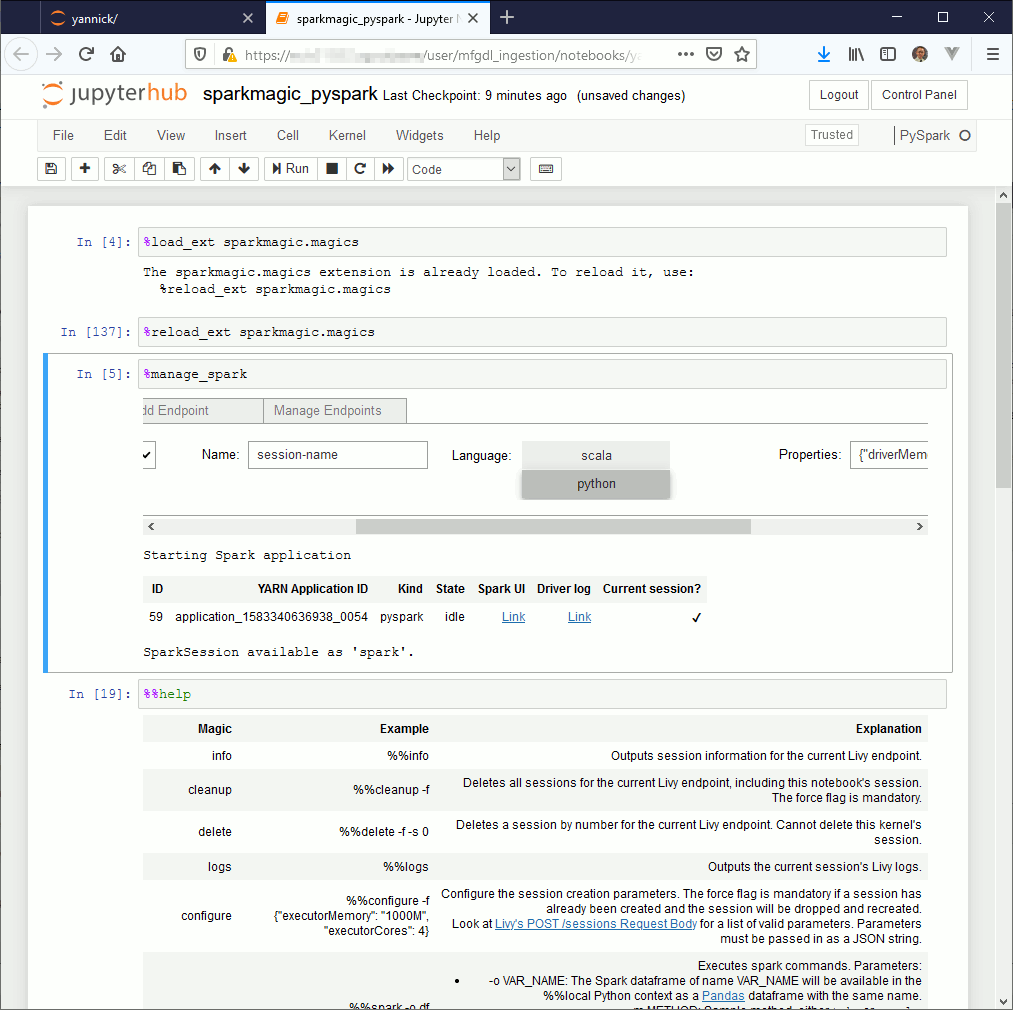

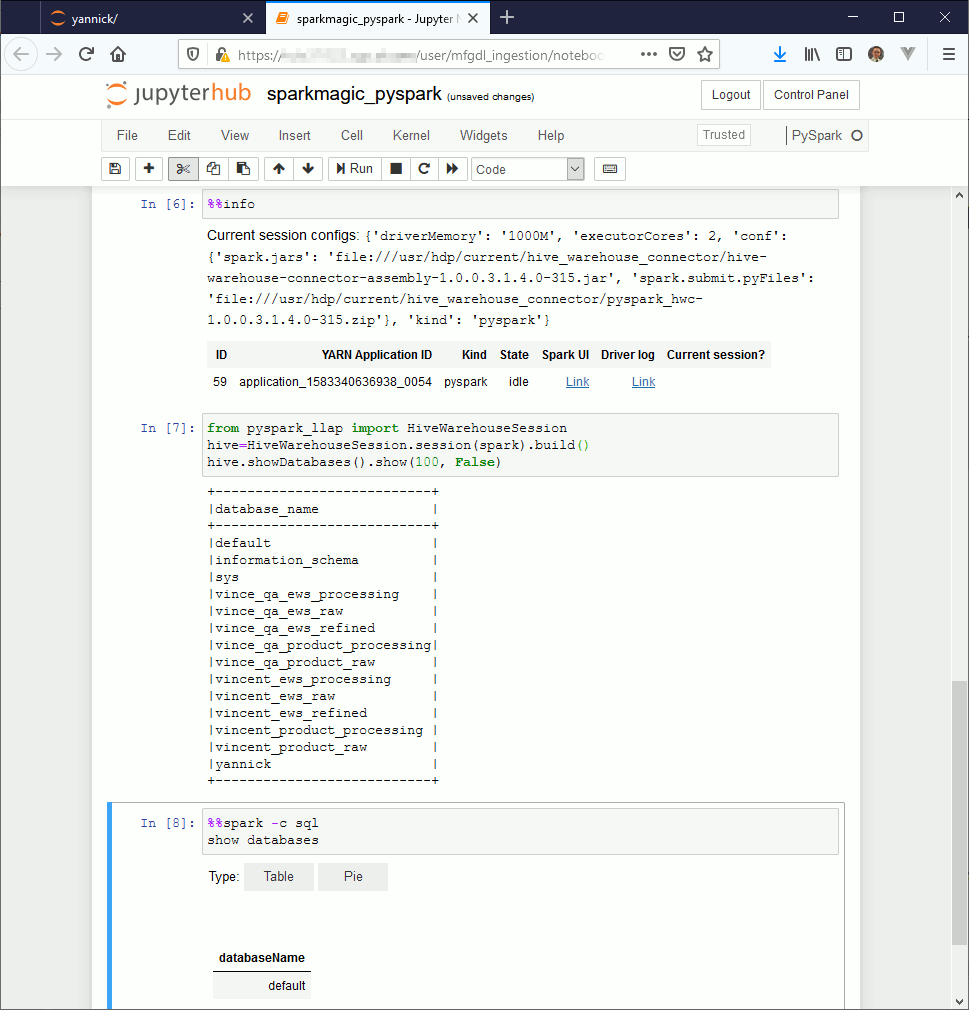

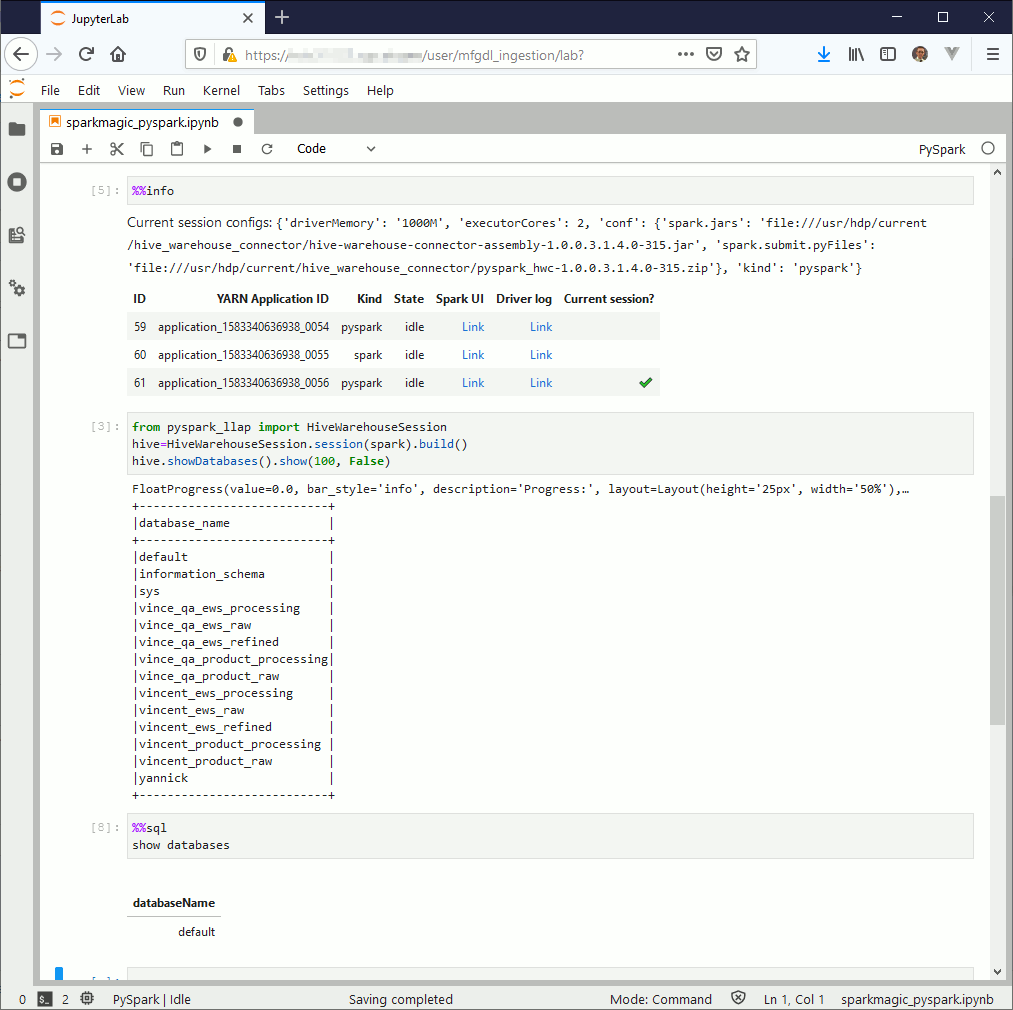

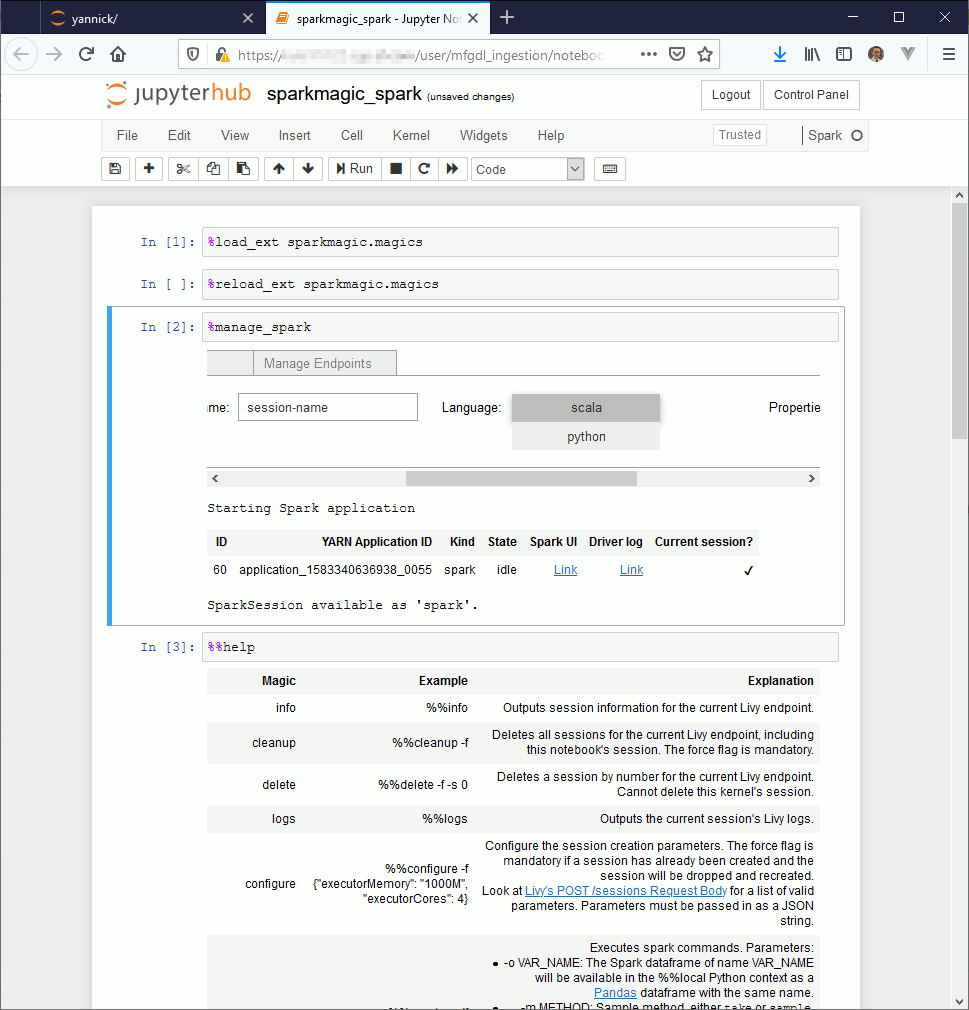

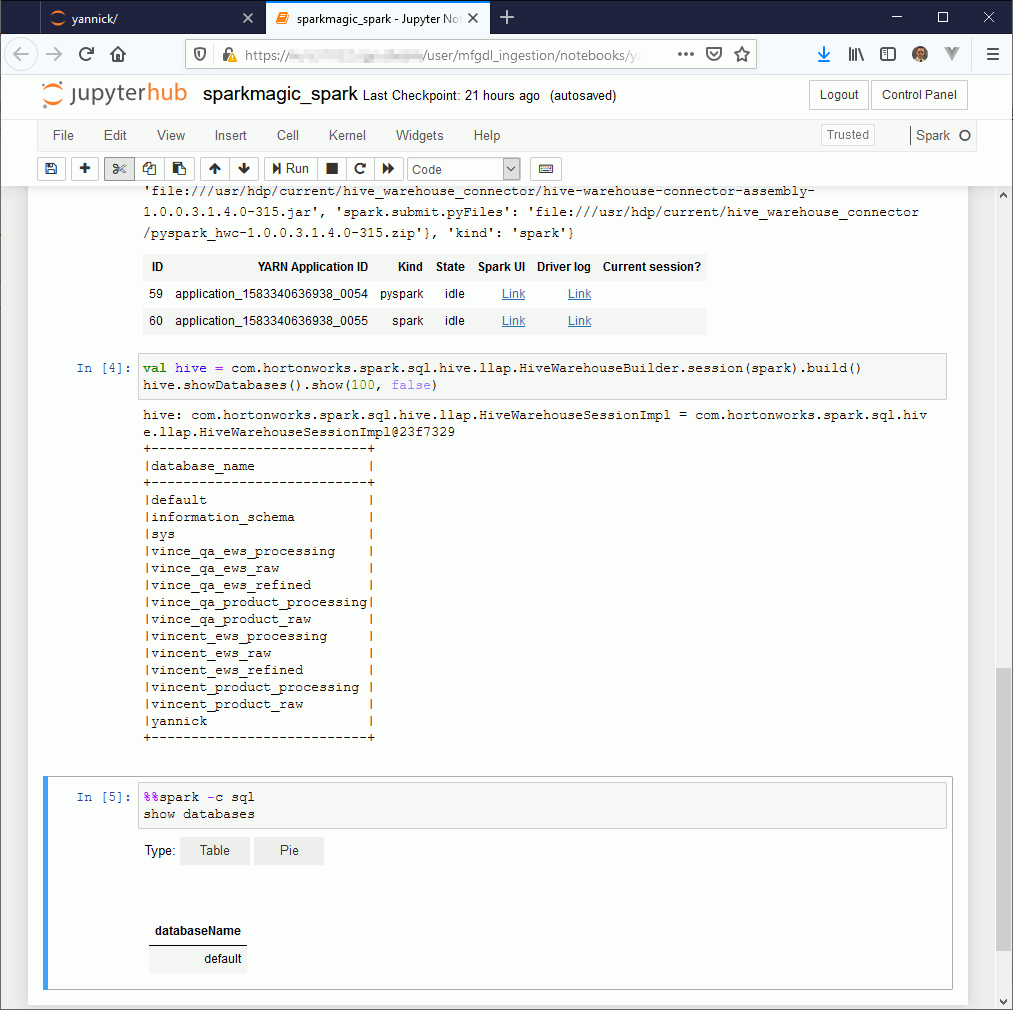

If you then create a new notebook with the PySpark or Spark kernel, depending on whether you want to use Python or Scala, you should be able to run the examples below.

If you use Jupyter Notebook, the first command to execute is the magic command %load_ext sparkmagic.magics; then create a session with the magic command %manage_spark and select either Scala or Python (the R language remains an open question, but I do not use it). If you use JupyterLab, you can start working directly, as the %manage_spark command does not work there. The Livy session should be created automatically when the first command is executed; the same should apply to Jupyter Notebook, but I had a few issues with this, so…

A few other magic commands are quite interesting:

- %%help to get the list of available commands

- %%info to see whether your Livy session is still active (many issues can come from this)
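Outside the notebook, Livy's REST API can also be queried directly: GET /sessions returns the sessions known to the server with their id, kind and state. A minimal standard-library sketch, assuming the Livy URL configured above and no authentication, as in that configuration:

```python
import json
from urllib.request import urlopen


def summarize_sessions(payload: dict) -> list:
    """Turn a Livy GET /sessions response into (id, kind, state) tuples."""
    return [(s.get("id"), s.get("kind"), s.get("state")) for s in payload.get("sessions", [])]


def livy_sessions(base_url: str, timeout: float = 10.0) -> list:
    """Query Livy's /sessions endpoint and summarize the result."""
    with urlopen(base_url.rstrip("/") + "/sessions", timeout=timeout) as resp:
        return summarize_sessions(json.load(resp))
```

For example, `livy_sessions("http://livyserver.domain.com:8999")` lists the sessions; one in the dead or error state usually explains a notebook that stopped responding.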

PySpark example (the SQL part is still broken due to HWC):

Scala example (same story for SQL):
