Preamble

How do you configure Hive Warehouse Connector (HWC) integration in a Zeppelin notebook? Since we upgraded to Hortonworks Data Platform (HDP) 3.1.4, we have had to fight everywhere to integrate the new way of working in Spark with HWC. The connector itself is not very complex to use: it requires only a small modification of your Spark code (Scala or Python). What is complex is integrating it with the existing tools, and working out how to launch PySpark and spark-shell (Scala) with it in your environment.

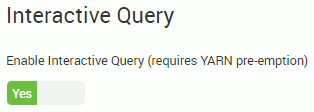

On top of this, as clearly stated in the official documentation, you need HWC and LLAP (Live Long And Process, or Low-Latency Analytical Processing) to read Hive managed tables from Spark, which is one of the first operations you will do in most of your Spark scripts. So activate and configure LLAP in Ambari (number of nodes, memory, concurrent queries).

HWC on the command line

Before jumping to Zeppelin, let’s quickly see how you now run Spark on the command line with HWC.

You should already have configured the Spark parameters below (and reverted the hive.fetch.task.conversion value; see my other article on this):

- spark.hadoop.hive.llap.daemon.service.hosts = @llap0

- spark.hadoop.hive.zookeeper.quorum = zookeeper01.domain.com:2181,zookeeper03.domain.com:2181,zookeeper03.domain.com:2181

- spark.sql.hive.hiveserver2.jdbc.url = jdbc:hive2://zookeeper01.domain.com:2181,zookeeper03.domain.com:2181,zookeeper03.domain.com:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2-interactive
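The three settings above repeat the same ZooKeeper host list, so a typo in one of them is easy to miss. Here is a minimal Python sketch that derives all three from a single list. The function name `hwc_spark_conf` and the `zk1`/`zk2` hostnames are hypothetical; the property names, the `@llap0` value and the `hiveserver2-interactive` namespace are the ones listed above.

```python
def hwc_spark_conf(zk_hosts, port=2181, llap_app="@llap0",
                   namespace="hiveserver2-interactive"):
    """Build the three HWC-related Spark settings from one ZooKeeper host list."""
    if not zk_hosts:
        raise ValueError("at least one ZooKeeper host is required")
    # host1:2181,host2:2181,... shared by the quorum and the JDBC URL
    quorum = ",".join(f"{host}:{port}" for host in zk_hosts)
    jdbc_url = (
        f"jdbc:hive2://{quorum}/;serviceDiscoveryMode=zooKeeper;"
        f"zooKeeperNamespace={namespace}"
    )
    return {
        "spark.hadoop.hive.llap.daemon.service.hosts": llap_app,
        "spark.hadoop.hive.zookeeper.quorum": quorum,
        "spark.sql.hive.hiveserver2.jdbc.url": jdbc_url,
    }


if __name__ == "__main__":
    # Hypothetical hostnames, for illustration only.
    hosts = ["zk1.example.com", "zk2.example.com"]
    for key, value in hwc_spark_conf(hosts).items():
        print(f"{key} = {value}")
```

You can paste the printed lines into Ambari, or pass them as `--conf key=value` options when launching Spark.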

To run spark-shell you must now add the jar file below (in this example I am in YARN mode, on the llap YARN queue):

spark-shell --master yarn --queue llap --jars /usr/hdp/current/hive_warehouse_connector/hive-warehouse-connector-assembly-1.0.0.3.1.4.0-315.jar

To check that it works, you can use the test code below:

val hive = com.hortonworks.spark.sql.hive.llap.HiveWarehouseBuilder.session(spark).build()
hive.showDatabases().show(100, false)

To run pyspark you must add the jar file below (again in YARN mode, on the llap YARN queue) as well as the Python zip file:

pyspark --master yarn --queue llap --jars /usr/hdp/current/hive_warehouse_connector/hive-warehouse-connector-assembly-1.0.0.3.1.4.0-315.jar --py-files /usr/hdp/current/hive_warehouse_connector/pyspark_hwc-1.0.0.3.1.4.0-315.zip

To check that all is good, you can use the script below:

from pyspark_llap import HiveWarehouseSession
hive = HiveWarehouseSession.session(spark).build()
hive.showDatabases().show(10, False)
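Listing databases only proves that the connector loads. The real goal is reading a Hive managed table, and a small helper around the session's `executeQuery` method could look like the sketch below. The helper name `preview_table` and the table used in the usage comment are hypothetical. `hive` is assumed to be the `HiveWarehouseSession` object built in the test script.

```python
def preview_table(hive, database, table, limit=10):
    """Return a DataFrame holding the first `limit` rows of a Hive managed table.

    `hive` is expected to be a HiveWarehouseSession (built with
    HiveWarehouseSession.session(spark).build()).
    """
    if limit <= 0:
        raise ValueError("limit must be a positive integer")
    # Identifiers are interpolated as-is, so only pass trusted names.
    sql = f"SELECT * FROM {database}.{table} LIMIT {int(limit)}"
    return hive.executeQuery(sql)


# Usage inside a pyspark session started with the HWC jar and zip
# (hypothetical table name):
#   preview_table(hive, "default", "my_managed_table").show()
```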

To use spark-submit you do exactly the same thing:

spark-submit --master yarn --queue llap --jars /usr/hdp/current/hive_warehouse_connector/hive-warehouse-connector-assembly-1.0.0.3.1.4.0-315.jar --py-files /usr/hdp/current/hive_warehouse_connector/pyspark_hwc-1.0.0.3.1.4.0-315.zip script_to_execute
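The three command lines differ only in the tool name, the `--py-files` option and the trailing script, so they are easy to generate rather than retype. This is a minimal sketch, assuming the HDP 3.1.4 paths and version string used in this post. The function name `hwc_command` is hypothetical.

```python
import shlex

# Paths and version taken from the commands in this post; adjust for your HDP release.
HWC_DIR = "/usr/hdp/current/hive_warehouse_connector"
HWC_VERSION = "1.0.0.3.1.4.0-315"


def hwc_command(tool, script=None, queue="llap"):
    """Return the argument list to launch `tool` on YARN with HWC attached."""
    if tool not in ("spark-shell", "pyspark", "spark-submit"):
        raise ValueError(f"unsupported tool: {tool}")
    argv = [
        tool, "--master", "yarn", "--queue", queue,
        "--jars", f"{HWC_DIR}/hive-warehouse-connector-assembly-{HWC_VERSION}.jar",
    ]
    # The Scala shell does not need the Python zip.
    if tool != "spark-shell":
        argv += ["--py-files", f"{HWC_DIR}/pyspark_hwc-{HWC_VERSION}.zip"]
    if script is not None:
        argv.append(script)
    return argv


if __name__ == "__main__":
    print(shlex.join(hwc_command("spark-submit", "script_to_execute")))
```

Returning a list rather than a string lets you pass the result directly to `subprocess.run` without shell quoting issues.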

Zeppelin Spark2 interpreter configuration

To make the Zeppelin Spark2 interpreter work with HWC, you need to add two parameters to its configuration:

- spark.jars = /usr/hdp/current/hive_warehouse_connector/hive-warehouse-connector-assembly-1.0.0.3.1.4.0-315.jar

- spark.submit.pyFiles = /usr/hdp/current/hive_warehouse_connector/pyspark_hwc-1.0.0.3.1.4.0-315.zip

Note:

Notice the uppercase F in spark.submit.pyFiles. I lost half a day because some blog articles on the internet have misspelled it.
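Because a wrongly cased property name is the kind of mistake that costs half a day, a quick check over the interpreter properties can catch it early. This is a minimal sketch; the function name `find_miscased_keys` is hypothetical, and the expected keys are the two parameters listed above.

```python
EXPECTED_KEYS = ("spark.jars", "spark.submit.pyFiles")


def find_miscased_keys(properties, expected=EXPECTED_KEYS):
    """Return {wrong_key: correct_key} for keys that match only when case is ignored."""
    by_lower = {key.lower(): key for key in expected}
    return {
        key: by_lower[key.lower()]
        for key in properties
        if key.lower() in by_lower and key != by_lower[key.lower()]
    }


if __name__ == "__main__":
    props = {"spark.jars": "/path/to/hwc.jar", "spark.submit.pyfiles": "/path/to/hwc.zip"}
    for wrong, right in find_miscased_keys(props).items():
        print(f"'{wrong}' should be spelled '{right}'")
```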

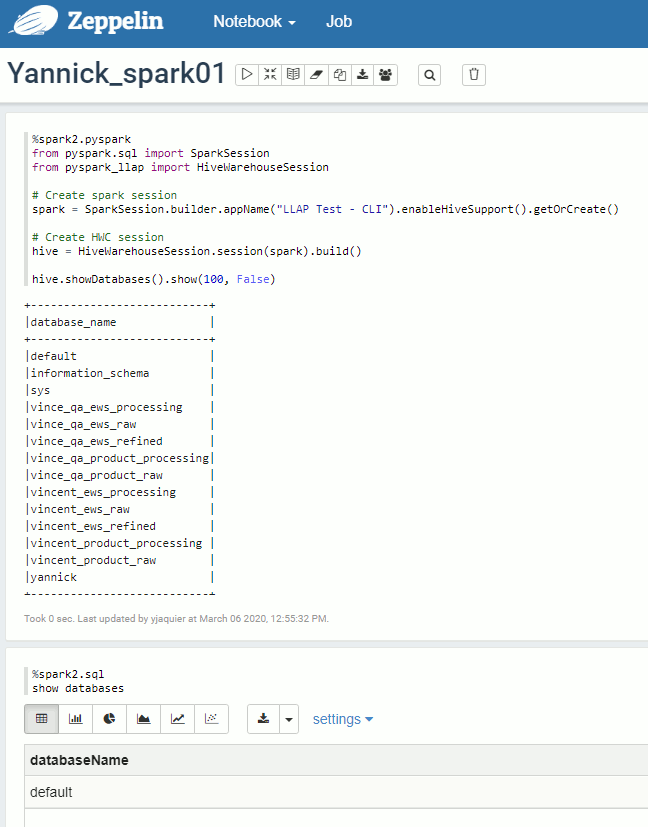

Save and restart the interpreter. If you then test the code below in a new notebook, you should get a result (and not just the default database name):

Remark:

As you can see above, the %spark2.sql option of the interpreter is not configured to use HWC. So far I have not found how to overcome this. This could be an issue, because this interpreter option is a nice feature for making graphs directly from SQL commands…

It also works well in Scala:

Zeppelin Livy2 interpreter configuration

You might wonder why I configure the Livy2 interpreter when the first one is working fine. Well, I had to configure it for the JupyterHub Sparkmagic configuration that we will see in another blog post, so here it is…

To make the Zeppelin Livy2 interpreter work with HWC, you need to add two parameters to its configuration:

- livy.spark.jars = file:///usr/hdp/current/hive_warehouse_connector/hive-warehouse-connector-assembly-1.0.0.3.1.4.0-315.jar

- livy.spark.submit.pyFiles = file:///usr/hdp/current/hive_warehouse_connector/pyspark_hwc-1.0.0.3.1.4.0-315.zip

Note:

The second parameter was just a good guess based on the Spark2 interpreter setting. Also note the file:// prefix, which instructs the interpreter to look on the local file system and not on HDFS.
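The file:// form is easy to get wrong by hand (three slashes for an absolute path). Here is a minimal sketch that derives the two Livy2 interpreter values from plain local paths using the standard library. The function name `livy_interpreter_properties` is hypothetical.

```python
from pathlib import PurePosixPath


def livy_interpreter_properties(jar_path, py_zip_path):
    """Return the two Livy2 interpreter properties as file:// URIs.

    Both arguments must be absolute local paths; as_uri() raises
    ValueError for relative ones.
    """
    return {
        "livy.spark.jars": PurePosixPath(jar_path).as_uri(),
        "livy.spark.submit.pyFiles": PurePosixPath(py_zip_path).as_uri(),
    }


if __name__ == "__main__":
    base = "/usr/hdp/current/hive_warehouse_connector"
    props = livy_interpreter_properties(
        f"{base}/hive-warehouse-connector-assembly-1.0.0.3.1.4.0-315.jar",
        f"{base}/pyspark_hwc-1.0.0.3.1.4.0-315.zip",
    )
    for key, value in props.items():
        print(f"{key} = {value}")
```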

Also ensure the Livy URL is correctly set to the server (and port) of your Livy process:

- zeppelin.livy.url = http://livyserver.domain.com:8999
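To check the Livy URL independently of Zeppelin, you can prepare a session-creation request against Livy's REST endpoint (`POST /sessions`) with the same jar and zip. The sketch below only builds the request and does not send it. The function name `livy_session_request` is hypothetical, and it assumes an unsecured Livy server (no Kerberos/SPNEGO handling).

```python
import json
from urllib.request import Request


def livy_session_request(livy_url, jar_uri, py_zip_uri, queue="llap"):
    """Build (but do not send) a POST /sessions request for a PySpark session with HWC."""
    body = {
        "kind": "pyspark",
        "queue": queue,
        "jars": [jar_uri],
        "pyFiles": [py_zip_uri],
    }
    return Request(
        url=livy_url.rstrip("/") + "/sessions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    base = "file:///usr/hdp/current/hive_warehouse_connector"
    req = livy_session_request(
        "http://livyserver.domain.com:8999",
        f"{base}/hive-warehouse-connector-assembly-1.0.0.3.1.4.0-315.jar",
        f"{base}/pyspark_hwc-1.0.0.3.1.4.0-315.zip",
    )
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Passing the request to `urllib.request.urlopen` would actually create the session, which is a quick way to tell a wrong URL apart from a Zeppelin-side problem.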

I also ran into this issue:

org.apache.zeppelin.livy.LivyException: Error with 400 StatusCode: "requirement failed: Local path /usr/hdp/current/hive_warehouse_connector/hive-warehouse-connector-assembly-1.0.0.3.1.4.0-315.jar cannot be added to user sessions."
    at org.apache.zeppelin.livy.BaseLivyInterpreter.callRestAPI(BaseLivyInterpreter.java:755)
    at org.apache.zeppelin.livy.BaseLivyInterpreter.createSession(BaseLivyInterpreter.java:337)
    at org.apache.zeppelin.livy.BaseLivyInterpreter.initLivySession(BaseLivyInterpreter.java:209)
    at org.apache.zeppelin.livy.LivySharedInterpreter.open(LivySharedInterpreter.java:59)
    at org.apache.zeppelin.interpreter.LazyOpenInterpreter.open(LazyOpenInterpreter.java:69)
    at org.apache.zeppelin.livy.BaseLivyInterpreter.getLivySharedInterpreter(BaseLivyInterpreter.java:190)
    at org.apache.zeppelin.livy.BaseLivyInterpreter.open(BaseLivyInterpreter.java:163)
    at org.apache.zeppelin.interpreter.LazyOpenInterpreter.open(LazyOpenInterpreter.java:69)
    at org.apache.zeppelin.interpreter.remote.RemoteInterpreterServer$InterpretJob.jobRun(RemoteInterpreterServer.java:617)
    at org.apache.zeppelin.scheduler.Job.run(Job.java:188)
    at org.apache.zeppelin.scheduler.FIFOScheduler$1.run(FIFOScheduler.java:140)
    at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
    at java.util.concurrent.FutureTask.run(FutureTask.java:266)
    at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)
    at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
    at java.lang.Thread.run(Thread.java:745)

To solve it, add the configuration below to your Livy2 server (Custom livy2-conf in Ambari):

- livy.file.local-dir-whitelist = /usr/hdp/current/hive_warehouse_connector/
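The error message suggests that Livy refuses any local file that does not live under a whitelisted directory. The sketch below approximates that rule so you can check your paths before restarting anything. It is my simplified reading of the behavior, not Livy's actual implementation, and the function name `is_whitelisted` is hypothetical. It compares paths textually and does not resolve symbolic links.

```python
from pathlib import PurePosixPath


def is_whitelisted(local_path, whitelist_dirs):
    """Return True if `local_path` sits somewhere below one of `whitelist_dirs`."""
    path = PurePosixPath(local_path)
    for entry in whitelist_dirs:
        # PurePosixPath drops the trailing slash, so "/a/b/" and "/a/b" compare equal.
        if PurePosixPath(entry) in path.parents:
            return True
    return False


if __name__ == "__main__":
    whitelist = ["/usr/hdp/current/hive_warehouse_connector/"]
    jar = ("/usr/hdp/current/hive_warehouse_connector/"
           "hive-warehouse-connector-assembly-1.0.0.3.1.4.0-315.jar")
    print(is_whitelisted(jar, whitelist))
    print(is_whitelisted("/tmp/other.jar", whitelist))
```

Comparing against the path's parent directories, rather than using a plain string prefix, avoids accepting a sibling directory whose name merely starts with the whitelisted one.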

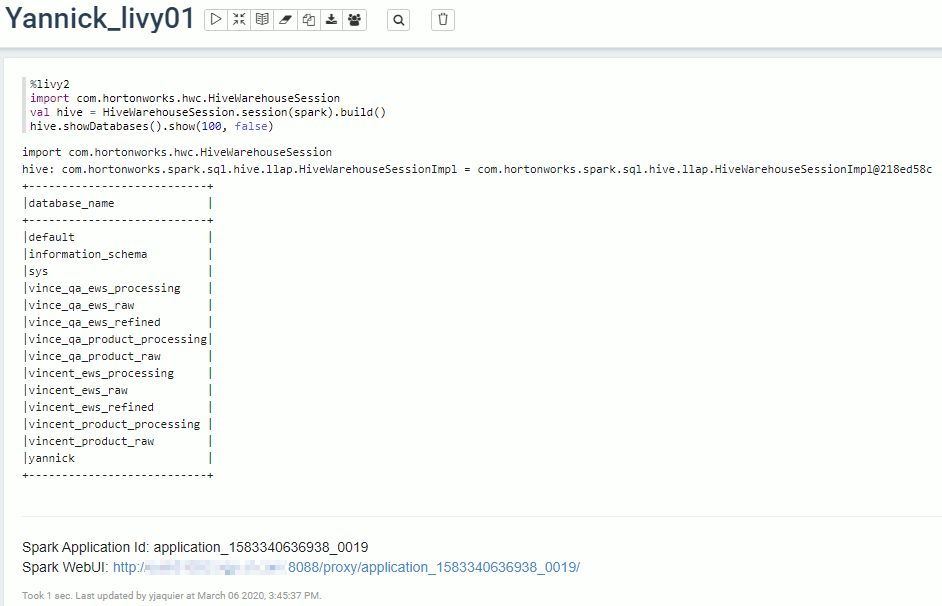

Working in Scala:

In Python:

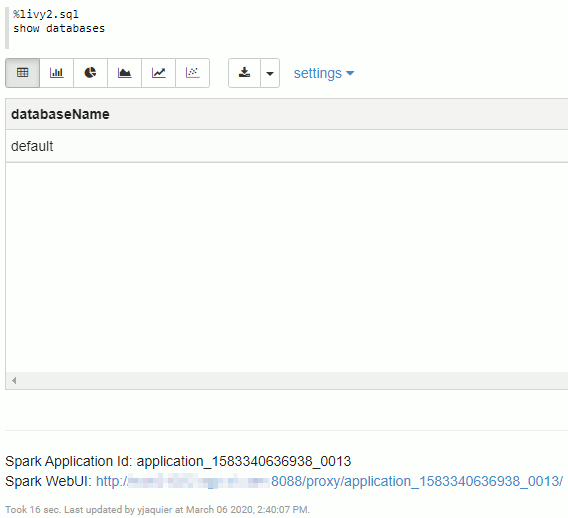

But, as with the Spark2 interpreter, the %livy2.sql option of the interpreter is broken, for the exact same reason:

References

- Apache Spark-Apache Hive connection configuration

- HiveWarehouseConnector

- How to Submit Spark Application through Livy REST API

- Invoke Livy with pyFiles attribute

- Spark HWC integration – HDP 3 Secure cluster