Table of contents

Preamble

In one of our processes we are daily overwriting a table (a partition of this table to be accurate) and, by good luck, we noticed the table size kept increasing till reaching a size that was bigger than her sibling history one !! We did a quick check on HDFS and saw that old files have not been deleted…

I have been able to reproduce the issue in a simple example and I think I have found the opened bug for this… This looks pretty amazing to find such bug as I feel Hadoop has reach a good maturity level…

We are running Hortonworks Data Platform (HDP) 3.1.4. So Hive release is 3.1.0.

INSERT OVERWRITE test case

I have below creation script (the database name is yannick):

DROP TABLE yannick.test01 purge; CREATE TABLE yannick.test01(val int, descr string) partitioned BY (fab string, lot_partition string) stored AS orc; INSERT INTO TABLE yannick.test01 PARTITION(fab='GVA', lot_partition='TEST') VALUES(1, 'One'); |

Initially from HDFS standpoint things are crystal clear:

[hdfs@client ~]$ hdfs dfs -ls /warehouse/tablespace/managed/hive/yannick.db/test01/fab=GVA/lot_partition=TEST/ Found 1 items drwxrwx---+ - hive hadoop 0 2020-04-06 14:55 /warehouse/tablespace/managed/hive/yannick.db/test01/fab=GVA/lot_partition=TEST/delta_0000001_0000001_0000 [hdfs@client ~]$ hdfs dfs -ls /warehouse/tablespace/managed/hive/yannick.db/test01/fab=GVA/lot_partition=TEST/* Found 2 items -rw-rw----+ 3 hive hadoop 1 2020-04-06 14:55 /warehouse/tablespace/managed/hive/yannick.db/test01/fab=GVA/lot_partition=TEST/delta_0000001_0000001_0000/_orc_acid_version -rw-rw----+ 3 hive hadoop 696 2020-04-06 14:55 /warehouse/tablespace/managed/hive/yannick.db/test01/fab=GVA/lot_partition=TEST/delta_0000001_0000001_0000/bucket_00000 |

So one directory with one ORC file.

I have then tried to know from Hive standpoint which directory(ie) is used by this table. I initially tried directly querying our Hive metastore (MySQL):

mysql> select TBLS.TBL_NAME, PARTITIONS.PART_NAME, SDS.LOCATION -> from SDS, TBLS, PARTITIONS, DBS -> where TBLS.TBL_NAME='test01' -> and DBS.NAME = 'yannick' -> and TBLS.DB_ID = DBS.DB_ID -> and PARTITIONS.SD_ID = SDS.SD_ID -> and TBLS.TBL_ID = PARTITIONS.TBL_ID -> order by 1,2; +----------+----------------------------+------------------------------------------------------------------------------------------------------------------+ | TBL_NAME | PART_NAME | LOCATION | +----------+----------------------------+------------------------------------------------------------------------------------------------------------------+ | test01 | fab=GVA/lot_partition=TEST | hdfs://namenode01.domain.com:8020/warehouse/tablespace/managed/hive/yannick.db/test01/fab=GVA/lot_partition=TEST | +----------+----------------------------+------------------------------------------------------------------------------------------------------------------+ 1 row in set (0.00 sec) |

But only the root folder is given and I have not been able to find in Hive metastore a table displaying this level of detail. The solution is simply coming from Hive virtual columns:

0: jdbc:hive2://zookeeper01.domain.com:2181,zoo> SELECT input__file__name FROM yannick.test01 WHERE fab="GVA" AND lot_partition="TEST"; +----------------------------------------------------+ | input__file__name | +----------------------------------------------------+ | hdfs://namenode01.domain.com:8020/warehouse/TABLESPACE/managed/hive/yannick.db/test01/fab=GVA/lot_partition=TEST/delta_0000001_0000001_0000/bucket_00000 | +----------------------------------------------------+ 1 ROW selected (0.431 seconds) |

INSERT OVERWRITE does not delete old directories

If I INSERT OVERWRITE in this table in same exact partition I’m expecting Hive to do HDFS cleaning automatically and I surely not expect to have old folder kept forever. Unfortunately this is what happens if I insert overwrite in same partition:

INSERT overwrite TABLE yannick.test01 PARTITION(fab='GVA', lot_partition='TEST') VALUES(2,'Two'); |

If I select used ORC files I get, as expected:

0: jdbc:hive2://zookeeper01.domain.com:2181,zoo> SELECT input__file__name FROM yannick.test01 WHERE fab="GVA" AND lot_partition="TEST"; INFO : Compiling command(queryId=hive_20200406150529_c05aac38-6933-4a8e-b7ec-6ae9016e67f0): SELECT input__file__name FROM yannick.test01 WHERE fab="GVA" AND lot_partition="TEST" INFO : Semantic Analysis Completed (retrial = FALSE) INFO : RETURNING Hive SCHEMA: SCHEMA(fieldSchemas:[FieldSchema(name:input__file__name, TYPE:string, COMMENT:NULL)], properties:NULL) INFO : Completed compiling command(queryId=hive_20200406150529_c05aac38-6933-4a8e-b7ec-6ae9016e67f0); TIME taken: 0.114 seconds INFO : Executing command(queryId=hive_20200406150529_c05aac38-6933-4a8e-b7ec-6ae9016e67f0): SELECT input__file__name FROM yannick.test01 WHERE fab="GVA" AND lot_partition="TEST" INFO : Completed executing command(queryId=hive_20200406150529_c05aac38-6933-4a8e-b7ec-6ae9016e67f0); TIME taken: 0.002 seconds INFO : OK +----------------------------------------------------+ | input__file__name | +----------------------------------------------------+ | hdfs://namenode01.domain.com:8020/warehouse/TABLESPACE/managed/hive/yannick.db/test01/fab=GVA/lot_partition=TEST/base_0000002/bucket_00000 | +----------------------------------------------------+ 1 ROW selected (0.211 seconds) |

But if you look at HDFS level:

[hdfs@client ~]$ hdfs dfs -ls /warehouse/tablespace/managed/hive/yannick.db/test01/fab=GVA/lot_partition=TEST/ Found 2 items drwxrwx---+ - hive hadoop 0 2020-04-06 15:04 /warehouse/tablespace/managed/hive/yannick.db/test01/fab=GVA/lot_partition=TEST/base_0000002 drwxrwx---+ - hive hadoop 0 2020-04-06 14:55 /warehouse/tablespace/managed/hive/yannick.db/test01/fab=GVA/lot_partition=TEST/delta_0000001_0000001_0000 [hdfs@client ~]$ hdfs dfs -ls /warehouse/tablespace/managed/hive/yannick.db/test01/fab=GVA/lot_partition=TEST/* Found 2 items -rw-rw----+ 3 hive hadoop 1 2020-04-06 15:04 /warehouse/tablespace/managed/hive/yannick.db/test01/fab=GVA/lot_partition=TEST/base_0000002/_orc_acid_version -rw-rw----+ 3 hive hadoop 696 2020-04-06 15:04 /warehouse/tablespace/managed/hive/yannick.db/test01/fab=GVA/lot_partition=TEST/base_0000002/bucket_00000 Found 2 items -rw-rw----+ 3 hive hadoop 1 2020-04-06 14:55 /warehouse/tablespace/managed/hive/yannick.db/test01/fab=GVA/lot_partition=TEST/delta_0000001_0000001_0000/_orc_acid_version -rw-rw----+ 3 hive hadoop 696 2020-04-06 14:55 /warehouse/tablespace/managed/hive/yannick.db/test01/fab=GVA/lot_partition=TEST/delta_0000001_0000001_0000/bucket_00000 |

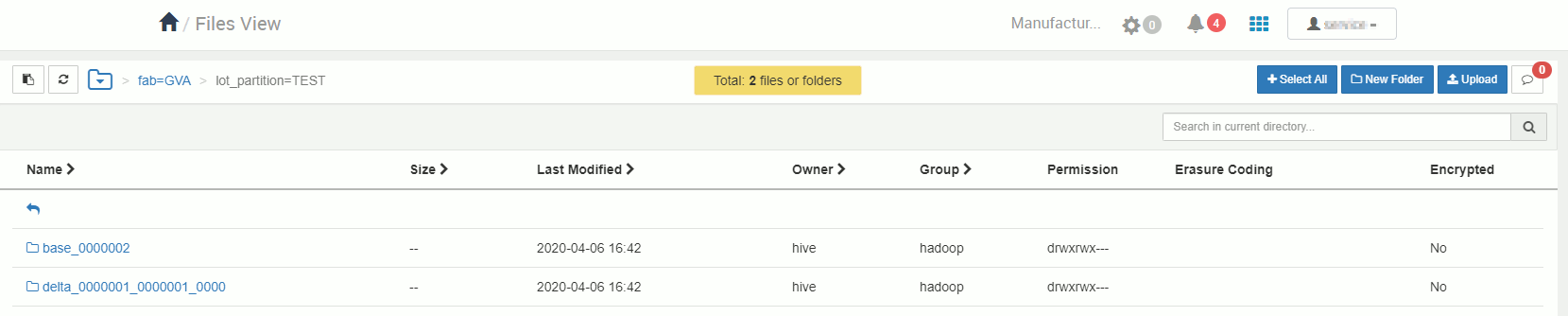

This can also be done with the File View interface in Ambari:

The old former directory has not been deleted and this happen as often as you insert overwrite…

We have also tried to play with auto.purge table property:

As of Hive 2.3.0 (HIVE-15880), if the table has TBLPROPERTIES (“auto.purge”=”true”) the previous data of the table is not moved to Trash when INSERT OVERWRITE query is run against the table. This functionality is applicable only for managed tables (see managed tables) and is turned off when “auto.purge” property is unset or set to false.

For example:

0: jdbc:hive2://zookeeper01.domain.com:2181,zoo> ALTER TABLE yannick.test01 SET TBLPROPERTIES ("auto.purge" = "true"); No ROWS affected (0.174 seconds) 0: jdbc:hive2://zookeeper01.domain.com:2181,zoo> describe formatted yannick.test01; +-------------------------------+----------------------------------------------------+-----------------------------+ | col_name | data_type | COMMENT | +-------------------------------+----------------------------------------------------+-----------------------------+ | # col_name | data_type | COMMENT | | val | int | | | descr | string | | | | NULL | NULL | | # PARTITION Information | NULL | NULL | | # col_name | data_type | COMMENT | | fab | string | | | lot_partition | string | | | | NULL | NULL | | # Detailed TABLE Information | NULL | NULL | | DATABASE: | yannick | NULL | | OwnerType: | USER | NULL | | Owner: | hive | NULL | | CreateTime: | Mon Apr 06 14:55:48 CEST 2020 | NULL | | LastAccessTime: | UNKNOWN | NULL | | Retention: | 0 | NULL | | Location: | hdfs://namenode01.domain.com:8020/warehouse/TABLESPACE/managed/hive/yannick.db/test01 | NULL | | TABLE TYPE: | MANAGED_TABLE | NULL | | TABLE Parameters: | NULL | NULL | | | COLUMN_STATS_ACCURATE | {\"BASIC_STATS\":\"true\"} | | | auto.purge | true | | | bucketing_version | 2 | | | last_modified_by | hive | | | last_modified_time | 1586180747 | | | numFiles | 0 | | | numPartitions | 0 | | | numRows | 0 | | | rawDataSize | 0 | | | totalSize | 0 | | | transactional | true | | | transactional_properties | default | | | transient_lastDdlTime | 1586180747 | | | NULL | NULL | | # Storage Information | NULL | NULL | | SerDe Library: | org.apache.hadoop.hive.ql.io.orc.OrcSerde | NULL | | InputFormat: | org.apache.hadoop.hive.ql.io.orc.OrcInputFormat | NULL | | OutputFormat: | org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat | NULL | | Compressed: | No | NULL | | Num Buckets: | -1 | NULL | | Bucket Columns: | [] | NULL | | Sort Columns: | [] | NULL | | Storage Desc Params: | NULL | NULL | | | serialization.format | 1 | +-------------------------------+----------------------------------------------------+-----------------------------+ 43 rows selected (0.172 seconds) |

But here the problem is not being able to recover figures from Trash in case of an human error because previous figures are simply not deleted…

Workaround we have found but we are sad not being able to rely on this basic thing:

- TRUNCATE TABLE yannick.test01 PARTITION(fab=”GVA”, lot_partition=”TEST”);

- ALTER TABLE yannick.test01 drop PARTITION(fab=”GVA”, lot_partition=”TEST”);

- Deleteing manually the old forlder works but this is quite dangerous and not natural at all to do so…

References

- HIVE “INSERT OVERWRITE” DOES NOT REMOVE EXISTING DATA

- How to know location about partition in hive?

- How to list table or partition location from Hive Metastore

- Useful queries for the Hive metastore

- Metastore ER Diagram