Table of contents

Preamble

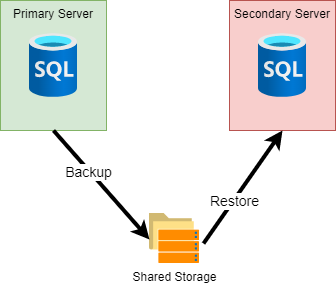

Log shipping (LS) is a proven legacy, the oldest, high availability (HA) feature based on backup and restore in SQL Server. Recovery Point Objective (RPO) and Recovery Time Objective (RTO) are more flexible and if your database is not highly critical then you might consider this HA option as well.

Log shipping can be combined with Availability Groups or Failover Cluster instances but remains the most simple to implement in places where you might have budget or skills issues.

Based on SQL Server native backup transaction log are sent from the primary server to secondary server where they are applied. SQL Server Agent manage the automatic flow of those transaction logs:

Microsoft claim that one of biggest advantage of LS is the capability to delay log apply and be able to react in case of human error before the mistake is applied to secondary database. They also claim that LS is also more tolerant to slow network.

In a LS architecture you can mix Linux and Windows hosts. For my trial I have chosen to use two virtual machines running Oracle Linux Server release 8.5. SQL Server is release 2019 and exactly 15.0.4188:

- server1.domain.com with IP address 192.168.56.101, my primary server

- server2.domain.com with IP address 192.168.56.102, my secondary server

To share storage between my two servers I have decided to follow official documentation and try Samba for the first time with the objective to lean something.

Samba installation and configuration

On primary server

[root@server1 ~]# dnf -y install samba |

[root@server1 ~]# mkdir /mssql/tlogs [root@server1 ~]# chown mssql:mssql /mssql/tlogs |

[root@server1 ~]# tail -n 8 /etc/samba/smb.conf [tlogs] comment = Transation logs directory for Log Shipping path=/mssql/tlogs available=yes read only=yes browsable=yes public=yes writable=no [root@server1 ~]# testparm Load smb config files from /etc/samba/smb.conf Loaded services file OK. Weak crypto is allowed Server role: ROLE_STANDALONE Press enter to see a dump of your service definitions |

[root@server1 ~]# smbpasswd -a mssql New SMB password: Retype new SMB password: Added user mssql. |

[root@server1 ~]# systemctl restart smb nmb |

On secondary server

[root@server2 ~]# smbclient --user=mssql -L //192.168.56.101 Enter SAMBA\mssql's password: Sharename Type Comment --------- ---- ------- print$ Disk Printer Drivers tlogs Disk Transation logs directory for Log Shipping IPC$ IPC IPC Service (Samba 4.14.5) mssql Disk Home Directories SMB1 disabled -- no workgroup available |

[root@server2 ~]# dnf -y install cifs-utils |

[root@server2 ~]# mkdir -p /mssql/tlogs [root@server2 ~]# vi ~mssql/.tlogscreds [root@server2 ~]# cat ~mssql/.tlogscreds username=mssql password=secure_password domain=workgroup [root@server2 ~]# chmod 660 ~mssql/.tlogscreds [root@server2 ~]# ll ~mssql/.tlogscreds -rw-rw---- 1 root root 52 Dec 16 17:14 /var/opt/mssql/.tlogscreds |

[root@server2 ~]# grep cifs /etc/fstab //192.168.56.101/tlogs /mssql/tlogs cifs credentials=/var/opt/mssql/.tlogcreds,ro,uid=mssql,gid=mssql 0 0 [root@server2 ~]# mount -a [root@server2 ~]# df -h /mssql/tlogs Filesystem Size Used Avail Use% Mounted on //192.168.56.101/tlogs 1014M 123M 892M 13% /mssql/tlogs |

You can create a test file on your primary server in /mssql/tlogs and you can access it in read only mode in secondary server: magical !

Log shipping configuration

Enable SQL Server Agent (for SQL Server 2019 and SQL Server 2017 CU4 and higher no package to install, just a configuration) on all servers:

[root@server1 mssql]# /opt/mssql/bin/mssql-conf set sqlagent.enabled true SQL Server needs to be restarted in order to apply this setting. Please run 'systemctl restart mssql-server.service'. [root@server1 mssql]# systemctl restart mssql-server |

On primary server

Create a test database with a test table:

1> create database testdb 2> go 1> use testdb 2> create table test01(id int, descr varchar(20)) 3> insert into test01 values(1,'One') 4> go Changed database context to 'testdb'. (1 rows affected) |

And backup it in LS shared directory:

1> backup database testdb to disk = '/mssql/tlogs/testdb.bak' 2> go Processed 328 pages for database 'testdb', file 'testdb' on file 1. Processed 2 pages for database 'testdb', file 'testdb_log' on file 1. BACKUP DATABASE successfully processed 330 pages in 0.080 seconds (32.177 MB/sec). |

I was then expecting a not so complex command but I was wrong. The script is provided by Microsoft directly and I have just customized it for my environment. The script is roughly based on sp_add_log_shipping_primary_database. It create jobs to handle the process (msdb.dbo.sysjobs_view and msdb.dbo.sp_help_job procedure to see them):

DECLARE @LS_BackupJobId AS uniqueidentifier DECLARE @LS_PrimaryId AS uniqueidentifier DECLARE @SP_Add_RetCode As int EXECUTE @SP_Add_RetCode = master.dbo.sp_add_log_shipping_primary_database @database = N'testdb' ,@backup_directory = N'/mssql/tlogs' ,@backup_share = N'/mssql/tlogs' ,@backup_job_name = N'LSBackup_testdb' ,@backup_retention_period = 4320 ,@backup_compression = 2 ,@backup_threshold = 60 ,@threshold_alert_enabled = 1 ,@history_retention_period = 5760 ,@backup_job_id = @LS_BackupJobId OUTPUT ,@primary_id = @LS_PrimaryId OUTPUT ,@overwrite = 1 IF (@@ERROR = 0 AND @SP_Add_RetCode = 0) BEGIN DECLARE @LS_BackUpScheduleUID As uniqueidentifier DECLARE @LS_BackUpScheduleID AS int EXECUTE msdb.dbo.sp_add_schedule @schedule_name =N'LSBackupSchedule' ,@enabled = 1 ,@freq_type = 4 ,@freq_interval = 1 ,@freq_subday_type = 4 ,@freq_subday_interval = 15 ,@freq_recurrence_factor = 0 ,@active_start_date = 20170418 ,@active_end_date = 99991231 ,@active_start_time = 0 ,@active_end_time = 235900 ,@schedule_uid = @LS_BackUpScheduleUID OUTPUT ,@schedule_id = @LS_BackUpScheduleID OUTPUT EXECUTE msdb.dbo.sp_attach_schedule @job_id = @LS_BackupJobId ,@schedule_id = @LS_BackUpScheduleID EXECUTE msdb.dbo.sp_update_job @job_id = @LS_BackupJobId ,@enabled = 1 END EXECUTE master.dbo.sp_add_log_shipping_alert_job EXECUTE master.dbo.sp_add_log_shipping_primary_secondary @primary_database = N'testdb' ,@secondary_server = N'192.168.56.102' ,@secondary_database = N'testdb' ,@overwrite = 1 |

On secondary server

As you have access to the shared folder start by restoring the database you plan to handle with LS:

1> restore database testdb from disk = '/mssql/tlogs/testdb.bak' with norecovery 2> go Processed 328 pages for database 'testdb', file 'testdb' on file 1. Processed 2 pages for database 'testdb', file 'testdb_log' on file 1. RESTORE DATABASE successfully processed 330 pages in 0.101 seconds (25.487 MB/sec). |

Same as for primary database the script is provided by Microsoft and is not so easy to follow. The procedure in case of the secondary is sp_add_log_shipping_secondary_primary. It create jobs to handle the process (msdb.dbo.sysjobs_view and msdb.dbo.sp_help_job procedure to see them):

DECLARE @LS_Secondary__CopyJobId AS uniqueidentifier DECLARE @LS_Secondary__RestoreJobId AS uniqueidentifier DECLARE @LS_Secondary__SecondaryId AS uniqueidentifier DECLARE @LS_Add_RetCode As int EXECUTE @LS_Add_RetCode = master.dbo.sp_add_log_shipping_secondary_primary @primary_server = N'192.168.56.101' ,@primary_database = N'testdb' ,@backup_source_directory = N'/mssql/tlogs/' ,@backup_destination_directory = N'/mssql/tlogs/' ,@copy_job_name = N'LSCopy_testdb' ,@restore_job_name = N'LSRestore_testdb' ,@file_retention_period = 4320 ,@overwrite = 1 ,@copy_job_id = @LS_Secondary__CopyJobId OUTPUT ,@restore_job_id = @LS_Secondary__RestoreJobId OUTPUT ,@secondary_id = @LS_Secondary__SecondaryId OUTPUT IF (@@ERROR = 0 AND @LS_Add_RetCode = 0) BEGIN DECLARE @LS_SecondaryCopyJobScheduleUID As uniqueidentifier DECLARE @LS_SecondaryCopyJobScheduleID AS int EXECUTE msdb.dbo.sp_add_schedule @schedule_name =N'DefaultCopyJobSchedule' ,@enabled = 1 ,@freq_type = 4 ,@freq_interval = 1 ,@freq_subday_type = 4 ,@freq_subday_interval = 15 ,@freq_recurrence_factor = 0 ,@active_start_date = 20170418 ,@active_end_date = 99991231 ,@active_start_time = 0 ,@active_end_time = 235900 ,@schedule_uid = @LS_SecondaryCopyJobScheduleUID OUTPUT ,@schedule_id = @LS_SecondaryCopyJobScheduleID OUTPUT EXECUTE msdb.dbo.sp_attach_schedule @job_id = @LS_Secondary__CopyJobId ,@schedule_id = @LS_SecondaryCopyJobScheduleID DECLARE @LS_SecondaryRestoreJobScheduleUID As uniqueidentifier DECLARE @LS_SecondaryRestoreJobScheduleID AS int EXECUTE msdb.dbo.sp_add_schedule @schedule_name =N'DefaultRestoreJobSchedule' ,@enabled = 1 ,@freq_type = 4 ,@freq_interval = 1 ,@freq_subday_type = 4 ,@freq_subday_interval = 15 ,@freq_recurrence_factor = 0 ,@active_start_date = 20170418 ,@active_end_date = 99991231 ,@active_start_time = 0 ,@active_end_time = 235900 ,@schedule_uid = @LS_SecondaryRestoreJobScheduleUID OUTPUT ,@schedule_id = @LS_SecondaryRestoreJobScheduleID OUTPUT EXECUTE msdb.dbo.sp_attach_schedule @job_id = @LS_Secondary__RestoreJobId ,@schedule_id = @LS_SecondaryRestoreJobScheduleID END DECLARE @LS_Add_RetCode2 As int IF (@@ERROR = 0 AND @LS_Add_RetCode = 0) BEGIN EXECUTE @LS_Add_RetCode2 = master.dbo.sp_add_log_shipping_secondary_database @secondary_database = N'testdb' ,@primary_server = N'192.168.56.101' ,@primary_database = N'testdb' ,@restore_delay = 0 ,@restore_mode = 0 ,@disconnect_users = 0 ,@restore_threshold = 45 ,@threshold_alert_enabled = 1 ,@history_retention_period = 5760 ,@overwrite = 1 END IF (@@error = 0 AND @LS_Add_RetCode = 0) BEGIN EXECUTE msdb.dbo.sp_update_job @job_id = @LS_Secondary__CopyJobId ,@enabled = 1 EXECUTE msdb.dbo.sp_update_job @job_id = @LS_Secondary__RestoreJobId ,@enabled = 1 END |

Start the log shipping configuration

On primary server start the log shipping job:

1> use msdb 2> execute dbo.sp_start_job N'LSBackup_testdb' 3> go Changed database context to 'msdb'. Job 'LSBackup_testdb' started successfully. |

On seconday server start the log shipping job:

1> USE msdb 2> execute dbo.sp_start_job N'LSCopy_testdb' 3> execute dbo.sp_start_job N'LSRestore_testdb' 4> go Changed database context to 'msdb'. Job 'LSCopy_testdb' started successfully. Job 'LSRestore_testdb' started successfully. |

Log shipping failover testing

The log shipped database is not accessible, even in read only mode:

1> use testdb 2> go Msg 927, Level 14, State 2, Server server2, Line 1 Database 'testdb' cannot be opened. It is in the middle of a restore. |

To access it you must break the log shipping process. To check if my test table rows are replicated I insert a new row:

1> insert into test01 values(2,'Two'); 2> select * from test01 3> go (1 rows affected) id descr ----------- -------------------- 1 One 2 Two |

Break the LS process on secondary server with:

1> restore database testdb with recovery 2> go RESTORE DATABASE successfully processed 0 pages in 0.441 seconds (0.000 MB/sec). |

Then you can access the database and confirm all has been replicated:

1> use testdb 2> select * from test01 3> go Changed database context to 'testdb'. id descr ----------- -------------------- 1 One 2 Two (2 rows affected) |

If I’m not wrong to recover LS situation you have to restart from a full backup of the original database. Which might be an issue if your primary is really big…

On primary server make a full database backup:

1> backup database testdb to disk = '/mssql/tlogs/testdb.bak' 2> go Processed 328 pages for database 'testdb', file 'testdb' on file 3. Processed 2 pages for database 'testdb', file 'testdb_log' on file 3. BACKUP DATABASE successfully processed 330 pages in 0.065 seconds (39.603 MB/sec). |

Restore this backup on secondary server with replace option to overwrite the existing database:

1> restore database testdb from disk = '/mssql/tlogs/testdb.bak' with norecovery 2> go Msg 3159, Level 16, State 1, Server server2, Line 1 The tail of the log for the database "testdb" has not been backed up. Use BACKUP LOG WITH NORECOVERY to backup the log if it contains work you do not want to lose. Use the WITH REPLACE or WITH STOPAT clause of the RESTORE statement to just overwrite the contents of the log. Msg 3013, Level 16, State 1, Server server2, Line 1 RESTORE DATABASE is terminating abnormally. 1> restore database testdb from disk = '/mssql/tlogs/testdb.bak' with norecovery, replace 2> go Processed 328 pages for database 'testdb', file 'testdb' on file 1. Processed 2 pages for database 'testdb', file 'testdb_log' on file 1. RESTORE DATABASE successfully processed 330 pages in 0.065 seconds (39.603 MB/sec). |

On primary restart the LS job:

1> exec msdb.dbo.sp_start_job N'LSBackup_testdb' 2> go Job 'LSBackup_testdb' started successfully. |

On secondary restart the two LS jobs:

1> exec msdb.dbo.sp_start_job N'LSCopy_testdb' 2> exec msdb.dbo.sp_start_job N'LSRestore_testdb' 3> go Job 'LSCopy_testdb' started successfully. Job 'LSRestore_testdb' started successfully. |

And you are back to original situation…

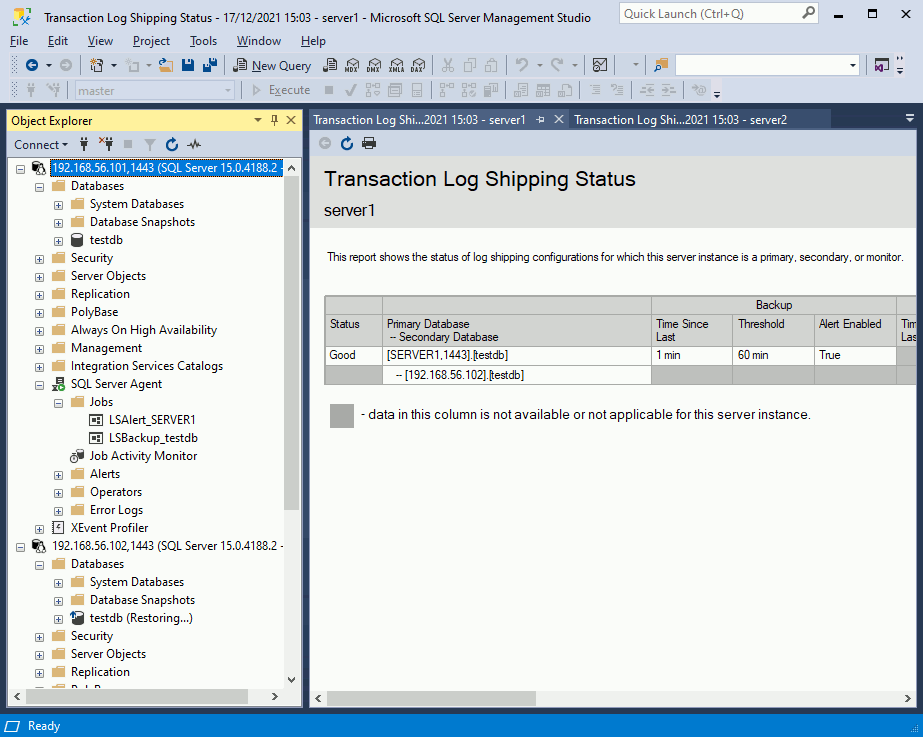

Log shipping monitoring

You have plenty of views and procedures (https://docs.microsoft.com/en-us/sql/database-engine/log-shipping/monitor-log-shipping-transact-sql?view=sql-server-ver15) but again the easiest way is to use SQL Server Management Studio (SSMS), right mouse click on instance then Reports / Standard Reports / Transaction Log Shipping Status menu:

References

- Get started with Log Shipping on Linux

- Business continuity and database recovery – SQL Server on Linux

- About Log Shipping (SQL Server)