Table of contents

Preamble

SQL Server compression methods allow you to compress your data to use less disk space at the cost of a bit of CPU to decompress. Nowadays with full flash big disk array we have, saving a bit on the storage isn’t really an argument. Knowing that modern disk arrays also have compression algorithms and apply thin provisioning (virtual allocation provided only when really used).

But the key argument, for me, is really the amount of IOPS. If your object is smaller on disk then you read less to put it in database memory. But as said you need some extra CPU to decompress and those CPU, in the case of a database server, are very expensive because at the cost of database licences…

Versus Oracle it is more than welcome that Microsoft is providing those features in all editions of SQL Server at no extra cost… Only few enhancements are available with SQL Server Enterprise edition.

We have five methods available:

- Row compression

- Page compression

- COMPRESS/DECOMPRESS

- Columnstore tables and indexes

- Columnstore archive tables and indexes

My testing has been done with SQL Server 2022 developer edition running on a Linux server with 12 cores, 64GB of memory and Red Hat Enterprise Linux release 8.7 (Ootpa).

SQL Server compression test case

Same as my Oracle JSON post (http://), I have downloaded the 1.7m users from JSON Editor Online web site. Loading it in SQL Server and automatically create a test01 table in dbo schema is easy with OPENROWSET function (ensure first you have enough space in the filegroup):

select json01.* into test01 from openrowset(bulk '/tmp/users_1.7m.json', single_clob) as j cross apply openjson(bulkcolumn) with ( id int, name nvarchar(50), city nvarchar(50), age tinyint, friends nvarchar(max) as json ) as json01 |

We can estimate the size of the object with:

1> use testdb 2> go Changed database context to 'testdb'. 1> exec sp_spaceused N'dbo.test01' 2> go name rows reserved data index_size unused -------------------------------------------------------------------------------------------------------------------------------- -------------------- ------------------ ------------------ ------------------ ------------------ test01 1700000 932888 KB 932712 KB 8 KB 168 KB |

So here it is for 932712 KB:

1> select top 1 * from dbo.test01 2> go id name city age friends ----------- -------------------------------------------------- -------------------------------------------------- --- --------------------------------------------------------------------------------------------------------------------------------------------- 0 Elijah Austin 78 [{"name":"Michelle","hobbies":["Watching Sports","Reading","Skiing & Snowboarding"]},{"name":"Robert","hobbies":["Traveling","Video Games"]}] |

I expect quite a good compression ratio as the friends column contains quite repetitive information and name and city are also quite repetitive. We will see that this depend a lot on the chosen algorithm…

SQL Server compression testing

sp_estimate_data_compression_savings

There is a very useful procedure while working with SQL Server compression and it is sp_estimate_data_compression_savings. This procedure returns the estimated disk space you can expect from the chosen compression algorithm:

1> exec sys.sp_estimate_data_compression_savings @schema_name = 'dbo', @object_name = 'test01', @index_id = null, @partition_number = null, @data_compression = 'ROW' 2> go object_name schema_name -------------------------------------------------------------------------------------------------------------------------------- ------------------------------------------------------------------------------------- test01 dbo index_id partition_number size_with_current_compression_setting(KB) size_with_requested_compression_setting(KB) sample_size_with_current_compression_setting(KB) sample_size_with_requested_compression_setting(KB) ----------- ---------------- ----------------------------------------- ------------------------------------------- ------------------------------------------------ -------------------------------------------------- 0 1 932720 904248 40360 39128 |

Tips:

Once the action has been done you can also use sp_spaceused function to get the real disk usage of the object.

Row compression

Row compression is transparent for the application. The process used is roughly to try to store information in a less expensive format. For exemple int in tinyint, char in varchar, nchar in nvarchar and so on whenever possible. As we can see the gain for my test table is close to zero, my table would go from 932720 KB to 904248 KB. Mainly because I have been not too bad when choosing the type of my columns. Maybe the only point where SQL Server can optimize is for my id column that could be store in tinyint and smallint…

create table test01_row ( id int, name nvarchar(50), city nvarchar(50), age tinyint, friends nvarchar(max) ) with (data_compression = row) go insert into test01_row (id, name, city, age, friends) select id, name, city, age, friends from test01 go |

The final size it a bit below the estimated one (895032 KB at the end):

1> exec sp_spaceused N'dbo.test01_row' 2> go name rows reserved data index_size unused -------------------------------------------------------------------------------------------------------------------------------- -------------------- ------------------ ------------------ ------------------ ------------------ test01_row 1700000 895304 KB 895032 KB 8 KB 264 KB |

To compare the difference of access between the two tables I execute:

dbcc dropcleanbuffers go select count(*) from dbo.test01 where age=50 go select count(*) from dbo.test01_row where age=50 go |

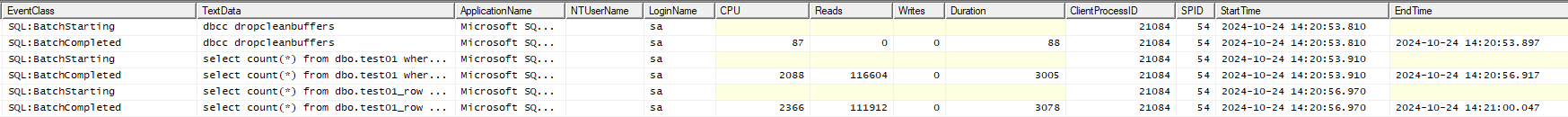

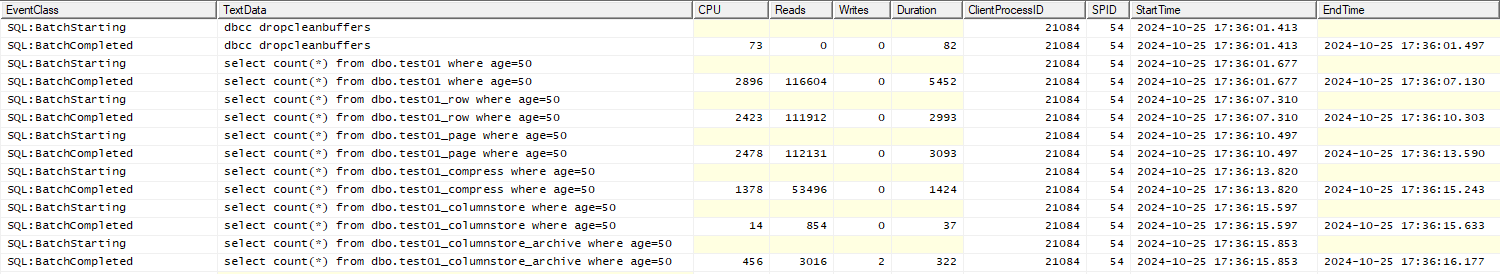

We can see a bit more CPU for the compressed version and a bit less IOPS, the execution time is almost the same:

Page compression

Page compression is also transparent for the application ! The algorithm is clearly going further by replacing recurrent vales in pages by a corresponding pointer on a common value, recurring characters in string are also compressed.

1> exec sys.sp_estimate_data_compression_savings @schema_name = 'dbo', @object_name = 'test01', @index_id = null, @partition_number = null, @data_compression = 'PAGE' 2> go object_name schema_name -------------------------------------------------------------------------------------------------------------------------------- -------------------------------------------------------------------------------------------------------------------------------- test01 dbo index_id partition_number size_with_current_compression_setting(KB) size_with_requested_compression_setting(KB) sample_size_with_current_compression_setting(KB) sample_size_with_requested_compression_setting(KB) ----------- ---------------- ----------------------------------------- ------------------------------------------- ------------------------------------------------ -------------------------------------------------- 0 1 932720 904896 40504 39296 |

I create my test01_page page compressed table with:

create table test01_page ( id int, name nvarchar(50), city nvarchar(50), age tinyint, friends nvarchar(max) ) with (data_compression = page) go insert into test01_page (id, name, city, age, friends) select id, name, city, age, friends from test01 go |

Size is, again, below the estimate but bigger than the row compression. This is unexpected to me has te hobbies in the friends column are repeated quite z lot of times:

1> exec sp_spaceused N'dbo.test01_page' 2> go name rows reserved data index_size unused -------------------------------------------------------------------------------------------------------------------------------- -------------------- ------------------ ------------------ ------------------ ------------------ test01_page 1700000 897032 KB 896896 KB 8 KB 128 KB |

I execute the below test script:

dbcc dropcleanbuffers go select count(*) from dbo.test01 where age=50 go select count(*) from dbo.test01_row where age=50 go select count(*) from dbo.test01_page where age=50 go |

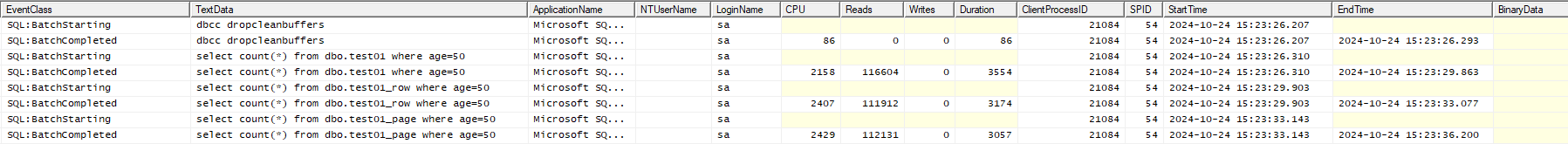

And the result is aligned, more I/O for page compression but the size difference does not significantly generate more CPU:

COMPRESS/DECOMPRESS compression

This column-oriented compression method with GZip is clearly not transparent as you have to store in a binary column the compressed value and each time you want to access it you have to decompress it ! Needless to say that the COMPRESS/DECOMPRESS functions are CPU intensive and it has taken a bit more of 12 minutes to fill my 1.7 millions rows:

create table test01_compress ( id int, name nvarchar(50), city nvarchar(50), age tinyint, friends varbinary(max) ) go 1> set statistics time on 2> go 1> insert into test01_compress (id, name, city, age, friends) 2> select id, name, city, age, compress(friends) 3> from test01 4> go SQL Server parse and compile time: CPU time = 0 ms, elapsed time = 0 ms. SQL Server Execution Times: CPU time = 586626 ms, elapsed time = 728015 ms. (1700000 rows affected) |

It is also not automatic from applicative perspective to make a select:

1> select top 1 id, name, city, age, cast(decompress(friends) as nvarchar(max)) as friends 2> from test01_compress 3> go id name city age friends ----------- -------------------------------------------------- -------------------------------------------------- --- --------------------------------------------------------------------------------------------------------------------------------------------- 0 Elijah Austin 78 [{"name":"Michelle","hobbies":["Watching Sports","Reading","Skiing & Snowboarding"]},{"name":"Robert","hobbies":["Traveling","Video Games"]}] |

But for which size ?:

1> exec sp_spaceused N'dbo.test01_compress' 2> go name rows reserved data index_size unused -------------------------------------------------------------------------------------------------------------------------------- -------------------- ------------------ ------------------ ------------------ ------------------ test01_compress 1700000 427976 KB 427880 KB 8 KB 88 KB |

Size almost divided by two !

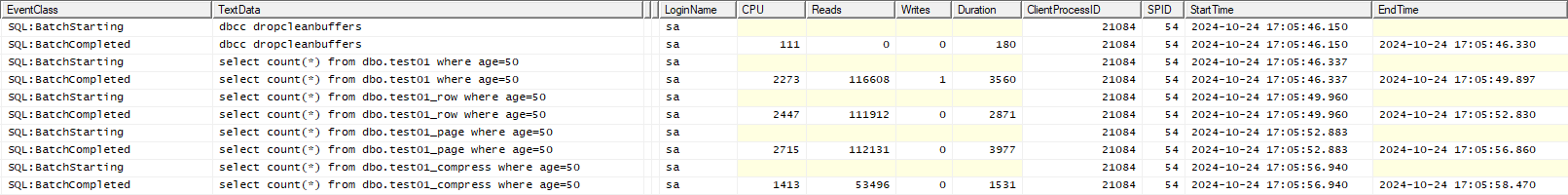

I am reading less from disk and obviously less CPU:

But in my query I am a bit cheating and to see a difference I would really need to use the friend columns like this one that is returning a distinct list of all hobbies. The query on original test01 table:

1> set statistics io on 2> set statistics time on 3> go 1> dbcc dropcleanbuffers 2> go 1> select distinct c.value as hobbie 2> from test01 a 3> cross apply openjson(friends) with ( 4> name nvarchar(50), 5> hobbies nvarchar(max) as json 6> ) as b 7> cross apply openjson(hobbies) as c 8> go |

And the one on test01_compress table with the friends column that is compress, notice the use of DECOMPRESS cpu-intensive function:

1> select distinct c.value as hobbie 2> from test01_compress a 3> cross apply openjson(cast(decompress(friends) as nvarchar(max))) with ( 4> name nvarchar(50), 5> hobbies nvarchar(max) as json 6> ) as b 7> cross apply openjson(hobbies) as c 8> go |

In term of CPU, even which much less IOPS it makes a huge difference. Three times more CPU and three time the base execution time on raw test01 table:

| test01 | test01_compress |

|---|---|

| logical reads 116585 SQL Server Execution Times: CPU time = 339166 ms, elapsed time = 73572 ms | logical reads 53485 SQL Server Execution Times: CPU time = 988766 ms, elapsed time = 210704 ms |

Columnstore compression

As the documentation state the underlining compression algorithm is Microsoft XPRESS. Unlike row and page compression the columnstore compression is replacing your rowstore table format by an index. The command magic command is CREATE COLUMNSTORE INDEX. As you have many options CLUSTERED/NONCLUSTERED or ordered Microsoft is sharing a nice design document to help.

I’m not testing DML on my tables but columnstore and columnstore archive compression are OLAP oriented with queries selecting few columns and accessing rows with low cardinality (low number of distinct values). Let’s first see how much disk saving we can expect:

1> exec sys.sp_estimate_data_compression_savings @schema_name = 'dbo', @object_name = 'test01', @index_id = null, @partition_number = null, @data_compression = 'COLUMNSTORE' 2> go object_name schema_name -------------------------------------------------------------------------------------------------------------------------------- -------------------------------------------------------------------------------------------------------------------------------- test01 dbo index_id partition_number size_with_current_compression_setting(KB) size_with_requested_compression_setting(KB) sample_size_with_current_compression_setting(KB) sample_size_with_requested_compression_setting(KB) ----------- ---------------- ----------------------------------------- ------------------------------------------- ------------------------------------------------ -------------------------------------------------- 0 1 932720 150376 39696 6400 |

Columnstore or columnstore archive cannot be specified as before while creating the table or you get:

This is not a valid data compression setting for this object. It can only be used with columnstore indexes. Please choose NONE, PAGE, or ROW compression. |

You need first to create a rowstore normal table and then convert it to columnstore with:

create table test01_columnstore ( id int, name nvarchar(50), city nvarchar(50), age tinyint, friends nvarchar(max) ) with (data_compression = none) go insert into test01_columnstore (id, name, city, age, friends) select id, name, city, age, friends from test01 go create clustered columnstore index test01_cci on test01_columnstore go |

Finally the space used is:

1> exec sp_spaceused N'dbo.test01_columnstore' 2> go name rows reserved data index_size unused -------------------------------------------------------------------------------------------------------------------------------- -------------------- ------------------ ------------------ ------------------ ------------------ test01_columnstore 1700000 151760 KB 151600 KB 0 KB 160 KB |

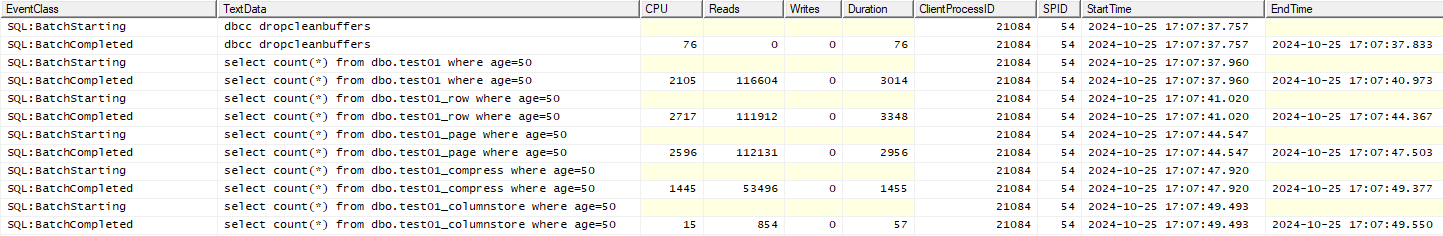

Response time is quite amazing, CPU divided by more than 100 and IOPS also divided by more than 100:

Columnstore archive compression

As written in the documentation I can now create directly the table in columnstore format and I do not need to go through the rowstore step:

Starting with SQL Server 2016 (13.x), you can create the table as a clustered columnstore index. It’s no longer necessary to first create a rowstore table and then convert it to a clustered columnstore index.

The expected saving is almost the same as columnstore format:

1> exec sys.sp_estimate_data_compression_savings @schema_name = 'dbo', @object_name = 'test01', @index_id = null, @partition_number = null, @data_compression = 'COLUMNSTORE_ARCHIVE' 2> go object_name schema_name -------------------------------------------------------------------------------------------------------------------------------- -------------------------------------------------------------------------------------------------------------------------------- test01 dbo index_id partition_number size_with_current_compression_setting(KB) size_with_requested_compression_setting(KB) sample_size_with_current_compression_setting(KB) sample_size_with_requested_compression_setting(KB) ----------- ---------------- ----------------------------------------- ------------------------------------------- ------------------------------------------------ -------------------------------------------------- 0 1 932720 150184 39944 6432 |

So create a columnstore archive index table directly:

create table test01_columnstore_archive ( id int, name nvarchar(50), city nvarchar(50), age tinyint, friends nvarchar(max), index test01_cci_archive clustered columnstore with (data_compression = columnstore_archive) ) go insert into test01_columnstore_archive (id, name, city, age, friends) select id, name, city, age, friends from test01 go |

Again, strangely the size is bigger than the “simple” columnstore table. Which is almost killing this compression method:

1> exec sp_spaceused N'dbo.test01_columnstore_archive' 2> go name rows reserved data index_size unused -------------------------------------------------------------------------------------------------------------------------------- -------------------- ------------------ ------------------ ------------------ ------------------ test01_columnstore_archive 1700000 156504 KB 155720 KB 16 KB 768 KB |

And the response time for columnstore archive is worse than simple columnstore so wondering the real advantage of this format:

SQL Server compression wrap up

All in one in a graphic it gives (IOPS on left y-axis):

As I have seen on many blog posts I would stop at the columnstore compression and avoid columnstore archive compression…

Also keep in mind that those SQL Server compression algorithms should be mainly used for static objects. So particularly suitable for DataWareHouse environment and star/snowflake schemas with big fact tables.

References

- Compressing Big Static SQL Server Tables

- When to Use Row or Page Compression in SQL Server

- Demonstrating the Effects of Using Data Compression in SQL Server

- Data Compression in SQL Server – Pros and Cons