
Preamble

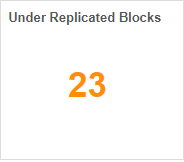

In Ambari, and in HDFS more precisely, there are two widgets that will jump to your eyes if they are not equal to zero. They are Blocks With Corrupted Replicas and Under Replicated Blocks. In the graphical interface this is something like:

Managing under replicated blocks

You can get the complete list of impacted files with the command below. The egrep filter simply removes the lines that start with dots, which are fsck's progress indicator:

```
hdfs@client_node:~$ hdfs fsck / | egrep -v '^\.+'
Connecting to namenode via http://namenode01.domain.com:50070/fsck?ugi=hdfs&path=%2F
FSCK started by hdfs (auth:SIMPLE) from /10.75.144.5 for path / at Tue Jul 23 14:58:19 CEST 2019
/tmp/hive/training/dda2d815-27d3-43e0-9d3b-4aea3983c1a9/hive_2018-09-18_12-13-21_550_5155956994526391933-12/_tez_scratch_dir/split_Map_1/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1087250107_13523212. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/ambari-qa/.staging/job_1525749609269_2679/libjars/hive-shims-0.23-1.2.1000.2.6.4.0-91.jar: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1074437588_697325. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/ambari-qa/.staging/job_1525749609269_2679/libjars/hive-shims-common-1.2.1000.2.6.4.0-91.jar: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1074437448_697185. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/hdfs/.staging/job_1541585350344_0008/job.jar: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1092991377_19266614. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/hdfs/.staging/job_1541585350344_0008/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1092991378_19266615. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/hdfs/.staging/job_1541585350344_0041/job.jar: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1093022580_19297823. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/hdfs/.staging/job_1541585350344_0041/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1093022581_19297824. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/training/.staging/job_1519657336782_0105/job.jar: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1073754565_13755. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/training/.staging/job_1519657336782_0105/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1073754566_13756. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/training/.staging/job_1519657336782_0105/libjars/hive-hcatalog-core.jar: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1073754564_13754. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/training/.staging/job_1536057043538_0001/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1085621525_11894367. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/training/.staging/job_1536057043538_0002/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1085621527_11894369. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/training/.staging/job_1536057043538_0004/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1085621593_11894435. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/training/.staging/job_1536057043538_0023/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1085622064_11894906. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/training/.staging/job_1536057043538_0025/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1085622086_11894928. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/training/.staging/job_1536057043538_0027/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1085622115_11894957. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/training/.staging/job_1536057043538_0028/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1085622133_11894975. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/training/.staging/job_1536642465198_0002/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1086397707_12670663. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/training/.staging/job_1536642465198_0003/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1086397706_12670662. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/training/.staging/job_1536642465198_0004/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1086397708_12670664. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/training/.staging/job_1536642465198_0005/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1086397718_12670674. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/training/.staging/job_1536642465198_0006/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1086397720_12670676. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/training/.staging/job_1536642465198_0007/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1086397721_12670677. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
/user/training/.staging/job_1536642465198_2307/job.split: Under replicated BP-1711156358-10.75.144.1-1519036486930:blk_1086509846_12782817. Target Replicas is 10 but found 8 live replica(s), 0 decommissioned replica(s) and 0 decommissioning replica(s).
 Total size: 25053842686185 B (Total open files size: 33152660866 B)
 Total dirs: 1500114
 Total files: 12517972
 Total symlinks: 0 (Files currently being written: 268)
 Total blocks (validated): 12534979 (avg. block size 1998714 B) (Total open file blocks (not validated): 325)
 Minimally replicated blocks: 12534979 (100.0 %)
 Over-replicated blocks: 0 (0.0 %)
 Under-replicated blocks: 24 (1.9146422E-4 %)
 Mis-replicated blocks: 0 (0.0 %)
 Default replication factor: 3
 Average block replication: 2.9715698
 Corrupt blocks: 0
 Missing replicas: 48 (1.2886386E-4 %)
 Number of data-nodes: 8
 Number of racks: 2
FSCK ended at Tue Jul 23 15:01:08 CEST 2019 in 169140 milliseconds

The filesystem under path '/' is HEALTHY
```
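When the list is long, it can help to reduce each under-replicated entry to its path and replica counts. The function below is a minimal sketch in POSIX sh and awk; the name `under_replicated_summary` is hypothetical, and it assumes the one-line message format shown above (`<path>: Under replicated <block>. Target Replicas is N but found M live replica(s)...`) and paths that do not themselves contain `: Under replicated `.

```shell
# POSIX sh + awk. Hypothetical helper: read `hdfs fsck` output on stdin and
# print "<path> <target replicas> <live replicas>" for each under-replicated block.
under_replicated_summary() {
  awk '
    /: +Under replicated / {
      # Everything before ": Under replicated " is the file path.
      split($0, parts, ": +Under replicated ")
      target = ""; live = ""
      # "Target Replicas is " is 19 characters long; keep only the number.
      if (match($0, /Target Replicas is [0-9]+/))
        target = substr($0, RSTART + 19, RLENGTH - 19)
      # Strip "found " (6 chars) and " live" (5 chars) around the number.
      if (match($0, /found [0-9]+ live/))
        live = substr($0, RSTART + 6, RLENGTH - 11)
      print parts[1], target, live
    }
  '
}

# Usage on a client node:
#   hdfs fsck / | under_replicated_summary
```

The summary lines at the end of the report (such as `Under-replicated blocks: 24`) are ignored because they do not contain the `: Under replicated ` marker.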

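All those files show the strange “Target Replicas is 10 but found 8 live replica(s)” message. They are job staging files (job.jar, job.split, libjars); MapReduce writes submitted job files with the replication level given by mapreduce.client.submit.file.replication, 10 by default, and with only 8 DataNodes in this cluster that target can never be reached. As our default replication factor is 3, I decided to set it back to 3 with: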

```
hdfs@client_node:~$ hdfs dfs -setrep 3 /user/training/.staging/job_1536642465198_2307/job.split
Replication 3 set: /user/training/.staging/job_1536642465198_2307/job.split
```

This fixed that file, and the number of under-replicated blocks dropped from 24 to 23:

```
 Total size: 25009165459559 B (Total open files size: 1006863 B)
 Total dirs: 1500150
 Total files: 12528930
 Total symlinks: 0 (Files currently being written: 47)
 Total blocks (validated): 12545325 (avg. block size 1993504 B) (Total open file blocks (not validated): 43)
 Minimally replicated blocks: 12545325 (100.0 %)
 Over-replicated blocks: 0 (0.0 %)
 Under-replicated blocks: 23 (1.8333523E-4 %)
 Mis-replicated blocks: 0 (0.0 %)
 Default replication factor: 3
 Average block replication: 2.9715445
 Corrupt blocks: 0
 Missing replicas: 46 (1.2339374E-4 %)
 Number of data-nodes: 8
 Number of racks: 2
FSCK ended at Tue Jul 23 15:49:27 CEST 2019 in 155922 milliseconds
```
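Fixing the remaining files one by one quickly becomes tedious. Below is a sketch of a batch version that reads one HDFS path per line on stdin and calls the same `hdfs dfs -setrep` command used above for each of them. The function `setrep_all` and its `DRY_RUN` switch are my own illustration, not part of the HDFS CLI.

```shell
# POSIX sh. Hypothetical helper: set the replication factor of every HDFS path
# read on stdin (one per line). With DRY_RUN=1 the commands are only printed.
setrep_all() {
  rep="${1:-3}"   # target replication; 3 is this cluster's default
  while IFS= read -r path; do
    [ -n "$path" ] || continue   # skip blank lines
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "hdfs dfs -setrep $rep $path"
    else
      hdfs dfs -setrep "$rep" "$path"
    fi
  done
}

# Usage sketch: extract the under-replicated file paths from fsck and fix them.
#   hdfs fsck / | grep 'Under replicated' | sed 's/: *Under replicated .*//' \
#     | sort -u | DRY_RUN=1 setrep_all 3
```

Running with `DRY_RUN=1` first lets you review the list before changing anything. `hdfs dfs -setrep` also accepts `-w` to wait until the new replication is reached, which can take a while on many files.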

We can also see the change graphically in Ambari:

Another option is to delete the files if they are of no interest or if, as in my case, they are old temporary files, such as this one from my list:

```
hdfs@client_node:~$ hdfs dfs -ls -d /tmp/hive/training/dda2d815*
drwx------   - training hdfs          0 2018-09-18 12:53 /tmp/hive/training/dda2d815-27d3-43e0-9d3b-4aea3983c1a9
```

Then delete it, skipping the trash. No recovery is possible afterwards; if you are unsure, keep the trash enabled, but then the file only moves to the trash and its blocks remain (still under-replicated) until HDFS purges the trash after fs.trash.interval minutes:

```
hdfs@client_node:~$ hdfs dfs -rm -r -f -skipTrash /tmp/hive/training/dda2d815-27d3-43e0-9d3b-4aea3983c1a9
Deleted /tmp/hive/training/dda2d815-27d3-43e0-9d3b-4aea3983c1a9
```
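Before reaching for `-skipTrash`, it is worth checking whether the trash is enabled at all. `hdfs getconf -confKey fs.trash.interval` prints the configured value in minutes, where 0 disables the trash. The small helper below, whose name is hypothetical, only turns that value into a readable verdict. It assumes an integer value and reads the client-side configuration, which on an Ambari-managed cluster is usually, but not necessarily, the same as the NameNode's.

```shell
# POSIX sh. Hypothetical helper: interpret an fs.trash.interval value (minutes).
describe_trash_interval() {
  minutes="$1"
  case "$minutes" in
    ''|*[!0-9]*)
      # Assumption: integer minutes; anything else is reported, not guessed.
      echo "unexpected fs.trash.interval value: '$minutes'" >&2
      return 1 ;;
  esac
  if [ "$minutes" -eq 0 ]; then
    echo "trash disabled: every hdfs dfs -rm is immediate and permanent"
  else
    echo "trash enabled: deleted files stay in .Trash for roughly $minutes minutes"
  fi
}

# Usage on a client node:
#   describe_trash_interval "$(hdfs getconf -confKey fs.trash.interval)"
```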

The result is the same, with one fewer under-replicated block (23 to 22):

```
 Total size: 25019302903260 B (Total open files size: 395112 B)
 Total dirs: 1439889
 Total files: 12474754
 Total symlinks: 0 (Files currently being written: 69)
 Total blocks (validated): 12491149 (avg. block size 2002962 B) (Total open file blocks (not validated): 57)
 Minimally replicated blocks: 12491149 (100.0 %)
 Over-replicated blocks: 0 (0.0 %)
 Under-replicated blocks: 22 (1.7612471E-4 %)
 Mis-replicated blocks: 0 (0.0 %)
 Default replication factor: 3
 Average block replication: 2.971359
 Corrupt blocks: 0
 Missing replicas: 44 (1.185481E-4 %)
 Number of data-nodes: 8
 Number of racks: 2
FSCK ended at Tue Jul 23 16:18:51 CEST 2019 in 141604 milliseconds
```

Managing blocks with corrupted replicas

Simulating corrupted blocks is not a piece of cake, and even though Ambari is showing corrupted blocks, in reality I have none:

```
hdfs@client_node:~$ hdfs fsck -list-corruptfileblocks
Connecting to namenode via http://namenode01.domain.com:50070/fsck?ugi=hdfs&listcorruptfileblocks=1&path=%2F
The filesystem under path '/' has 0 CORRUPT files
```
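For monitoring, the last line of this output is the interesting one. Here is a minimal sketch that extracts the count and turns it into an exit status, for example for a cron job. Both function names are hypothetical, and the parsing assumes the `has N CORRUPT files` wording shown above.

```shell
# POSIX sh + sed. Hypothetical helpers for `hdfs fsck -list-corruptfileblocks` output.

# Print N from the "... has N CORRUPT files" summary line (nothing if absent).
corrupt_file_count() {
  sed -n 's/.* has \([0-9][0-9]*\) CORRUPT files.*/\1/p'
}

# Succeed only when the summary line is present and reports 0;
# an unparseable output is treated as a failure, not as healthy.
no_corrupt_files() {
  n=$(corrupt_file_count)
  [ -n "$n" ] && [ "$n" -eq 0 ]
}

# Usage, e.g. from cron:
#   hdfs fsck -list-corruptfileblocks | no_corrupt_files || echo "HDFS: corrupt files found"
```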

On a TI environment that plenty of people had played with, I finally got the (hopefully) rare corrupted blocks message. In real life this should not happen, since the default HDFS replication factor is 3 (here it even prevented me from starting HBase):

```
[hdfs@client_node ~]$ hdfs dfsadmin -report
Configured Capacity: 6139207680 (5.72 GB)
Present Capacity: 5701219000 (5.31 GB)
DFS Remaining: 2659930112 (2.48 GB)
DFS Used: 3041288888 (2.83 GB)
DFS Used%: 53.34%
Replicated Blocks:
        Under replicated blocks: 0
        Blocks with corrupt replicas: 0
        Missing blocks: 10
        Missing blocks (with replication factor 1): 0
        Pending deletion blocks: 0
Erasure Coded Block Groups:
        Low redundancy block groups: 0
        Block groups with corrupt internal blocks: 0
        Missing block groups: 0
        Pending deletion blocks: 0
.
.
```

More precisely:

```
[hdfs@client_node ~]$ hdfs fsck -list-corruptfileblocks
Connecting to namenode via http://namenode01.domain.com:50070/fsck?ugi=hdfs&listcorruptfileblocks=1&path=%2F
The list of corrupt files under path '/' are:
blk_1073744038 /hdp/apps/3.0.0.0-1634/mapreduce/mapreduce.tar.gz
blk_1073744039 /hdp/apps/3.0.0.0-1634/mapreduce/mapreduce.tar.gz
blk_1073744040 /hdp/apps/3.0.0.0-1634/mapreduce/mapreduce.tar.gz
blk_1073744041 /hdp/apps/3.0.0.0-1634/yarn/service-dep.tar.gz
blk_1073744042 /apps/hbase/data/hbase.version
blk_1073744043 /apps/hbase/data/hbase.id
blk_1073744044 /apps/hbase/data/data/hbase/meta/1588230740/.regioninfo
blk_1073744045 /apps/hbase/data/data/hbase/meta/.tabledesc/.tableinfo.0000000001
blk_1073744046 /apps/hbase/data/MasterProcWALs/pv2-00000000000000000001.log
blk_1073744047 /apps/hbase/data/WALs/datanode01.domain.com,16020,1576839178870/datanode01.domain.com%2C16020%2C1576839178870.1576839191114
The filesystem under path '/' has 10 CORRUPT files
```
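Note that these 10 entries cover only 8 distinct files, because mapreduce.tar.gz has three corrupt blocks. Here is a sketch that reduces the list to unique file paths, assuming each entry sits on its own line as `blk_<id> <path>` and that paths contain no whitespace (the function name is hypothetical):

```shell
# POSIX sh + awk. Hypothetical helper: read `hdfs fsck -list-corruptfileblocks`
# output on stdin and print each affected file once.
corrupt_file_paths() {
  # Keep only "blk_<id> <path>" entries (first field is a bare block name),
  # print the path, and drop duplicates from files with several corrupt blocks.
  awk '$1 ~ /^blk_[0-9]+$/ && NF >= 2 { print $2 }' | sort -u
}

# Usage on a client node:
#   hdfs fsck -list-corruptfileblocks | corrupt_file_paths
```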

This is why I was not able to start the HBase-related components, and why even the HBase daemons were failing: files such as /apps/hbase/data/hbase.version could not be read from any DataNode:

```
[hdfs@client_node ~]$ hdfs dfs -cat /apps/hbase/data/hbase.version
19/12/20 15:41:12 WARN hdfs.DFSClient: No live nodes contain block BP-369465004-10.75.46.68-1539340329592:blk_1073744042_3218 after checking nodes = [], ignoredNodes = null
19/12/20 15:41:12 INFO hdfs.DFSClient: No node available for BP-369465004-10.75.46.68-1539340329592:blk_1073744042_3218 file=/apps/hbase/data/hbase.version
19/12/20 15:41:12 INFO hdfs.DFSClient: Could not obtain BP-369465004-10.75.46.68-1539340329592:blk_1073744042_3218 from any node: No live nodes contain current block Block locations: Dead nodes: . Will get new block locations from namenode and retry...
19/12/20 15:41:12 WARN hdfs.DFSClient: DFS chooseDataNode: got # 1 IOException, will wait for 2312.7779354694017 msec.
19/12/20 15:41:14 WARN hdfs.DFSClient: No live nodes contain block BP-369465004-10.75.46.68-1539340329592:blk_1073744042_3218 after checking nodes = [], ignoredNodes = null
19/12/20 15:41:14 INFO hdfs.DFSClient: No node available for BP-369465004-10.75.46.68-1539340329592:blk_1073744042_3218 file=/apps/hbase/data/hbase.version
19/12/20 15:41:14 INFO hdfs.DFSClient: Could not obtain BP-369465004-10.75.46.68-1539340329592:blk_1073744042_3218 from any node: No live nodes contain current block Block locations: Dead nodes: . Will get new block locations from namenode and retry...
19/12/20 15:41:14 WARN hdfs.DFSClient: DFS chooseDataNode: got # 2 IOException, will wait for 8143.439259842901 msec.
19/12/20 15:41:22 WARN hdfs.DFSClient: No live nodes contain block BP-369465004-10.75.46.68-1539340329592:blk_1073744042_3218 after checking nodes = [], ignoredNodes = null
19/12/20 15:41:22 INFO hdfs.DFSClient: No node available for BP-369465004-10.75.46.68-1539340329592:blk_1073744042_3218 file=/apps/hbase/data/hbase.version
19/12/20 15:41:22 INFO hdfs.DFSClient: Could not obtain BP-369465004-10.75.46.68-1539340329592:blk_1073744042_3218 from any node: No live nodes contain current block Block locations: Dead nodes: . Will get new block locations from namenode and retry...
19/12/20 15:41:22 WARN hdfs.DFSClient: DFS chooseDataNode: got # 3 IOException, will wait for 11760.036759939097 msec.
19/12/20 15:41:34 WARN hdfs.DFSClient: No live nodes contain block BP-369465004-10.75.46.68-1539340329592:blk_1073744042_3218 after checking nodes = [], ignoredNodes = null
19/12/20 15:41:34 WARN hdfs.DFSClient: Could not obtain block: BP-369465004-10.75.46.68-1539340329592:blk_1073744042_3218 file=/apps/hbase/data/hbase.version No live nodes contain current block Block locations: Dead nodes: . Throwing a BlockMissingException
19/12/20 15:41:34 WARN hdfs.DFSClient: No live nodes contain block BP-369465004-10.75.46.68-1539340329592:blk_1073744042_3218 after checking nodes = [], ignoredNodes = null
19/12/20 15:41:34 WARN hdfs.DFSClient: Could not obtain block: BP-369465004-10.75.46.68-1539340329592:blk_1073744042_3218 file=/apps/hbase/data/hbase.version No live nodes contain current block Block locations: Dead nodes: . Throwing a BlockMissingException
19/12/20 15:41:34 WARN hdfs.DFSClient: DFS Read
org.apache.hadoop.hdfs.BlockMissingException: Could not obtain block: BP-369465004-10.75.46.68-1539340329592:blk_1073744042_3218 file=/apps/hbase/data/hbase.version
        at org.apache.hadoop.hdfs.DFSInputStream.refetchLocations(DFSInputStream.java:870)
        at org.apache.hadoop.hdfs.DFSInputStream.chooseDataNode(DFSInputStream.java:853)
        at org.apache.hadoop.hdfs.DFSInputStream.chooseDataNode(DFSInputStream.java:832)
        at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:564)
        at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:754)
        at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:820)
        at java.io.DataInputStream.read(DataInputStream.java:100)
        at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:94)
        at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:68)
        at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:129)
        at org.apache.hadoop.fs.shell.Display$Cat.printToStdout(Display.java:101)
        at org.apache.hadoop.fs.shell.Display$Cat.processPath(Display.java:96)
        at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:331)
        at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:303)
        at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:285)
        at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:269)
        at org.apache.hadoop.fs.shell.FsCommand.processRawArguments(FsCommand.java:120)
        at org.apache.hadoop.fs.shell.Command.run(Command.java:176)
        at org.apache.hadoop.fs.FsShell.run(FsShell.java:328)
        at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)
        at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)
        at org.apache.hadoop.fs.FsShell.main(FsShell.java:391)
cat: Could not obtain block: BP-369465004-10.75.46.68-1539340329592:blk_1073744042_3218 file=/apps/hbase/data/hbase.version
```
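When a client reports a missing block, the block ID and file name can be pulled out of the log, and the file then inspected with `hdfs fsck <path> -files -blocks -locations`, which lists each block of the file and the DataNodes holding its replicas. The extraction helper below is a hypothetical sketch built around the `blk_<id>_<genstamp> file=<path>` pattern visible in the messages above; it relies on `grep -o`, which GNU and BSD grep provide but POSIX does not require.

```shell
# POSIX sh + grep -o (GNU/BSD). Hypothetical helper: read DFSClient messages on
# stdin and print each distinct "blk_<id>_<genstamp> file=<path>" pair once.
missing_blocks_from_log() {
  grep -oE 'blk_[0-9]+_[0-9]+ file=[^ ]+' | sort -u
}

# Usage on a client node: keep stderr (where the client messages normally go),
# discard the file content itself, then ask the NameNode about that file.
#   hdfs dfs -cat /apps/hbase/data/hbase.version 2>&1 >/dev/null | missing_blocks_from_log
#   hdfs fsck /apps/hbase/data/hbase.version -files -blocks -locations
```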

There is no miracle solution here: I simply let fsck delete the corrupted files:

```
[hdfs@client_node ~]$ hdfs fsck / -delete
Connecting to namenode via http://namenode01.domain.com:50070/fsck?ugi=hdfs&delete=1&path=%2F
FSCK started by hdfs (auth:SIMPLE) from /10.75.46.69 for path / at Fri Dec 20 15:41:53 CET 2019

/apps/hbase/data/MasterProcWALs/pv2-00000000000000000001.log: MISSING 1 blocks of total size 34358 B.
/apps/hbase/data/WALs/datanode01.domain.com,16020,1576839178870/datanode01.domain.com%2C16020%2C1576839178870.1576839191114: MISSING 1 blocks of total size 98 B.
/apps/hbase/data/data/hbase/meta/.tabledesc/.tableinfo.0000000001: MISSING 1 blocks of total size 996 B.
/apps/hbase/data/data/hbase/meta/1588230740/.regioninfo: MISSING 1 blocks of total size 32 B.
/apps/hbase/data/hbase.id: MISSING 1 blocks of total size 42 B.
/apps/hbase/data/hbase.version: MISSING 1 blocks of total size 7 B.
/hdp/apps/3.0.0.0-1634/mapreduce/mapreduce.tar.gz: MISSING 3 blocks of total size 306766434 B.
/hdp/apps/3.0.0.0-1634/yarn/service-dep.tar.gz: MISSING 1 blocks of total size 92348160 B.

Status: CORRUPT
 Number of data-nodes: 3
 Number of racks: 1
 Total dirs: 780
 Total symlinks: 0

Replicated Blocks:
 Total size: 1404802556 B
 Total files: 190 (Files currently being written: 1)
 Total blocks (validated): 47 (avg. block size 29889416 B)
  ********************************
  UNDER MIN REPL'D BLOCKS: 10 (21.276596 %)
  MINIMAL BLOCK REPLICATION: 1
  CORRUPT FILES: 8
  MISSING BLOCKS: 10
  MISSING SIZE: 399150127 B
  ********************************
 Minimally replicated blocks: 37 (78.723404 %)
 Over-replicated blocks: 0 (0.0 %)
 Under-replicated blocks: 0 (0.0 %)
 Mis-replicated blocks: 0 (0.0 %)
 Default replication factor: 3
 Average block replication: 2.3617022
 Missing blocks: 10
 Corrupt blocks: 0
 Missing replicas: 0 (0.0 %)

Erasure Coded Block Groups:
 Total size: 0 B
 Total files: 0
 Total block groups (validated): 0
 Minimally erasure-coded block groups: 0
 Over-erasure-coded block groups: 0
 Under-erasure-coded block groups: 0
 Unsatisfactory placement block groups: 0
 Average block group size: 0.0
 Missing block groups: 0
 Corrupt block groups: 0
 Missing internal blocks: 0
FSCK ended at Fri Dec 20 15:41:54 CET 2019 in 306 milliseconds

The filesystem under path '/' is CORRUPT
```
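`-delete` is irreversible. `hdfs fsck` also offers `-move`, which moves the corrupted files to /lost+found instead, and both options can be restricted to a subtree rather than the whole namespace. Below is a sketch of a wrapper with a dry-run mode; the function name and the `DRY_RUN` variable are my own assumptions, not part of the HDFS CLI.

```shell
# POSIX sh. Hypothetical wrapper around `hdfs fsck <path> -move|-delete`.
#   -move   : move corrupted files to /lost+found
#   -delete : delete corrupted files (irreversible)
# With DRY_RUN=1 the command is printed instead of executed.
fsck_repair() {
  mode="$1"
  target="${2:-/}"   # default to the whole namespace, as in the example above
  case "$mode" in
    move|delete) ;;
    *) echo "usage: fsck_repair move|delete [path]" >&2; return 2 ;;
  esac
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "hdfs fsck $target -$mode"
  else
    hdfs fsck "$target" "-$mode"
  fi
}

# Usage: review first, then act on the HBase subtree only.
#   DRY_RUN=1 fsck_repair move /apps/hbase
#   fsck_repair move /apps/hbase
```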

Finally solved, with data loss of course:

```
[hdfs@client_node ~]$ hdfs fsck -list-corruptfileblocks
Connecting to namenode via http://namenode01.domain.com:50070/fsck?ugi=hdfs&listcorruptfileblocks=1&path=%2F
The filesystem under path '/' has 0 CORRUPT files
```

And:

```
[hdfs@client_node ~]$ hdfs dfsadmin -report
Configured Capacity: 6139207680 (5.72 GB)
Present Capacity: 5701216450 (5.31 GB)
DFS Remaining: 2659930112 (2.48 GB)
DFS Used: 3041286338 (2.83 GB)
DFS Used%: 53.34%
Replicated Blocks:
        Under replicated blocks: 0
        Blocks with corrupt replicas: 0
        Missing blocks: 0
        Missing blocks (with replication factor 1): 0
        Pending deletion blocks: 0
Erasure Coded Block Groups:
        Low redundancy block groups: 0
        Block groups with corrupt internal blocks: 0
        Missing block groups: 0
        Pending deletion blocks: 0
```
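To check the same counters from a script instead of reading the report, here is a minimal sketch that extracts one value by name and fails when blocks are missing or have corrupt replicas. The function names are hypothetical, and the parsing assumes the `<name>: <value>` layout shown above, with optional indentation.

```shell
# POSIX sh + awk. Hypothetical helper: print the value of one counter from
# `hdfs dfsadmin -report` output on stdin. Indentation is ignored and only the
# first exact "<name>: " match is used, so "Missing blocks" does not match
# "Missing blocks (with replication factor 1)".
report_value() {
  awk -v key="$1: " '
    {
      line = $0
      sub(/^[ \t]+/, "", line)   # drop leading tabs or spaces
      if (index(line, key) == 1) {
        print substr(line, length(key) + 1)
        exit
      }
    }
  '
}

# Succeed only if both counters are present and equal to 0.
hdfs_blocks_ok() {
  report=$(cat)
  missing=$(printf '%s\n' "$report" | report_value "Missing blocks")
  corrupt=$(printf '%s\n' "$report" | report_value "Blocks with corrupt replicas")
  [ -n "$missing" ] && [ -n "$corrupt" ] && [ "$missing" -eq 0 ] && [ "$corrupt" -eq 0 ]
}

# Usage on a client node:
#   hdfs dfsadmin -report | hdfs_blocks_ok || echo "HDFS: missing or corrupt blocks"
```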
