Table of contents

Preamble

Hadoop backup, wide and highly important subject and most probably like me you have been surprised by poor availability of official documents and this is most probably why you have landed here trying to find a first answer ! Needless to say this blog post is far to be complete so please do not hesitate to submit a comment and I would enrich this document with great pleasure !

One of the main difficulty with Hadoop is its scale out natural essence that’s make difficult to understand what’s nice to backup and what is REALLY important to backup.

I have split the article in three parts:

- First part is what you MUST backup to be able to survive to a major issue

- Second part is what is not required to be backed-up.

- Third part is what is nice to backup.

Also, I repeat, I’m interested by any comment you might have that would help to enrich this document or correct any mistake…

Mandatory parts to backup

Configuration files

All files under /etc and /usr/hdp on edge nodes (so not for your workers nodes). On the principle you could recreate them from scratch but you surely do not want to loose multiple months or years of fine tuning isn’t it ?

Theoretically all your configuration files will be saved when saving Ambari server meta info but if you have a corporate tool to backup your host OS it is worth putting the two above directories as it is sometimes much simpler to restore a single files from those tools…

Those edge nodes are:

- Master nodes

- Management nodes

- Client nodes

- Utilities node (Hive, …)

- Analytics Nodes

In other words all except worker nodes..

Ambari server meta info

[root@mgmtserver ~]# ambari-server backup /tmp/ambari-server-backup.zip Using python /usr/bin/python Backing up Ambari File System state... *this will not backup the server database* Backup requested. Backup process initiated. Creating zip file... Zip file created at /tmp/ambari-server-backup.zip Backup complete. Ambari Server 'backup' completed successfully. [root@mgmtserver ~]# ll /tmp/ambari-server-backup.zip -rw-r--r-- 1 root root 2444590592 Dec 3 17:01 /tmp/ambari-server-backup.zip |

To restore this backup in case of a big crash the command is:

[root@mgmtserver ~]# ambari-server restore /tmp/ambari-server-backup.zip |

NameNode metadata

As they say in NameNode this component is key:

The NameNode is the centerpiece of an HDFS file system. It keeps the directory tree of all files in the file system, and tracks where across the cluster the file data is kept. It does not store the data of these files itself.

While it’s not an Oracle database and a continuous backup is not possible:

Regardless of the solution, a full, up-to-date continuous backup of the namespace is not possible. Some of the most recent data is always lost. HDFS is not an Online Transaction Processing (OLTP) system. Most data can be easily recreated if you re-run Extract, Transform, Load (ETL) or processing jobs.

The always working procedure to do a backup of your NameNode is really simple:

[hdfs@namenode_primary ~]$ hdfs dfsadmin -saveNamespace saveNamespace: Safe mode should be turned ON in order to create namespace image. [hdfs@namenode_primary ~]$ hdfs dfsadmin -safemode enter Safe mode is ON [hdfs@namenode_primary ~]$ hdfs dfsadmin -safemode get Safe mode is ON [hdfs@namenode_primary ~]$ hdfs dfsadmin -saveNamespace Save namespace successful [hdfs@namenode_primary ~]$ hdfs dfsadmin -safemode leave Safe mode is OFF [hdfs@namenode_primary ~]$ hdfs dfsadmin -safemode get Safe mode is OFF [hdfs@namenode_primary ~]$ hdfs dfsadmin -fetchImage /tmp 19/01/07 12:57:10 INFO namenode.TransferFsImage: Opening connection to http://namenode_primary.domain.com:50070/imagetransfer?getimage=1&txid=latest 19/01/07 12:57:10 INFO namenode.TransferFsImage: Image Transfer timeout configured to 60000 milliseconds 19/01/07 12:57:10 INFO namenode.TransferFsImage: Combined time for fsimage download and fsync to all disks took 0.04s. The fsimage download took 0.04s at 167097.56 KB/s. Synchronous (fsync) write to disk of /tmp/fsimage_0000000000002300573 took 0.00s. |

Then you can put in a safe place (tape, SAN, NFS, …) the file that has been copied to /tmp directory. But this has anyways the bad idea to put your entire cluster in read only mode (safemode), so in a 24/7 production cluster this is surely not something you can accept…

All your running processes will end with something like:

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.server.namenode.SafeModeException): Cannot complete file /apps/hive/warehouse/database.db/table_orc/.hive-staging_hive_2019-02-12_06-12-16_976_7305679997226277861-21596/_task_tmp.-ext-10002/_tmp.000199_0. Name node is in safe mode. It was turned on manually. Use "hdfs dfsadmin -safemode leave" to turn safe mode off. |

In the initial releases of Hadoop the NameNode was a Single Point Of Failure (SPOF) as you could have only what is called a secondary NameNode. The secondary NameNode handle an important CPU intensive task called checkpointing. Checkpointing is the operation to combine edits logs files (edits_xx files) and latest fsimage file to create an up to date HDFS filesystem metadata snapshot (fsimage_xxx file). But the secondary NameNode cannot be used as a failover of the primary NameNode so in case of failure is can only be used to rebuild the primary NameNode, not to take his role.

In Haddop 2.0 this limitation has gone and in an High Availability (HA) mode you can have a standby NameNode that does same job as secondary NameNode and can also take the role of the primary NameNode by a simple switch.

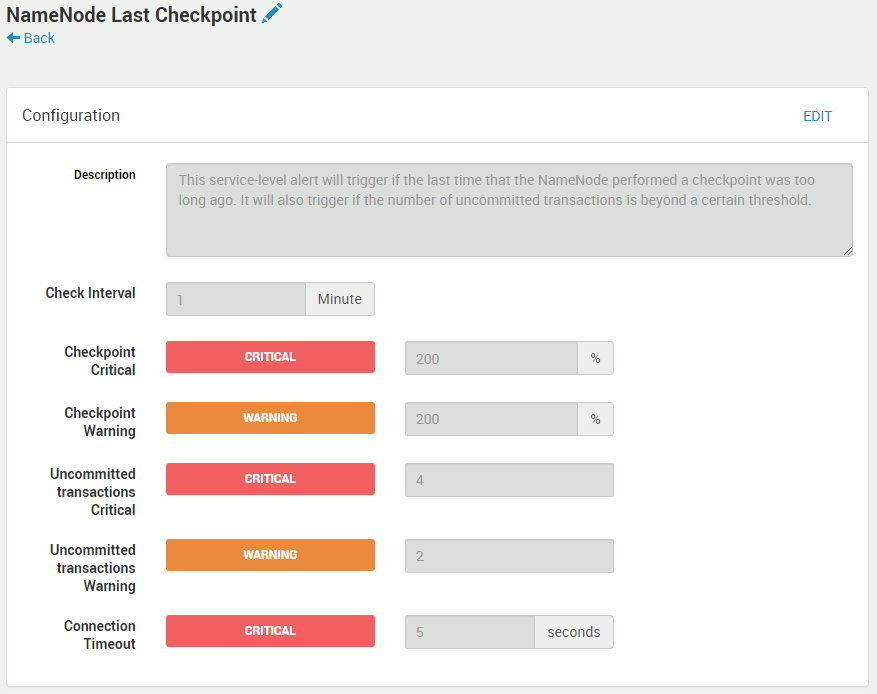

If for any reason this checkpoint operation has not happened since long you will receive the scary NameNode Last Checkpoint Ambari alert:

This alert will also trigger below Ambari warning when you will try to stop NameNode process (when the NameNode restart is read the latest fsimage and re-apply to it all the edits log files generated since):

Needless to say that having your NameNode service in High Availability (active/standby) is strongly suggested !

Whether you have NameNode in HA or not there is a list of important parameters to consider with the value we have chosen, maybe I should decrease the checkpoint period value:

- dfs.namenode.name.dir = /hadoop/hdfs

- dfs.namenode.checkpoint.period = 21600 (in seconds i.e. 6 hours)

- dfs.namenode.checkpoint.txns = 1000000

- dfs.namenode.checkpoint.check.period = 60

But in this case on your standby or secondary NameNode every dfs.namenode.checkpoint.period or every dfs.namenode.checkpoint.txns whichever is reached first you will have a new checkpoint file and the cool thing is that this latest checkpoint is copied back to your active NameNode. In below the checkpoint at 07:08 is the periodic automatic checkpoint while the one at 06:15 is the one we have explicitly done with a hdfs dfsadmin -saveNamespace command.

On standby NameNode:

[root@namenode_standby ~]# ll -rt /hadoop/hdfs/current/fsimage* -rw-r--r-- 1 hdfs hadoop 650179252 Feb 13 06:15 /hadoop/hdfs/current/fsimage_0000000000520456166 -rw-r--r-- 1 hdfs hadoop 62 Feb 13 06:15 /hadoop/hdfs/current/fsimage_0000000000520456166.md5 -rw-r--r-- 1 hdfs hadoop 650235574 Feb 13 07:08 /hadoop/hdfs/current/fsimage_0000000000520466841 -rw-r--r-- 1 hdfs hadoop 62 Feb 13 07:08 /hadoop/hdfs/current/fsimage_0000000000520466841.md5 |

On active Namenode:

[root@namenode_primary ~]# ll -rt /hadoop/hdfs/current/fsimage* -rw-r--r-- 1 hdfs hadoop 62 Feb 13 06:15 /hadoop/hdfs/current/fsimage_0000000000520456198.md5 -rw-r--r-- 1 hdfs hadoop 650179470 Feb 13 06:15 /hadoop/hdfs/current/fsimage_0000000000520456198 -rw-r--r-- 1 hdfs hadoop 650235574 Feb 13 07:08 /hadoop/hdfs/current/fsimage_0000000000520466841 -rw-r--r-- 1 hdfs hadoop 62 Feb 13 07:08 /hadoop/hdfs/current/fsimage_0000000000520466841.md5 |

So in a NameNode HA cluster you can just copy regularly the dfs.namenode.name.dir to a safe place (tape, NFS, …) and you are not obliged to enter this impacting safemode…

If at a point in time you don’t have Ambari and/or you want to script it here is the commands to get your active and standby NameNode servers:

[hdfs@namenode_primary ~]$ hdfs getconf -confKey dfs.ha.namenodes.mycluster nn1,nn2 [hdfs@namenode_primary ~]$ hdfs getconf -confKey dfs.namenode.rpc-address.mycluster.nn1 namenode_standby.domain.com:8020 [hdfs@namenode_primary ~]$ hdfs getconf -confKey dfs.namenode.rpc-address.mycluster.nn2 namenode_primary.domain.com:8020 [hdfs@namenode_primary ~]$ hdfs haadmin -getServiceState nn1 standby [hdfs@namenode_primary ~]$ hdfs haadmin -getServiceState nn2 active |

Ambari repository database

Our Ambari repository database is a PostgreSQL one, if you have chosen MySQL refer to next chapter.

Backup with Point In Time Recovery (PITR) capability

As clearly explained in the documentation there is a tool to do it called pg_basebackup. To use it you need to put your PostgreSQL instance in write ahead log (WAL) mode that is equivalent of binary logging of MySQL or archive log mode of Oracle. This is done by setting three parameters in postgresql.conf file:

- wal_level = replica

- archive_mode = on

- archive_command = ‘test ! -f /var/lib/pgsql/backups/%f && cp %p /var/lib/pgsql/backups/%f’

Remark:

The archive command that has been chosen is just an example that will copy WAL files to a backup directory that you obviously need to save to a secure place.

If not done you will end up with below error message:

[postgres@fedora1 ~]$ pg_basebackup --pgdata=/tmp/pgbackup01 pg_basebackup: could not get write-ahead log end position from server: ERROR: could not open file "./.postgresql.conf.swp": Permission denied pg_basebackup: removing data directory "/tmp/pgbackup01" |

Once done and activated (restart required) you can make and online backup that can be used to perform PITR with:

[postgres@fedora1 ~]$ pg_basebackup --pgdata=/tmp/pgbackup01 [postgres@fedora1 ~]$ ll /tmp/pgbackup01 total 52 -rw------- 1 postgres postgres 206 Nov 30 18:02 backup_label drwx------ 6 postgres postgres 120 Nov 30 18:02 base -rw------- 1 postgres postgres 30 Nov 30 18:02 current_logfiles drwx------ 2 postgres postgres 1220 Nov 30 18:02 global drwx------ 2 postgres postgres 80 Nov 30 18:02 log drwx------ 2 postgres postgres 40 Nov 30 18:02 pg_commit_ts drwx------ 2 postgres postgres 40 Nov 30 18:02 pg_dynshmem -rw------- 1 postgres postgres 4414 Nov 30 18:02 pg_hba.conf -rw------- 1 postgres postgres 1636 Nov 30 18:02 pg_ident.conf drwx------ 2 postgres postgres 40 Nov 30 18:02 pg_log drwx------ 4 postgres postgres 100 Nov 30 18:02 pg_logical drwx------ 4 postgres postgres 80 Nov 30 18:02 pg_multixact drwx------ 2 postgres postgres 40 Nov 30 18:02 pg_notify drwx------ 2 postgres postgres 40 Nov 30 18:02 pg_replslot drwx------ 2 postgres postgres 40 Nov 30 18:02 pg_serial drwx------ 2 postgres postgres 40 Nov 30 18:02 pg_snapshots drwx------ 2 postgres postgres 40 Nov 30 18:02 pg_stat drwx------ 2 postgres postgres 40 Nov 30 18:02 pg_stat_tmp drwx------ 2 postgres postgres 40 Nov 30 18:02 pg_subtrans drwx------ 2 postgres postgres 40 Nov 30 18:02 pg_tblspc drwx------ 2 postgres postgres 40 Nov 30 18:02 pg_twophase -rw------- 1 postgres postgres 3 Nov 30 18:02 PG_VERSION drwx------ 3 postgres postgres 80 Nov 30 18:02 pg_wal drwx------ 2 postgres postgres 60 Nov 30 18:02 pg_xact -rw------- 1 postgres postgres 88 Nov 30 18:02 postgresql.auto.conf -rw------- 1 postgres postgres 22848 Nov 30 18:02 postgresql.conf [postgres@fedora1 pg_wal]$ ll /var/lib/pgsql/backups/ total 32772 -rw------- 1 postgres postgres 16777216 Nov 30 18:02 000000010000000000000002 -rw------- 1 postgres postgres 16777216 Nov 30 18:02 000000010000000000000003 -rw------- 1 postgres postgres 302 Nov 30 18:02 000000010000000000000003.00000060.backup [postgres@fedora1 pg_wal]$ cat /var/lib/pgsql/backups/000000010000000000000003.00000060.backup START WAL LOCATION: 0/3000060 (file 000000010000000000000003) STOP WAL LOCATION: 0/3000130 (file 000000010000000000000003) CHECKPOINT LOCATION: 0/3000098 BACKUP METHOD: streamed BACKUP FROM: master START TIME: 2018-11-30 18:02:03 CET LABEL: pg_basebackup base backup STOP TIME: 2018-11-30 18:02:03 CET [postgres@fedora1 pg_wal]$ ll /var/lib/pgsql/data/pg_wal/ total 49156 -rw------- 1 postgres postgres 16777216 Nov 30 18:02 000000010000000000000002 -rw------- 1 postgres postgres 16777216 Nov 30 18:02 000000010000000000000003 -rw------- 1 postgres postgres 302 Nov 30 18:02 000000010000000000000003.00000060.backup -rw------- 1 postgres postgres 16777216 Nov 30 18:02 000000010000000000000004 drwx------ 2 postgres postgres 133 Nov 30 18:02 archive_status [postgres@fedora1 pg_wal]$ ll /var/lib/pgsql/data/pg_wal/archive_status/ total 0 -rw------- 1 postgres postgres 0 Nov 30 18:02 000000010000000000000002.done -rw------- 1 postgres postgres 0 Nov 30 18:02 000000010000000000000003.00000060.backup.done -rw------- 1 postgres postgres 0 Nov 30 18:02 000000010000000000000003.done |

You can also directly generate TAR files with:

[postgres@fedora1 pg_wal]$ pg_basebackup --pgdata=/tmp/pgbackup02 --format=t [postgres@fedora1 pg_wal]$ ll /tmp/pgbackup02 total 48128 -rw-r--r-- 1 postgres postgres 32500224 Nov 30 18:11 base.tar -rw------- 1 postgres postgres 16778752 Nov 30 18:11 pg_wal.tar |

Backup with no PITR capability

This method is obviously based on the creation of a dump file. Either you use pg_dump or pg_dumpall.

At this stage either you do all with postgres Linux account that is able to connect in a password less fashion, thanks to default pg_hba.conf file:

# TYPE DATABASE USER ADDRESS METHOD # "local" is for Unix domain socket connections only local all postgres peer # IPv4 local connections: host all postgres 127.0.0.1/32 ident # IPv6 local connections: host all postgres ::1/128 ident # Allow replication connections from localhost, by a user with the # replication privilege. local replication postgres peer host replication postgres 127.0.0.1/32 ident host replication postgres ::1/128 ident |

Or you set it for another account that has less privileges, the owner of the database you want to backup for example. I initially tried with PGPASSWORD but this is apparently not working anymore in later releases of PostgreSQL (10.6 for the release I have used to test the feature):

[postgres@fedora1 ~]$ export PGPASSWORD='secure_password' [postgres@fedora1 ~]$ echo $PGPASSWORD secure_password [postgres@fedora1 ~]$ psql --dbname=ambari --username=ambari --password Password for user ambari: |

Our Ambari repository is older (9.2.23) but to prepare future better to move to password file. A password file is file called ~/.pgpass and having below structure:

hostname:port:database:username:password |

I have created it like:

[postgres@fedora1 ~]$ ll /var/lib/pgsql/.pgpass -rw-r--r-- 1 postgres postgres 37 Nov 30 15:12 /var/lib/pgsql/.pgpass [postgres@fedora1 ~]$ cat /var/lib/pgsql/.pgpass localhost:5432:ambari:ambari:secure_password |

The file must be 600 or less or you will get:

[postgres@fedora1 ~]$ psql --dbname=ambari --username=ambari WARNING: password file "/var/lib/pgsql/.pgpass" has group or world access; permissions should be u=rw (0600) or less Password for user ambari: |

Then you can connect without specifying a password:

[postgres@fedora1 ~]$ psql --dbname=ambari --username=ambari psql (10.6) Type "help" for help. ambari=> |

All this to do a backup off all databases with:

[postgres@fedora1 ~]$ pg_dumpall --file=/tmp/pgbackup.sql [postgres@fedora1 ~]$ ll /tmp/pgbackup.sql -rw-r--r-- 1 postgres postgres 3768 Nov 30 16:55 /tmp/pgbackup.sql |

Or just the Ambari one with:

[postgres@fedora1 ~]$ pg_dump --file=/tmp/pgbackup_ambari.sql ambari [postgres@fedora1 ~]$ ll /tmp/pgbackup_ambari.sql -rw-r--r-- 1 postgres postgres 1117 Nov 30 16:57 /tmp/pgbackup_ambari.sql |

Hive repository database

Our Hive repository database is a MySQL one, if you have chosen PostgreSQL refer to previous chapter.

Backup with Point In Time Recovery (PITR) capability

You must activate binary log by setting log-bin parameter in my.cnf file with something like (MOCA https://blog.yannickjaquier.com/mysql/mysql-replication-with-global-transaction-identifiers-gtid-hands-on.html:

log-bin = /mysql/logs/mysql01/mysql-bin |

You should end up with below configuration:

+---------------------------------+------------------------------------+ | Variable_name | Value | +---------------------------------+------------------------------------+ | log_bin | ON | | log_bin_basename | /mysql/logs/mysql01/mysql-bin | | log_bin_index | /mysql/logs/mysql01mysql-bin.index | +---------------------------------+------------------------------------+ |

First you must regularly backup the MySQL binary logs !

Before any online backup (snapshot) do the following to reset binary logs:

mysql> show binary logs; +------------------+-----------+ | Log_name | File_size | +------------------+-----------+ | mysql-bin.001087 | 242 | | mysql-bin.001088 | 242 | | mysql-bin.001089 | 242 | | mysql-bin.001090 | 9638 | | mysql-bin.001091 | 1538 | | mysql-bin.001092 | 242 | | mysql-bin.001093 | 242 | | mysql-bin.001094 | 1402 | | mysql-bin.001095 | 4314 | | mysql-bin.001096 | 2304 | | mysql-bin.001097 | 120 | +------------------+-----------+ 11 rows in set (0.00 sec) mysql> flush logs; Query OK, 0 rows affected (0.41 sec) mysql> show binary logs; +------------------+-----------+ | Log_name | File_size | +------------------+-----------+ | mysql-bin.001088 | 242 | | mysql-bin.001089 | 242 | | mysql-bin.001090 | 9638 | | mysql-bin.001091 | 1538 | | mysql-bin.001092 | 242 | | mysql-bin.001093 | 242 | | mysql-bin.001094 | 1402 | | mysql-bin.001095 | 4314 | | mysql-bin.001096 | 2304 | | mysql-bin.001097 | 167 | | mysql-bin.001098 | 120 | +------------------+-----------+ 11 rows in set (0.00 sec) mysql> purge binary logs to 'mysql-bin.001098'; Query OK, 0 rows affected (0.00 sec) mysql> show binary logs; +------------------+-----------+ | Log_name | File_size | +------------------+-----------+ | mysql-bin.001098 | 120 | +------------------+-----------+ 1 row in set (0.00 sec) |

Then take the snapshot by keeping tables in read lock with something like:

FLUSH TABLES WITH READ LOCK; \! lvcreate --snapshot --size 100M --name lvol98_save /dev/vg00/lvol98 or any snapshot command UNLOCK TABLES; |

Backup with no PITR capability

If you don’t want to activate binary logging and manage them or can afford to loose multiple hours of transaction you can simply perform a MySQL dump even once a week when your cluster is stabilized. Use a command like below to create a simple dump file:

[mysql@server1 ~] mysqldump --user=root -p --single-transaction --all-databases > /tmp/backup.sql |

Not mandatory parts to backup

JournalNodes

From Cloudera official documentation:

High-availabilty clusters use JournalNodes to synchronize active and standby NameNodes. The active NameNode writes to each JournalNode with changes, or “edits,” to HDFS namespace metadata. During failover, the standby NameNode applies all edits from the JournalNodes before promoting itself to the active state.

Those JournalNodes are installed only if your NameNode is in HA mode. They are preferred method to handle shared storage between your primary and standby NameNodes, this method is called Quorum Journal Manager(QJM).

Each time a new edits file is created or modified on primary NameNode it is also written on maximum (quorum) of JournalNodes. Standby NameNode constantly monitor the JournalNodes for any changes and apply them to its own namespace to be ready to failover primary NameNode in case of failure. All JournalNodes store more or less same files (edits_xx files and edits_inprogress_xx file) as NameNodes except that they do not have the checkpoint fsimages_xx results. You must have three or more (odd number) JournalNodes for high availability and to handle split brain scenarios.

The working directory of JournalNodes is defined by:

- dfs.journalnode.edits.dir = /var/qjn

On one JournalNode the real directory will be (cluster name is the name of your cluster that has been chosen at installation):

[root@journalnode01 ~]# ll -rt /var/qjn/<cluster name>/current . . . -rw-r--r-- 1 hdfs hadoop 1006436 Jan 18 12:13 edits_0000000000433896848-0000000000433901168 -rw-r--r-- 1 hdfs hadoop 133375 Jan 18 12:15 edits_0000000000433901169-0000000000433901822 -rw-r--r-- 1 hdfs hadoop 133652 Jan 18 12:17 edits_0000000000433901823-0000000000433902395 -rw-r--r-- 1 hdfs hadoop 918778 Jan 18 12:19 edits_0000000000433902396-0000000000433906383 -rw-r--r-- 1 hdfs hadoop 801672 Jan 18 12:21 edits_0000000000433906384-0000000000433910273 -rw-r--r-- 1 hdfs hadoop 76329 Jan 18 12:23 edits_0000000000433910274-0000000000433910699 -rw-r--r-- 1 hdfs hadoop 90404 Jan 18 12:25 edits_0000000000433910700-0000000000433911201 -rw-r--r-- 1 hdfs hadoop 48435 Jan 18 12:27 edits_0000000000433911202-0000000000433911468 -rw-r--r-- 1 hdfs hadoop 882923 Jan 18 12:29 edits_0000000000433911469-0000000000433915208 -rw-r--r-- 1 hdfs hadoop 1048576 Jan 18 12:31 edits_inprogress_0000000000433915209 -rw-r--r-- 1 hdfs hadoop 8 Jan 18 12:31 committed-txid |

So as such JournalNodes do not contains any required information that can be inherited from NameNode so nothing to backup…

Parts nice to backup

HDFS

In essence your Hadoop cluster has surely been built to handle Terabytes, not to say Petabytes, of data. So doing a backup of all your HDFS data is technically not possible. First HDFS is replicating each data block (of dfs.blocksize in size, 128MB by default) multiple times (parameter is dfs.replication and is set to 3 in my case and you have surely configured what is call rack awareness. Means your worker nodes are physically in different racks in your computer room.

So in other words is you loose one or multiple worker nodes or even a complete rack of your Hadoop cluster this is going to be completely transparent to your application. At worst you might suffer from a performance decrease but no interruption to production (ITP).

But what if you loose the entire data center where is located your Hadoop cluster ? We initially had the idea to split our cluster between two data center geographically separated by 20-30 Kilometers (12 to 18 miles) but this would require a (dedicated) low latency high speed link (dark fiber or else) between the two data centers which is most probably not cost effective…

This is why the most implemented architecture is a second smaller cluster in a remote site where you will try to have a copy of your main Hadoop cluster. This copy can be done by provided Haddop tool called DistCp or simply by running the exact same ingestion process on this failover cluster…

Running the same ingestion process on two distinct clusters might sound a bad idea but if you store your source raw files on a low cost NFS filer then, first, you can easily backup them to tape. Secondly, you can use same exact copy from two (or more) Hadoop cluster and in case of crash or consistency issue you are able to restart the ingestion from raw files. The secondary cluster can then be, with no issue, smaller that the primary one as only ingestion will run on it. Interactive queries and users will remain on primary cluster…

Here I have not at all mentioned HDFS snapshot because for me it is not a all a backup solution ! This is not different from a NFS snapshot and the only case you handle with this is human error. in case of a hardware failure or a data center failure this HDFS snapshot will be of no help as you will loose it at same time of the crash…

References

- A Guide to Checkpointing in Hadoop

- Get Ready to Backup the HDFS Metadata

- Backing Up and Restoring NameNode Metadata

- HDFS Commands

- HDFS Commands Guide

- Journalnodes

- Quorum-based Journaling in CDH4.1

- Hadoop architectural overview

- Hadoop architectural overview

- HDFS Metadata Directories Explained

Jürgen Wich says:

Hi Yannick, very interesting article. One question relating NN backup: Cloudera suggests using hdfs dfsadmin -fetchImage backup_dir to make a backup of fsimage and backing up the VERSION file is sufficient to have a consistent backup of NN. So it seems, that it is not necessary to backup the whole directory with all the edit files to make a desaster recovery. Do you also agree?

Yannick Jaquier says:

Hi Jürgen,

Thanks for nice comment !

Yes they are certainly right (they own the product so who am I ?). I have tried the hdfs dfsadmin -fetchImage command on my standby NN and it works fine obviously without entering in safe mode. This is most probably sufficient even if the created file is not readable as is so difficult to say what it contains. Also as said in my blog post there is no clear direction from Cloudera on how to backup so…

But overall my /hadoop/hdfs/current/ directory is only a bit more than 3GB for a bit more of 200TB of HDFS.

Will you really take any risk for few GB to backup (belt and brasses approach) ?